This article was written in collaboration with BCG's Center for Leadership in Cyber Strategy.

Consider the following scenarios involving AI agents:

- Attackers influence the chatbot of a wellness spa to recommend unsafe products by altering the agent’s understanding of user preferences. Several customers wind up in the emergency room.

- Hackers insert themselves between a bank’s consumer loan chatbot and its back-end services, stealing sensitive customer information—income details, loan approval status, and personal identifiers—that was being transmitted without proper encryption. They also secure 0% loans.

- An AI agent working at the direction of a confidential M&A team circulates information on a target company to a broader group that includes the spouse of the target’s CFO.

- An AI agent in procurement auto-renews a multimillion-dollar contract with an underperforming vendor because it is not part of a feedback loop on user experiences.

The scenarios are real—or really possible. The first two, involving fully functional AI agents, came to light in exercises organized by BCG and Mandiant, Google’s incident response and threat intelligence arm. The other two are creations of ChatGPT, that is, of GenAI itself. While AI agents are powerful tools that can help companies achieve their most important business objectives, unsecured they come with big risks that have potentially catastrophic financial, reputational, and legal ramifications.


Neither bespoke nor out-of-the-box agents are safe to start with. Safeguards and guardrails need to be designed, built in, monitored, and adjusted. But security also needs to be implemented at scale in ways that do not slow down or undermine the agent’s performance. Since these technologies are still in their infancy, most organizations are not yet familiar with implementing critical systems. They lack a structured approach to handle the complexity, understand agents’ interactions with legacy systems, and manage governance effectively.

To help organizations structure their approach to securely deploying GenAI agentic systems, BCG developed the Framework for Agentic AI Secure Transformation, or FAST. FAST is a comprehensive strategic, technical, and organizational assessment model. It enables companies to evaluate where they stand today and identify the capabilities required to ensure the safe and reliable deployment of AI agents, either as a proof of concept or in a full-scale transformation.

A Two-Edged Cybersword

BCG has been working with clients to build and implement AI agents since 2023. We have seen agents create huge transformative value that goes far beyond simple automation to include material increases in revenues, EBIT, market share, and customer satisfaction scores. Little surprise that the market for agents is expected to grow by 45% a year between 2024 and 2030, according to Grand View Research.

But agents are a double-edged sword, as the examples above show. Their potential to transform industries is matched only by the risks they introduce, creating a dynamic challenge for organizations. Agents’ inherent flexibility enables powerful applications—from intuitive customer support chatbots to virtual assistants capable of complex decision making—but it also significantly increases the potential risk.

A key contributor to this expanded risk exposure is natural language processing. While traditional software systems operate within predictable parameters, natural language processing thrives on dynamic user interactions, interpreting words, phrases, and emotions to generate real-time outputs. Malicious actors have learned to manipulate this adaptability through such techniques as prompt injection, context poisoning, and API exploitation, causing not just theoretical vulnerabilities but concrete, demonstrable risks. Three types of risk are especially worrisome:

- Semantic Prompt Manipulation. Prompts are the lifeblood of AI agents, guiding their responses and shaping their behavior. But the flexibility of prompts and the adaptability of agents make systems inherently unpredictable. Even seemingly benign inputs can yield unintended outputs and enable invading actors to craft, or copy and paste, malignant prompts designed to manipulate AI into revealing sensitive information or performing unauthorized actions.

- Lateral System Spread. GenAI often interfaces with multiple interconnected components and third-party services, such as APIs, databases, authentication services, and cloud platforms. Each integration point becomes a potential entry point for adversarial exploitation. The challenge is magnified by the nondeterministic nature of the large language models (LLMs) that power AI agents. Unpredictable outputs can ripple through connected systems, contaminating the controlled logic of traditional security components and amplifying the impact of any exploitation.

- Adaptive Exploitation. Attackers can manipulate a system over time by strategically injecting malign inputs that alter the agent's contextual understanding, ultimately influencing its behavior. Such “context poisoning” tactics are particularly insidious because they exploit the system’s learning processes, degrading its reliability gradually rather than through a single exploit.

“Trust Me”

Given these vulnerabilities, a core issue is the level of trust that companies place in these systems to make decisions in a reliable and secure way. Reliable means we can depend on the systems to behave as expected in any situation. Secure implies that they do so without causing harm to the individuals or organizations that deploy them.

For business leaders (as well as for agents themselves), trust is the critical enabler for moving from a proof of concept to a fully deployed agentic capability that delivers operational value. Achieving this trust requires a security-first mindset that addresses vulnerabilities without stifling innovation. Balancing innovation and security ensures that systems remain both functional and resilient, delivering value while protecting users and data. Three safeguards are especially important:

- Intent-Aware Input Validation. Systems should detect and block adversarial prompts by analyzing their intent and structure without restricting legitimate queries. Traditional rules-based analysis will not work; an agent trained for detection is probably the best solution.

- Robust API Security. All integrations require strong authentication mechanisms, such as OAuth 2.0, combined with thorough input sanitization. This reduces the risk of malicious queries propagating through interconnected systems. Applying zero-trust standards (never trust, always verify) and the principle of least privilege (users are granted minimum necessary access) is critical to ensuring that agents are properly authenticated and authorized to access the necessary system components.

- Real-Time Monitoring. Continuous behavior monitoring with anomaly detection helps identify patterns indicative of adversarial manipulation. Regular audits ensure that GenAI behavior remains aligned with intended objectives, with any drift or degradation promptly addressed.
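The least-privilege and zero-trust ideas in the second safeguard can be illustrated with a minimal sketch. It assumes a hypothetical in-memory policy table; the agent IDs, tool names, and scope strings below are invented for illustration, and in a real deployment the granted scopes would more likely come from a verified OAuth 2.0 access token than from a dictionary.

```python
from dataclasses import dataclass

# Hypothetical policy table: each agent is granted only the scopes it needs.
AGENT_SCOPES = {
    "loan-chatbot": {"loan:read_status"},
    "procurement-agent": {"contract:read", "contract:renew"},
}

# Hypothetical mapping from a tool name to the single scope it requires.
TOOL_REQUIRED_SCOPE = {
    "get_loan_status": "loan:read_status",
    "set_loan_rate": "loan:write_rate",
    "renew_contract": "contract:renew",
}


@dataclass(frozen=True)
class Decision:
    allowed: bool
    reason: str


def authorize(agent_id: str, tool: str) -> Decision:
    """Deny by default; allow only when the agent holds the tool's scope."""
    required = TOOL_REQUIRED_SCOPE.get(tool)
    if required is None:
        # Unknown tools are never callable (never trust, always verify).
        return Decision(False, f"unknown tool: {tool}")
    granted = AGENT_SCOPES.get(agent_id, set())
    if required not in granted:
        return Decision(False, f"{agent_id} lacks scope {required}")
    return Decision(True, "ok")
```

Under these assumptions, `authorize("loan-chatbot", "set_loan_rate")` is denied because the chatbot was only ever granted read access, which is the kind of check that would stand between a manipulated agent and a 0% loan.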

Technological design is critical, of course. But equally important is the organization’s role in security. By embracing this responsibility, the organization can build systems that inspire trust and foster engagement. End users also play a pivotal role in maintaining GenAI security. Organizations should transparently communicate system limitations, data-handling practices, and security safeguards. Simple disclosures—such as information about encryption of sensitive data or the limitations of AI-generated recommendations—empower users, fostering responsible interaction and building trust.

A FAST Solution

BCG developed FAST to provide a clear roadmap for achieving trust in these tools by outlining the actions that will ensure security and reliability. FAST enables organizations to:

- Map out the capabilities they need.

- Define the maturity levels that each capability must reach to mitigate risks.

- Estimate the effort and resources required to operationalize AI agents with confidence.

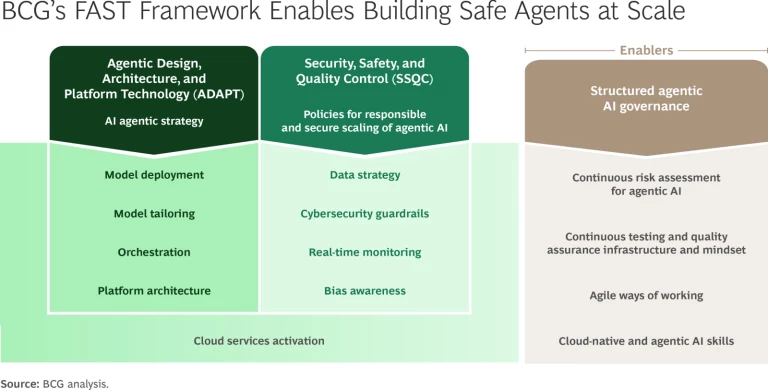

The FAST framework rests on two pillars. The first—agentic design, architecture, and platform technology (ADAPT)—involves technical and functional implementation and security. The second—security, safety, and quality control (SSQC)—ensures secure, compliant, and ethical operation. FAST also offers a set of best practices, or enablers, relevant to each pillar. (See the exhibit.)

Agentic Design, Architecture, and Platform Technology

ADAPT comprises six key capabilities that make up the technical backbone for implementing AI agents. They cover the entire agentic life cycle, from the strategic vision to the choice and adaptation of models to orchestrated and industrialized execution. All are closely linked to a corresponding set of SSQC capabilities, enabling the two pillars to work in concert.

Set the strategy. Companies need to supervise, manage, and industrialize the development of agents, which requires aligning business needs, technical architecture, security and safety requirements, and product life cycle. The first step is to establish a strategic vision and prioritized use cases, then define the required functional and technical architectures in the context of any security and regulatory constraints. This is a cross-functional effort that should include business stakeholders; the AI architect, cloud agent, and engineers; data owners and stewards; a project management office or product owner; IT operations; and agent security champions.

Deploy models. The purpose of this step is to choose, test, host, and maintain the models used by the AI solution. To identify and integrate suitable agent models, companies need a broad set of capabilities that enable them to:

- Analyze use cases and define the selection criteria.

- Evaluate and compare models using benchmarks, tests, and SSQC reviews.

- Choose hosting and access methods.

- Implement technical integration.

- Monitor performance (including “drift detection”) and sound alarms.

- Conduct incident reviews and ongoing compliance.
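As one possible reading of the "drift detection" step above, the sketch below compares recent values of a quality metric against a baseline and raises an alarm when the recent mean moves too far away. The metric, the z-score test, and the threshold of 3.0 are all assumptions chosen for illustration; the article does not prescribe a particular detection method.

```python
from statistics import mean, stdev


def drift_alarm(
    baseline: list[float], recent: list[float], z_threshold: float = 3.0
) -> bool:
    """Return True when the recent mean deviates from the baseline mean
    by more than z_threshold standard errors."""
    if len(baseline) < 2 or not recent:
        raise ValueError("need at least 2 baseline points and 1 recent point")
    base_mean = mean(baseline)
    base_sd = stdev(baseline)
    if base_sd == 0:
        # A perfectly flat baseline: any change at all counts as drift.
        return mean(recent) != base_mean
    # Standard error of the mean for a window the size of `recent`.
    standard_error = base_sd / len(recent) ** 0.5
    z = abs(mean(recent) - base_mean) / standard_error
    return z > z_threshold
```

A monitoring job could call `drift_alarm` on, say, a daily answer-accuracy score and route a `True` result to the incident review process.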

Tailor models. Most companies will want to customize models for specific use cases and improve performance with respect to desired functionalities. This requires multiple specific skills, including understanding best practices for efficiency, traceability, cost, and compliance; managing the behavioral, functional, and ethical validation tests of personalized models; ensuring complete documentation of the customization process; and guaranteeing the maintainability of customized models over time.

Orchestrate agents. This capability brings together an agent’s mechanisms to coordinate the interactions among components (such as models, tools, APIs, agents, and humans) and execute complex tasks in a fluid, secure, and traceable way. It allows companies to structure logical sequences, conditional decision making, and control points in multiagent systems. The key activities include design and modeling of the components, workflow, and control points (logs, supervision, human validation); technical integration; execution and supervision; and governance.
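To make the idea of control points concrete, here is a minimal sketch of a sequential workflow with an audit log and a human-validation gate. The `Step` and `Workflow` classes and the `approve` hook are hypothetical names introduced for this example; a production orchestrator would add error handling, timeouts, and persistent logging.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Step:
    name: str
    run: Callable[[dict], dict]  # takes the workflow state, returns a new one
    needs_human_approval: bool = False


@dataclass
class Workflow:
    steps: list[Step]
    approve: Callable[[str, dict], bool]  # hypothetical human-validation hook
    audit_log: list[str] = field(default_factory=list)

    def execute(self, state: dict) -> dict:
        for step in self.steps:
            # Control point: gated steps run only after a reviewer signs off.
            if step.needs_human_approval and not self.approve(step.name, state):
                self.audit_log.append(f"{step.name}: rejected by reviewer")
                break
            state = step.run(state)
            self.audit_log.append(f"{step.name}: done")
        return state
```

In the procurement scenario from the opening, marking the renewal step with `needs_human_approval=True` would stop an auto-renewal whenever the reviewer, or the feedback data the reviewer sees, flags the vendor as underperforming.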

Build the platform architecture. Companies need to build a unified cloud-native architecture to streamline agent deployment. The architecture should incorporate stringent security, governance, and observability measures that encompass all the stack layers necessary for the operation of agents. The goal is to provide a unified technical platform so that companies can industrialize use cases, streamline development, ensure compliance with security and regulatory requirements, and continuously measure agent performance and impact.

Activate cloud services. This capability involves activating, governing, and supervising the cloud services necessary for the proper functioning of agents, ensuring that they are secure, compliant, and aligned with the other capabilities of the FAST framework. It covers AI services (such as LLMs, APIs, and vector databases); infrastructure; and monitoring, testing, mitigation, and logging services.

Security, Safety, and Quality Control

The SSQC pillar is rooted in preemptive risk identification and management for each of the ADAPT capabilities described above. It encompasses six capabilities.

Policies and Planning. This capability aims to develop structured, auditable security strategies, classifying risks and defining clear requirements aligned with regulatory standards. The goal is to manage security, safety, and functional and behavioral quality, as well as reputational risks, throughout the agent's life cycle. It is based on industry-specific critical software standards, structured requirements management, and mechanisms for qualification, verification, revalidation, and audit.

Data Strategy. Agents need access to often sensitive or critical internal and external data. They therefore also need a secure, traceable, and governed data strategy that covers data lineage, criticality classification, access, and consent policies. Preemptive risk identification based on threat models such as ATLAS, OWASP LLM, SAIF, and others is key to guaranteeing the integrity, security, lawfulness, and usability of data for agents. This capability also defines the control and audit measures for the models, storage, and data flows implemented during agent projects.

Cybersecurity Guardrails. GenAI systems need their own set of cybersecurity safeguards, covering models, data, prompts, APIs, interconnections, and behaviors, to protect their expanded attack surface. Fit-for-purpose safeguards can prevent known attacks while ensuring early detection of malicious or noncompliant uses. Based on zero-trust standards, this capability provides in-depth control adapted to the specific behaviors of agents. An integrated threat modeling approach enriches traditional failure mode and effects analysis and fault tree analysis by taking into account both compromise scenarios and deliberate exploitation of vulnerabilities in a structured manner.

Bias Awareness. Bias is a problem for GenAI systems, including agents. A good antibias capability identifies discriminatory or stereotyping incidents or behaviors in agents, their input data, their models, and their interactions. It detects bias in patterns, prompts, and responses by implementing metrics and tests and defining the thresholds for acceptability. It also applies debiasing or mitigation methods. Bias awareness ensures the fairness, transparency, and nondiscrimination of agent systems and compliance with ethical and regulatory rules and societal expectations. It also promotes accuracy and reliability, which are essential for building trust that agents will act with consistency.
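One way to picture "metrics and tests" with "thresholds for acceptability" is the sketch below, which computes a demographic parity gap, that is, the largest difference between groups in how often the agent returns a favorable outcome. This metric is swapped in purely as an illustration; the article does not name a specific metric, and the 0.1 threshold is an arbitrary assumption.

```python
from collections import defaultdict


def demographic_parity_gap(records: list[tuple[str, bool]]) -> float:
    """records holds (group label, favorable outcome?) pairs.
    Returns the largest difference in favorable-outcome rates between groups."""
    if not records:
        raise ValueError("no records to evaluate")
    totals: dict[str, int] = defaultdict(int)
    favorable: dict[str, int] = defaultdict(int)
    for group, outcome in records:
        totals[group] += 1
        favorable[group] += int(outcome)
    rates = [favorable[group] / totals[group] for group in totals]
    return max(rates) - min(rates)


def within_threshold(records: list[tuple[str, bool]], max_gap: float = 0.1) -> bool:
    """True when the parity gap stays at or below the acceptability threshold."""
    return demographic_parity_gap(records) <= max_gap
```

A check like this could run on logged agent decisions at a regular cadence, with a failing result triggering the debiasing or mitigation step.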

Real-Time Monitoring. Companies need to set up continuous, real-time monitoring of agents and their inputs, behaviors, and outputs, as well as interactions between users and the system. It’s essential to be able to detect anomalies, “drifts,” and behavioral or technical risks in operation and to manage the compliance of systems on a dynamic, real-time basis. BCG’s SSQC capabilities are designed to integrate quality management, cybersecurity, and AI governance directly into the organization's security ecosystem, interconnecting with existing security operations, detection and response capabilities, and incident management processes.

Cloud Services Activation. A center of excellence for cloud services serves several important functions:

- Activating the cloud services necessary for technical capabilities (such as LLM access and registries, retrieval-augmented generation, orchestration, and monitoring)

- Defining a strategy for the use of managed versus self-hosted, sovereign, or external services

- Integrating services in a governed manner into the existing IT environment

- Ensuring compatibility with SSQC infrastructure requirements

- Controlling costs, performance, availability, and reversibility of activated services

Implementing FAST

Companies today operate at various levels of maturity with respect to AI, GenAI, and agents. Moving forward with agents should start with gaining a clear understanding of where the company stands on the maturity curve and what the road ahead looks like. The FAST framework identifies four maturity levels across ADAPT and SSQC:

- Exploratory: basic prototyping without structured governance

- Experimental: limited deployments with preliminary integration and basic security measures

- Operational: fully integrated deployments with robust supervision and active risk management

- Strategic: enterprise-grade deployments characterized by active governance, automated monitoring, and comprehensive risk management, which are necessary in a system of autonomous agents that interact with one another to form an agentic organization
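The four levels above lend themselves to a simple gap assessment. The sketch below encodes them as ordered integers and lists, for each capability, how many levels separate the current state from the target. The numeric encoding and the "levels to climb" measure are assumptions made for illustration, not part of FAST's published scoring.

```python
from enum import IntEnum


class Maturity(IntEnum):
    EXPLORATORY = 1
    EXPERIMENTAL = 2
    OPERATIONAL = 3
    STRATEGIC = 4


def maturity_gaps(
    current: dict[str, Maturity], target: dict[str, Maturity]
) -> dict[str, int]:
    """Return capability -> levels still to climb, largest gap first.
    Capabilities missing from `current` are treated as Exploratory."""
    gaps = {
        cap: int(target[cap]) - int(current.get(cap, Maturity.EXPLORATORY))
        for cap in target
    }
    # Keep only capabilities that are below target.
    positive = {cap: gap for cap, gap in gaps.items() if gap > 0}
    return dict(sorted(positive.items(), key=lambda item: item[1], reverse=True))
```

For example, a company that is Experimental on agent orchestration and Exploratory on real-time monitoring, with Operational as the target for both, would see monitoring at the top of the list with a gap of two levels.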

FAST provides a roadmap for moving up the maturity curve for each pillar, outlining the actions required to reach the desired levels of maturity and trust. Implementation planning and execution should involve cross-functional teams of stakeholders from the business, IT, security, and compliance. The team should deploy and validate in an iterative fashion (strong agile capabilities are a big help), gradually scaling up implementations and validating effectiveness at each stage through rigorous testing and feedback loops.

While FAST is designed to support individual projects, it can also be scaled to structure entire AI transformation initiatives. For instance, a “GenAI factory” within a large enterprise can use FAST to govern all agent-based development, ensuring consistency, risk control, and strategic alignment. In this broader application, the required capabilities are adjusted to the size and scope of the organization and deployed according to the criticality of each project. This structured, maturity-based approach allows enterprises to industrialize generative AI deployment while keeping trust at the core.

Agentic AI is rewriting the rules of enterprise operations. It can observe, decide, and act, turning organizations into augmented systems that bring together the best of human and machine. Such power commands respect and discipline. Only a demanding framework that integrates strategy, security, and trust can unlock its full value while containing the risks. FAST turns fear into focus and experimentation into execution, ensuring that agentic AI becomes not a liability but the next great competitive advantage. The future belongs to those who build it—safely.

The authors are grateful to their BCG colleague Djon Kleine for his insights and assistance.