For companies interested in rolling out Generative AI (GenAI) tools, experimenting with the technology is relatively easy thanks to the power of large foundation models such as GPT-3 and GPT-4 from OpenAI, LLaMA from Meta, and PaLM 2 from Google. But early success with a GenAI proof of concept can create a false sense that it will be easy to scale into an enterprise-ready product. In fact, executives are quickly learning that building GenAI products for scale introduces uncertainties and challenges far greater than those posed by many other technologies.

One critical area that must be examined to realize the full potential of GenAI is the user experience (UX). In this article, we share a UX design framework and a testing methodology for optimizing that experience.

Designing the User Experience

When a technology as powerful as GenAI emerges, leaders have to consider the many ways people will need to interact with it. This is especially true when the tools take the form of a GenAI “agent” with creative and proactive capabilities that can operate in the background on people’s behalf, doing everything from seeking out relevant facts to add to a discussion to conducting subject-matter expert (SME) interviews for the team. With this in mind, we have developed a UX design framework to help organizations integrate GenAI tools into their new and existing workflows. The framework can guide product designers as they think through the most likely ways people will interact with these systems.

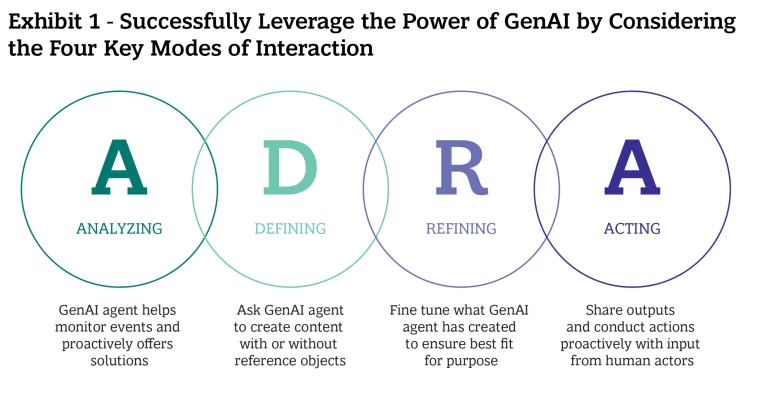

Our UX framework focuses on four distinct modes of interaction that people are likely to have with these technologies. We classify them as Analyzing, Defining, Refining, and Acting. (See Exhibit 1.)

Analyzing Content

Much of AI’s promise lies in its ability to eliminate mundane or repetitive tasks from workflows. “Always-on” GenAI agents can be configured to run in parallel with human activities, delivering useful augmentation in real time. For example, an assistant can listen in on team meetings and detect information gaps or anticipate and suggest next-best actions. Always-on agents are already present in tools such as Microsoft Teams and Zoom for transcription and summarization, and they are quickly spreading into task-specific scenarios such as customer service contact centers.

In this mode of interaction, the UX considerations center on controlling what the AI pays attention to (what it records and analyzes, for example) and making clear to users what it is doing. Decisions about where, when, and how GenAI is used in an always-on mode should be made in direct consultation with the intended users and through a process of iterative training and trust-building.

Defining Intent

As opposed to the traditional button clicks of most user interfaces, engaging with GenAI tools often involves unstructured natural language text, verbal inputs, or even multi-modal inputs (a mix of voice and image, for example). These are new ways of interacting with machines—and while describing what someone wants in words may seem like a simple act, most users are not experienced in prompt engineering. Unbeknownst to them, the model can produce variable or seemingly inconsistent responses with slight word changes or model updates. Moreover, text input fields are not the fastest or most precise methods of interaction.

Since some users will struggle to understand the full capabilities of the GenAI tool or what it’s ideally built to accomplish, the learning curve for both the user and the tool should be fully considered. What is the onboarding experience? How can users progressively advance their confidence, speed-to-output, and overall quality? And how can the GenAI tools themselves learn about their users’ needs, interests, and behaviors to deliver better experiences? For example, augmenting chat interfaces with familiar interface controls, such as buttons or filters, could help reduce mistakes and enable users to guide the GenAI toward outcomes with a much higher chance of first-time success.
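
To make the idea of pairing chat with familiar controls more concrete, here is a minimal Python sketch, assuming a UI that captures a task button, a tone dropdown, a length limit, and a source filter. The `PromptControls` fields, `ALLOWED_TONES`, and `build_prompt` are hypothetical names for illustration, not part of any specific product. The point is that values chosen from fixed options are validated before they reach the model, so only the free-text part of the request is left open to interpretation.

```python
from dataclasses import dataclass, field

# Hypothetical options a UI might expose as buttons or a dropdown filter.
ALLOWED_TONES = {"formal", "friendly", "concise"}


@dataclass
class PromptControls:
    """Structured selections captured from buttons and filters (illustrative fields)."""
    task: str                # e.g. chosen via a "Summarize" or "Draft email" button
    tone: str = "formal"     # picked from a fixed list rather than typed
    max_words: int = 150
    sources: list[str] = field(default_factory=list)  # documents ticked in a filter


def build_prompt(controls: PromptControls, free_text: str) -> str:
    """Merge validated control values with the user's free-text request."""
    if controls.tone not in ALLOWED_TONES:
        raise ValueError(f"unsupported tone: {controls.tone!r}")
    if controls.max_words <= 0:
        raise ValueError("max_words must be positive")
    lines = [
        f"Task: {controls.task}",
        f"Tone: {controls.tone}",
        f"Length: at most {controls.max_words} words",
    ]
    if controls.sources:
        lines.append("Use only these sources: " + ", ".join(controls.sources))
    lines.append(f"User request: {free_text.strip()}")
    return "\n".join(lines)


print(build_prompt(
    PromptControls(task="Summarize", tone="concise", max_words=100, sources=["Q3 report"]),
    "What are the key risks for next quarter?",
))
```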

Refining Outputs

Even when user intent is clearly understood, a GenAI tool rarely delivers a perfect result on its first attempt. Iterating toward the correct answer or output is a fundamental part of the user–AI interaction, and that interaction becomes increasingly complex as the AI integrates with existing enterprise software and involves multiple users at the same time.

Another source of complexity is that GenAI outputs have different constituent parts. Consider a travel itinerary, an analytics dashboard, or a marketing video generated by GenAI. Each combines different types of media in unique ways. While all of these outputs could be displayed within the body of a text conversation, refining them through conversation alone is awkward. Ideally, a user can select part of an output and refine it in isolation. For familiar, simple, and repeatable tasks, such as adjusting an image, existing UX elements are often the most efficient tool. For complex and bespoke tasks, such as remixing an image in the style of the user’s favorite artist, natural language opens new doors.

Acting and Following Up

As GenAI agent models move artificial intelligence from just delivering information to also taking actions, new possibilities and complexities emerge. GenAI agents conversing with other GenAI agents and APIs need to visually display their intentions to human users, but these interactions must be designed carefully.

For example, imagine that during a live meeting an AI agent identifies the need for certain data, then proactively does research and delivers that data to the team before the meeting ends. That might sound like a great way to help the team be more efficient in completing its task. But when are people actually comfortable allowing the AI to take these steps? How do they prevent it from constantly interrupting with suggestions while maximizing what it can do for the group? How do these questions change based on whether a team—or an individual team member—is interacting with the AI? Will confidence grow over time as the team trains the AI, requiring less intervention and allowing for more proactive background activity?

Early on, we expect that teams will want GenAI agents to deliver preliminary input that humans modify and approve before any action is taken. But as these agents build a successful track record, proactive background activities without human intervention will likely be permitted more and more often.

Implementing a Testing Methodology

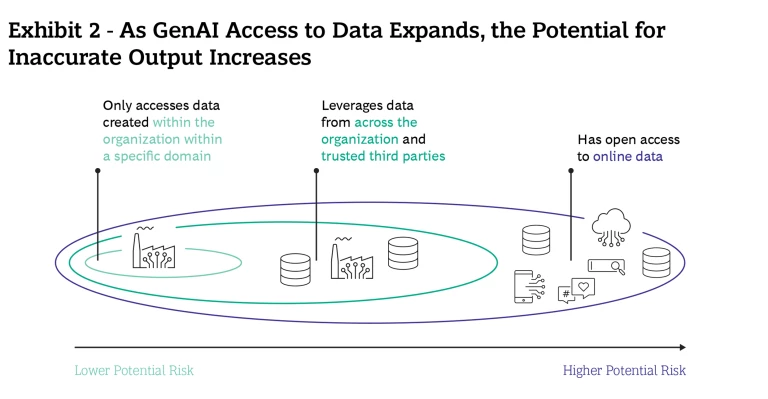

Although well-designed workflows are certainly possible, the unpredictable nature of GenAI is causing some organizations to hesitate to roll out GenAI tools across the enterprise. As noted, GenAI tools can give answers that vary by user and even by interaction. This variability grows as the tools gain access to larger datasets outside the organization’s control, raising the risk of misinformation and inaccurate responses. (See Exhibit 2.)

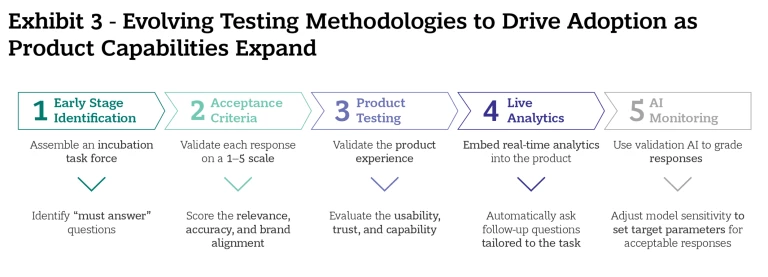

To ensure that a particular application of GenAI is effective and remains so, it’s important to continuously monitor and test its outputs using a robust methodology. BCG’s newly developed monitoring and testing methodology consists of five key activities designed specifically for GenAI tools. The methodology is still evolving as the technology rapidly advances, and companies may wish to modify some of these steps to meet their needs. That said, elements like these are highly recommended to reduce risk exposure and improve the desirability of the tool. (See Exhibit 3.)

1. Early Stage Identification

We advise that GenAI product testing begin with an incubation task force in a controlled internal environment before extending to a broader audience. During the incubation phase, the goal is to validate the GenAI tool’s responses and, by extension, the data used to train the underlying model. This process includes identifying the crucial questions or tasks that the tool must answer or perform consistently, such as listing the sizes and colors available for a particular product. By clearly defining such “must answer” questions, a company can set the baseline level of accuracy the GenAI tool must reach before it is put in the hands of users.
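
One way an incubation team might check “must answer” questions for consistency is to ask each question several times and record how often every answer contains the required facts. The Python sketch below assumes the tool can be wrapped in a simple `ask(question) -> str` function; `MustAnswer`, `consistency_report`, and `fake_tool` are hypothetical, and plain substring matching is a deliberately crude stand-in for a proper correctness check.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class MustAnswer:
    """A "must answer" question and the facts every response is expected to contain."""
    question: str
    required_facts: list[str]


def consistency_report(ask: Callable[[str], str], cases: list[MustAnswer],
                       runs: int = 5) -> dict[str, float]:
    """Ask each question `runs` times; return the share of answers containing all required facts."""
    report: dict[str, float] = {}
    for case in cases:
        passed = 0
        for _ in range(runs):
            answer = ask(case.question).lower()
            if all(fact.lower() in answer for fact in case.required_facts):
                passed += 1
        report[case.question] = passed / runs
    return report


# Hypothetical stand-in for the real GenAI tool; a real harness would call the deployed model.
def fake_tool(question: str) -> str:
    return "The trail jacket comes in sizes S, M, and L, in navy and olive."


cases = [MustAnswer("What sizes and colors does the trail jacket come in?",
                    ["S, M, and L", "navy", "olive"])]
print(consistency_report(fake_tool, cases))
```

A pass rate below 1.0 on a “must answer” question would signal exactly the kind of inconsistency the baseline is meant to catch.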

2. Acceptance Criteria

Once an internal task force is selected and a set of “must answer” questions is created, it is time to begin validating the product. The product’s answers are scored on a 1–5 scale against three criteria:

- Relevance – Are responses within the product or brand’s area of expertise?

- Accuracy – Are responses correct and free from misinformation?

- Brand alignment – Do responses align with the brand’s style, tone, and values?

Scoring against these criteria provides robust data on the improvements needed to better satisfy each “must answer” question and reduces the chance of hallucinations (nonsensical or outright false answers) and other inaccuracies. Once the tool achieves a satisfactory score (as determined by each company) for each task or question, it can move to a broader pilot.
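
The scoring step can be sketched as follows, assuming each reviewer rates each answer from 1 to 5 on the three criteria and that a question passes only when its average on every criterion meets a company-chosen threshold. The function names, the 4.0 default, the example questions, and the ratings are hypothetical placeholders for illustration, not recommended values.

```python
from statistics import mean

CRITERIA = ("relevance", "accuracy", "brand_alignment")


def criterion_means(ratings: list[dict[str, int]]) -> dict[str, float]:
    """Average each criterion across reviewers' 1-5 ratings for a single question."""
    for rating in ratings:
        for c in CRITERIA:
            if not 1 <= rating[c] <= 5:
                raise ValueError(f"{c} score {rating[c]} is outside the 1-5 scale")
    return {c: mean(rating[c] for rating in ratings) for c in CRITERIA}


def ready_for_pilot(scores: dict[str, list[dict[str, int]]],
                    threshold: float = 4.0) -> tuple[bool, list[str]]:
    """Ready only if every must-answer question meets the threshold on every criterion."""
    failing = [
        question for question, ratings in scores.items()
        if any(avg < threshold for avg in criterion_means(ratings).values())
    ]
    return (not failing, failing)


# Illustrative ratings from two reviewers per question.
scores = {
    "Sizes and colors for the trail jacket": [
        {"relevance": 5, "accuracy": 5, "brand_alignment": 4},
        {"relevance": 5, "accuracy": 4, "brand_alignment": 4},
    ],
    "Return policy for sale items": [
        {"relevance": 4, "accuracy": 3, "brand_alignment": 5},
        {"relevance": 4, "accuracy": 3, "brand_alignment": 4},
    ],
}
print(ready_for_pilot(scores))  # (False, ['Return policy for sale items'])
```

Requiring every criterion to pass, rather than averaging the three together, keeps a strong brand-alignment score from masking an accuracy problem.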

By the pilot phase, the set of “must answer” questions and tasks will likely have expanded to incorporate new capabilities based on user feedback from the incubation phase. Each task and question in the pilot is also scored on a 1–5 scale for relevance, accuracy, and brand alignment.

3. Product Testing

Beyond the acceptance criteria, it is critical to validate the product’s usability. With GenAI, trust and capability are also worth measuring alongside traditional usability metrics.

- Usability – Was the product easy to use without expert guidance?

- Trust – Did you trust the information given to you?

- Capability – Did you understand what you could accomplish with the tool?

We recommend introducing the product testing criteria in the late incubation or early pilot stage, when users are more likely to receive high-quality responses. Once the product scores high enough on both the acceptance criteria and the product testing metrics, the company can move it from the pilot to “at-scale” testing.
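
The progression from incubation to pilot to at-scale testing can be expressed as a simple stage gate. This Python sketch assumes product testing surveys also use a 1–5 scale (the text does not specify one) and takes the acceptance-criteria result as a single boolean; `Stage`, `next_stage`, `product_testing_passes`, and the 4.0 threshold are hypothetical.

```python
from enum import Enum
from statistics import mean


class Stage(Enum):
    INCUBATION = "incubation"
    PILOT = "pilot"
    AT_SCALE = "at-scale"


PRODUCT_METRICS = ("usability", "trust", "capability")


def product_testing_passes(surveys: list[dict[str, int]], threshold: float) -> bool:
    """Each metric's average across user surveys must meet the threshold."""
    if not surveys:
        return False  # no survey data yet, so the gate stays closed
    return all(mean(s[m] for s in surveys) >= threshold for m in PRODUCT_METRICS)


def next_stage(stage: Stage, acceptance_ok: bool,
               surveys: list[dict[str, int]], threshold: float = 4.0) -> Stage:
    """Advance one stage only when that stage's gate is met; otherwise stay put."""
    if stage is Stage.INCUBATION and acceptance_ok:
        return Stage.PILOT
    if stage is Stage.PILOT and acceptance_ok and product_testing_passes(surveys, threshold):
        return Stage.AT_SCALE
    return stage


surveys = [
    {"usability": 5, "trust": 4, "capability": 4},
    {"usability": 4, "trust": 3, "capability": 5},
]
print(next_stage(Stage.PILOT, acceptance_ok=True, surveys=surveys))  # Stage.PILOT (average trust is 3.5)
```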

4. Live Analytics

To accurately assess the impact of a GenAI product as it scales from pilot to enterprise-grade solution, the company needs to embed real-time analytics into the product. A simple example is the thumbs-up/thumbs-down rating after each response in ChatGPT. A more robust approach would have the GenAI product follow up with users by asking questions tailored to their sessions, for example, “Did the instructions help you fix your server issue?” These analytics gather rich qualitative and quantitative data on hyper-specific use cases at a scale usually unattainable with human research teams alone. This helps guard against a decline in the quality of responses and usability and keeps the product aligned with the acceptance criteria established in step 2.
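
A minimal sketch of live analytics, assuming each thumbs-up/thumbs-down click or yes/no answer to a tailored follow-up question is logged as a boolean against a use case. `FeedbackMonitor` and its parameters are hypothetical; the rolling window keeps the approval rate focused on recent sessions, so a decline in quality surfaces instead of being diluted by older feedback.

```python
from collections import defaultdict, deque
from typing import Optional


class FeedbackMonitor:
    """Rolling approval rate per use case from in-product feedback (illustrative design)."""

    def __init__(self, window: int = 200, alert_below: float = 0.8, min_events: int = 20):
        self.alert_below = alert_below  # approval rate below which a use case is flagged
        self.min_events = min_events    # avoid flagging on too little data
        # Keep only the most recent `window` signals per use case.
        self._events = defaultdict(lambda: deque(maxlen=window))

    def record(self, use_case: str, helpful: bool) -> None:
        """Log a thumbs up/down or a yes/no answer to a tailored follow-up question."""
        self._events[use_case].append(helpful)

    def approval_rate(self, use_case: str) -> Optional[float]:
        events = self._events.get(use_case)
        if not events:
            return None
        return sum(events) / len(events)

    def alerts(self) -> list[str]:
        """Use cases with enough recent feedback whose approval rate fell below the threshold."""
        return [
            use_case for use_case, events in self._events.items()
            if len(events) >= self.min_events and sum(events) / len(events) < self.alert_below
        ]


monitor = FeedbackMonitor(window=50, alert_below=0.8, min_events=5)
for helpful in (True, True, True, False, False):
    monitor.record("server-troubleshooting", helpful)
print(monitor.approval_rate("server-troubleshooting"), monitor.alerts())
# 0.6 ['server-troubleshooting']
```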

5. AI Monitoring

The final phase of the testing methodology is continuous monitoring and improvement to de-risk responses at enterprise scale. To this end, companies can deploy other GenAI tools that act as synthetic users, grading the product’s responses against the acceptance and product testing criteria. This monitoring helps organizations assess whether the data used to train the underlying model needs to change, adding an extra layer of quality control alongside user testing. Companies can also adjust the sensitivity of multiple validation models to set boundaries for highly acceptable and highly unacceptable responses. These findings can then be fed back into the product to refine its future responses and sustain a process of continuous improvement.
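
The idea of tuning validation models to mark highly acceptable and highly unacceptable responses can be sketched as a three-way triage. In this Python sketch, each grader is assumed to be a function wrapping a separate validation model that returns a score between 0 and 1; the graders shown are stubs, and `triage`, `accept_at`, and `reject_at` are hypothetical names. Raising `accept_at` or lowering `reject_at` widens the middle band, which is one way to make the system more cautious.

```python
from statistics import mean
from typing import Callable

# A grader stands in for a validation model: (question, response) -> score in [0, 1].
Grader = Callable[[str, str], float]


def triage(question: str, response: str, graders: list[Grader],
           accept_at: float = 0.85, reject_at: float = 0.4) -> str:
    """Label a response by the average of several validation models' scores."""
    if not 0.0 <= reject_at < accept_at <= 1.0:
        raise ValueError("expected 0 <= reject_at < accept_at <= 1")
    score = mean(grader(question, response) for grader in graders)
    if score >= accept_at:
        return "accept"        # highly acceptable band
    if score <= reject_at:
        return "reject"        # highly unacceptable band
    return "human_review"      # uncertain middle band, routed to people


# Stub graders; real ones would prompt separate models with the acceptance criteria.
graders = [lambda q, r: 0.7, lambda q, r: 0.6]
print(triage("Return policy for sale items?", "Sale items are final sale.", graders))
# human_review
```

Averaging is only one aggregation choice; a stricter setup might use the lowest grader score so that any single validation model can flag a response.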

Mastering the User Experience

Moving from a GenAI proof of concept to a full-scale implementation is no easy task. It requires a company to address a range of key challenges, including understanding the implications for people and organizational processes, and to manage the accompanying changes. Our framework for designing the user experience and our methodology for testing GenAI products can help executives navigate these complexities with precision and create a truly differentiated experience for customers and employees alike.