Here’s a paradox: over the past decade, the pharmaceutical industry has repeatedly delivered transformative technological and scientific breakthroughs, including metabolic modulators, mRNA vaccines, and CRISPR gene-editing platforms. Yet biopharma companies have also experienced a steady decline in the output of their internal drug discovery engines. Compounding the problem, rising revenue pressures from the coming “patent cliff” and other factors could reduce the capital available for reinvestment in innovation, the source of future growth and value.

Biopharma companies face a pivotal strategic challenge: how to structure the research function for more and better results, including complementing internal research capabilities with external innovation to maximize sustained output and success. Striking the right balance requires tailoring strategies by area of biology and disease as well as by technology or platform. Companies also need an operating model that eliminates biases, enables objective, data-driven decisions on internal versus external investments, and adapts as new science, technologies, or market dynamics emerge.

There is no single right answer. Future winners will design a fit-for-purpose model that meets their particular needs, combining the best attributes of big and small pharma, biotech, venture capital, and tech company approaches.

Not Invented Here

Over the past 20 years, many major pharma companies have increased their reliance on external sources of innovation rather than grow internal resources. In 2003, 17 of the 20 best-selling drugs originated at large biopharma companies. By 2023, that number had dropped to seven. Today, nearly 65% of the total industry pipeline comes from biotech companies.

Large pharma’s current approach leverages Joy’s Law (attributed to Sun Microsystems co-founder Bill Joy): no matter who you are, most of the smartest people and best ideas are found outside your organization. But here’s the rub. The shift to more external innovation sourcing has not correlated with higher R&D productivity, which has been on a steady downward trend for decades. The reason for this is rooted in what’s referred to as Eroom’s Law (or Moore’s Law in reverse): the cost of developing new drugs will rise exponentially despite advances in technology.

Pinpointing the actual sources of rising costs is a challenge, since they are the result of a complex interplay of various functions—such as research, clinical development, and regulatory affairs—a process that produces breakthrough successes or spectacular failures. The most significant single cost in biopharma R&D is still the cost of failure, and end-to-end success rates in drug development hover around 10%. Late-stage clinical studies incur the most R&D spending, so R&D productivity takes the biggest hit when drugs fail in late-stage studies. The uncomfortable truth is that many failed drugs should never have advanced to the clinical trial stage in the first place.
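To see why the timing of failure matters so much, consider a back-of-the-envelope sketch. It is illustrative only: the function name, the stage costs, and the advancement probabilities are hypothetical assumptions, not figures from this article.

```python
def cost_per_approval(stages):
    """Expected spend per approved drug for a staged pipeline.

    `stages` is an ordered list of (cost_per_program, p_advance) pairs,
    where p_advance is the chance a program clears that stage.
    All inputs are hypothetical, for illustration only.
    """
    p_reach = 1.0         # probability a program reaches the current stage
    expected_spend = 0.0  # expected spend per program that enters the pipeline
    for cost, p_advance in stages:
        expected_spend += p_reach * cost  # pay only if the stage is reached
        p_reach *= p_advance
    # After the loop, p_reach is the end-to-end success rate.
    return expected_spend / p_reach


# Two hypothetical pipelines with the same end-to-end success rate (25%).
# Stage costs are in arbitrary units; the late stage costs twice the early one.
late_failures = [(10.0, 0.5), (20.0, 0.5)]    # half the attrition happens late
early_failures = [(10.0, 0.25), (20.0, 1.0)]  # all attrition happens early

print(cost_per_approval(late_failures))
print(cost_per_approval(early_failures))
```

Under these assumed inputs, both pipelines approve the same share of programs, but the one that weeds out weak candidates before the expensive stage spends less per approval.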

Rising Costs; Higher Stakes

While costs vary across therapeutic areas (TAs) and modalities, the cost of bringing an asset to clinical trial today ranges from $150 million to $300 million—as much as double the cost a decade ago. At the same time, intensified competition for innovative assets has pushed up the average premiums paid in acquisition and licensing deals from 50% in 2021 to 77% in 2023. Two principal value destroyers—poor decisions and fragmentation of effort—carry a bigger price tag than ever before.

Poor decisions undermine productivity. A hallmark of high-performing research organizations is that they have more good ideas than money to spend developing them. The best functions also become adept at deprioritizing very good programs in favor of even better ones, licensing out the former (having built the team and processes to do so). Our research has shown that indicators of scientific acumen and good judgment correlate with success or failure in drug development. This is especially important in a time when many companies set up multiple “shots on goal,” pursuing various modalities against the same target.

But there are plenty of impediments. Companies have a hard time embracing and celebrating failure. Decision making and governance are not as rigorous as they should be. Behavioral factors come into play. (See “How Human Psychology Stands in the Way of Objective Decision Making.”) The result is that too many programs remain in the research funnel for too long, wasting money and slowing down potential winners.

How Human Psychology Stands in the Way of Objective Decision Making

Back in 2016, we described the organizational dynamics and corporate incentives that often amplify the problem by rewarding progress-seeking behaviors and impairing truth-seeking behaviors. For example, in a race to be first to market, companies can become overly focused on quantity metrics, which encourage leaders to push as many assets as possible through the pipeline. This leads to portfolios bloated with suboptimal assets and resources that are spread too thin, further undercutting the most promising opportunities.

The drug development timeline also plays a role. The ultimate proof of whether a scientific idea was a useful one is an approved drug entering the market, and this takes many years. It is not practicable to tie incentives to outcomes that far in the future. Individuals (and entire R&D functions) tend to look to more immediate milestones as the basis for rewards and promotions and worry less about end-to-end outcomes.

Fragmentation: Jack of All Trades, Master of None. We have found that companies that focus on fewer TAs and modalities have greater odds of achieving success. They build deeper, more differentiated knowledge and capabilities, and that helps them identify and invest in the highest-impact opportunities and establish innovation flywheels. They are also seen as more credible partners by biotech innovators in their chosen areas of expertise.

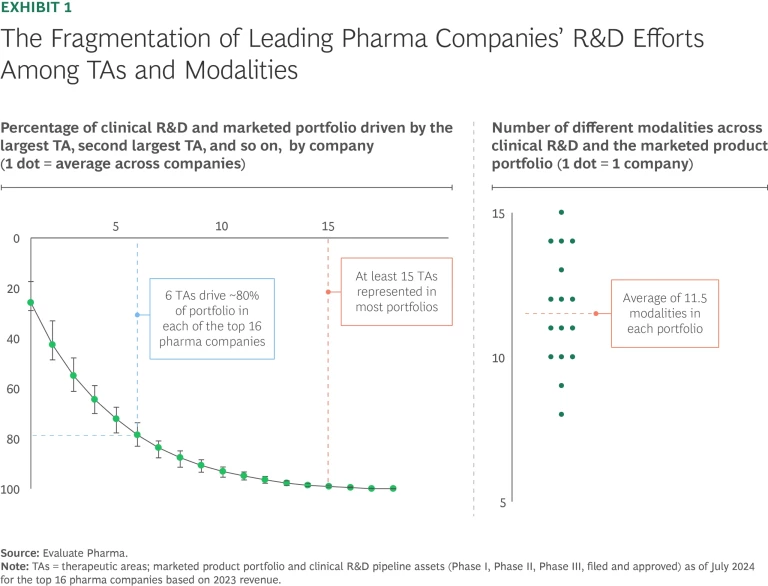

The evidence, however, shows that maintaining tight focus is a challenge in the industry today. Just six TAs accounted for roughly 80% of the assets in the portfolios of the largest biopharma companies in 2024, yet most companies invested in 15 or more TAs overall. With the proliferation of “modality agnostic” R&D, biopharma companies also pursued an average of 11.5 modalities across their portfolios. (See Exhibit 1.) Worse, in our experience, most biopharma pipelines are headed for even less focus in the next few years. Efforts to streamline R&D are not going far enough, and the surge of exciting new technologies means the choice of what to emphasize will become even more difficult in the future.

Designing a New Model

There is no precedent or benchmark for an optimal research model. Each of the models followed by different types of companies—large pharma, smaller pharma, biotech, venture backed, and tech focused—has its strengths and limitations. Some are complementary; large pharma provides an off-ramp for successful venture-backed firms that have promising assets, for example.

Large pharma needs to imagine a next-generation research model that combines the best of existing models to meet each company’s individual needs. Such an approach would encompass the following attributes:

- The strategic focus of successful small to midsize firms that emphasize areas of differentiation (in TAs, biological pathways, and technology)

- The agility and rigorous focus of biotech companies on one or a few “killer” experiments that address key scientific or technical hurdles early on

- The deep functional capabilities, institutional knowledge and experience, and scalability of large pharma

- The data-driven, unbiased approach to decision making and portfolio management skills of venture capital

- The innovation-sourcing muscle of players that have historically relied heavily on external innovation (see “How Forest Labs Achieved Pharma’s Highest R&D Productivity”)

- The technology-first philosophy of tech companies and “AI-first” biotech companies that leverage the power of AI-enabled workflows and strategic partnerships to access enabling technologies and AI

How Forest Labs Achieved Pharma’s Highest R&D Productivity

While its per-unit costs were in line with its peers, Forest outperformed through its low failure rate, which maximized output and minimized expense. Among the tactics employed were the following:

- Clear strategic choices and strong focus (such as on the US market and on development)

- Strong leadership and talent at all levels

- Strong business development capabilities and good judgment combined with a simple governance model allowing for fast decisions

- Rapid in-licensing decisions, often beating other players to attractive in-licensing opportunities through speed and agility

- Differentiated capabilities (for example, in pharmaceutical sciences and in chemistry, manufacturing, and controls) and intentional operating model choices

- Flawless execution, cementing a reputation as a “partner of choice”

It would be challenging to replicate the Forest model in today’s market environment, where external resources have become the industry’s primary source of innovation and the competition for attractive in-licensing opportunities has grown much fiercer. However, the factors underpinning its success still apply and should be considered when setting up next-generation innovation models.

In our view, the following five factors will be hallmarks of this next-generation research model:

- A clear understanding of how to create value

- Fit-for-purpose internal teams

- Knowing when to build and when to outsource

- Strong governance, processes, and incentives that spur data-driven and unbiased decisions

- Augmentation through AI

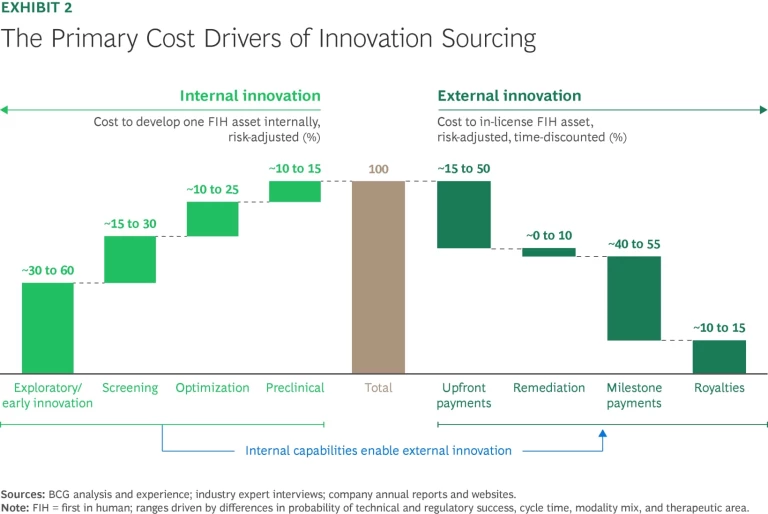

A Clear Understanding of How to Create Value. Internal versus external research: neither strategy is inherently better than the other. In fact, we have found that when the full costs of acquisition are included, the expense of in-licensing a trial-ready asset is roughly comparable to that of developing the asset internally. (These costs include the risk-adjusted, time-discounted acquisition value plus costs of remediation, such as filling gaps in the data package.) (See Exhibit 2.)
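One way to make the "full cost" of an in-licensed asset concrete is sketched below. This is a simplified illustration under stated assumptions, not the method behind Exhibit 2: it assumes the acquisition value consists of an upfront payment plus contingent milestone payments, and the `Milestone` structure, the function name, and every number are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Milestone:
    payment: float      # contingent payment, in $ millions (hypothetical)
    probability: float  # assumed chance the milestone is reached
    year: float         # assumed years from deal signing to payment


def full_in_licensing_cost(upfront, milestones, remediation, discount_rate):
    """Upfront payment, plus risk-adjusted and time-discounted milestone
    payments, plus remediation costs (e.g. filling data-package gaps)."""
    risk_adjusted = sum(
        m.payment * m.probability / (1.0 + discount_rate) ** m.year
        for m in milestones
    )
    return upfront + risk_adjusted + remediation


# Hypothetical deal: $100M upfront, one $50M milestone with a 50% chance
# of being reached in year 2, $10M of remediation, 10% discount rate.
deal_cost = full_in_licensing_cost(
    upfront=100.0,
    milestones=[Milestone(payment=50.0, probability=0.5, year=2)],
    remediation=10.0,
    discount_rate=0.10,
)
print(round(deal_cost, 1))
```

The resulting figure is the one to set against a comparable estimate of what internal development of the same asset would cost.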

That’s not to say that a company can’t gain an advantage through either internal or external innovation efforts (or destroy value when executing poorly). We simply haven’t found evidence that externally weighted models correlate with higher R&D productivity. (See “The Case Against Fully Externalized Research.”)

The Case Against Fully Externalized Research

- The biotech industry is marked by volatility and is heavily influenced by shifts in investor sentiment, interest rates, and labor markets.

- External assets aren’t readily available across areas of biology and technologies.

- High deal premiums can be a barrier.

- Successfully leveraging external innovation requires a certain minimum level of internal expertise, experience, and capability, from supporting searches and evaluations to being able to assess the value of potential assets.

- Prior attempts at fully externalized models have failed.

Repeatable success is rooted in the balanced interplay of internal and external programs. What this collaboration looks like in each TA or area of biology should be determined by a clear understanding of where to play and whether internal or external efforts are likely to create more value. For example, one company may decide not to engage until there is a validated target or lead candidate to work on. Others may engage selectively in internal exploratory research, which should require a clear rationale (such as unique internal IP stemming from adjacent efforts) for not relying on academic laboratories and early-stage biotech companies instead.

Regardless of which route they take, companies need to make a clear choice, then strive for excellence. For example, being the best at external innovation sourcing requires creating an information advantage by leveraging novel sources of data and insights, experimenting with new models to fund innovation and share risk, and moving fast to seize attractive opportunities.

Fit-for-Purpose Internal Teams. Many research organizations have standing cross-functional teams in designated areas of biology, disease, or modality. Companies complement these internal groups with academic or biotech partnerships, venture investment arms, innovation labs, and accelerators and incubators.

This can be an effective setup in areas where a company aspires to build expertise and experience over long time horizons (say, ten years or more) and expects to be able to engage in “industrialized research”—pursuing a continuous flow of targets with low to moderate risk profiles. It is not the only—or always the best—structure, however. For targeted high-risk, high-reward programs, for example, “time boxed” research units that are funded for two to three years and given clear milestones can be a more effective alternative. Companies can also designate “moonshot” units with longer funding horizons (five to ten years) to pursue high-risk, high-reward areas.

For some programs, it’s necessary to go outside the walls of the company, such as leveraging M&A to add required expertise and capabilities or setting up a new entity that isn’t constrained by established career development paths and compensation frameworks. New subsidiaries or spinoffs can facilitate attracting external capital for high-risk programs. Such models must be paired with purpose-built approaches to talent attraction, development, and retention. For example, team members in time-boxed units should have their own attractive development paths that factor in the possibility of their unit being dissolved. It’s important in a multi-path research function to avoid “class distinctions,” such as between standing internal groups and moonshot units.

Knowing When to Build and When to Outsource. Companies need to think carefully about the internal capabilities they build versus the ones they can access externally. The tendency is to tap internal resources first, and there are good reasons why a research organization may choose to maintain its own functional capability. These include high external costs, internal differentiation as a source of competitive advantage, high utilization and scale leading to the same or lower unit cost after adjusting for outsourcing premiums, industry credibility, and lack of good external partners (especially in emerging fields, such as gene therapy).

That said, today’s research organizations can source most functional capabilities from external partners, and these companies’ experience curves and economies of scale are often superior to what any single research organization can achieve on its own. Tapping external resources may make particular sense when the platform or technology is needed only for a specific asset.

Strong Governance, Processes, and Incentives. If poor decisions undermine productivity, good decisions drive value. Having the right governance, processes, and incentives in place ensures that research organizations rely on data and objective analysis, helping them overcome bias and behavioral barriers to rigorously prioritize opportunities and reach the best outcomes.

Better decision making starts with asking the right questions and linking the answers to milestones that test critical aspects of a drug’s potential, such as efficacy and safety. Each milestone should be tied to clear budgetary factors and timelines. Since about 90% of research programs ultimately fail, the goal is to gauge the likelihood of failure as early as possible and pull the plug. This is not an easy task, since it runs counter to the human instinct to strive for success.

Effective governance enables better decisions on two levels. At the research program level, a thorough review of scientific and technical merit determines whether the program has met previously defined milestones and assesses the risks and benefits of continuing. At the portfolio level, a clear definition of a particular program’s role determines how it stacks up against other initiatives on the basis of value, cost, and probability of success. This allows for an assessment of whether capital should be allocated for further pursuit or moved to other opportunities. Portfolio-level governance should also consider the level of complexity and fragmentation that individual programs add and the impact on the portfolio’s overall risk-reward balance.
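The portfolio-level comparison on value, cost, and probability of success can be sketched as a simple risk-adjusted ranking. The example below is a minimal illustration, not a prescribed governance tool: the scoring rule (expected value per unit of cost), the greedy funding rule, and all program names and figures are hypothetical assumptions. It also leaves out the complexity and fragmentation considerations mentioned above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Program:
    name: str
    value: float      # assumed value if the program succeeds, $M
    cost: float       # assumed remaining cost to the next decision, $M
    p_success: float  # assumed probability of success

    @property
    def expected_net_value(self) -> float:
        # The cost is spent whether or not the program succeeds.
        return self.p_success * self.value - self.cost

    @property
    def productivity_index(self) -> float:
        # Risk-adjusted value generated per unit of cost.
        return self.p_success * self.value / self.cost


def rank_programs(programs):
    """Order programs from most to least risk-adjusted value per cost."""
    return sorted(programs, key=lambda p: p.productivity_index, reverse=True)


def allocate(programs, budget):
    """Greedily fund the best-ranked programs that fit the budget and
    have a positive expected net value."""
    funded, remaining = [], budget
    for p in rank_programs(programs):
        if p.expected_net_value > 0 and p.cost <= remaining:
            funded.append(p)
            remaining -= p.cost
    return funded


# Hypothetical portfolio.
portfolio = [
    Program("A", value=1000.0, cost=100.0, p_success=0.2),
    Program("B", value=500.0, cost=50.0, p_success=0.3),
    Program("C", value=300.0, cost=100.0, p_success=0.2),
]
print([p.name for p in allocate(portfolio, budget=150.0)])
```

In this made-up portfolio, program C is not funded under any budget because its risk-adjusted value does not cover its cost, which is the kind of early, explicit "kill" signal that rigorous governance is meant to surface.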

An effective governance and process framework helps clearly delineate the roles, responsibilities, and decision rights of the relevant players. These include the head of research or R&D, the head of the research unit involved, the program or project leads, the functional capability heads, departments outside of research (such as development, commercial, and business development), external advisors, and finance. It should be clear who owns the ultimate decision rights on continuing or stopping a program and whether functional capability heads play a consultative role or are accountable for following through.

Incentive and compensation frameworks should be reviewed through an organizational effectiveness lens to make sure they are driving the desired individual behaviors. For example, is the organization rewarding volume (such as the number of investigational new drug applications) or volume and value (the number of high-quality assets entering trials with validated potential for successful late development and commercialization)? Quick “kills” should be rewarded.

Augmentation Through AI. AI-enabled workflows can reduce the time it takes to reach a preclinical candidate by 30% to 50% and lower costs by up to 50%, transforming critical stages of drug discovery. These efficiencies are realized through targeted improvements across the drug discovery and design pipeline. For example, BCG recently partnered with Merck to leverage AI and generative AI to mine omics data to identify novel drug targets for chronic and degenerative diseases.

Companies are also using AI to predict how changes in structure can affect drug characteristics such as solubility and bioavailability. Further downstream, biopharma researchers are using AI to predict drug behavior (assessing potential efficacy and toxicity) in preclinical models.

Our first analysis of the success of AI-discovered drugs in clinical trials suggests that AI-discovered molecules had an 80% to 90% success rate in Phase 1 studies, substantially higher than historic averages. While the analyzed sample size is too small to draw strong conclusions, and we will need to see how these molecules will fare in later stages of development, this is an encouraging observation.

More broadly, AI is being used across the portfolio to enhance decision making and optimize resource allocation through models that predict an asset’s likelihood of success. An increasing number of biopharma companies are building AI-driven drug discovery capabilities through acquisitions or collaborations.

To boost productivity, biopharma needs to rethink the research function. Companies should objectively assess where they can create value and bring the optimal combination of internal and external expertise to bear using advanced technology and tools. But perhaps most important, they must develop the muscles of discipline and focus and exercise them ruthlessly at each stage of the research value chain.