By:

Tom Berg, Clara Zhang, Arun Ravindran, David Potere, Sam Solovy, An Nguyen, Jason Hickner: BCG X AI Science Institute

Olivia Alexander, Ata Akbari Asanjan, David Bell: USRA Research Institute for Advanced Computer Science

Early in 2024, the Universities Space Research Association (USRA) and BCG X approached the National Aeronautics and Space Administration (NASA) with a proposal that it join the two organizations on a mission with broad environmental and social implications. Natural disasters such as extreme rain, hurricanes, and severe flooding have devastated communities across the world. USRA and BCG X believed that if data scientists and engineers from the three organizations could be assembled to leverage operational data from Geostationary Operational Environmental Satellites (GOES) and Generative Artificial Intelligence (Gen AI), they could develop a foundation model to help scientists better understand and predict extreme weather.

With the team in place, BCG X and USRA’s Research Institute for Advanced Computer Science (RIACS) worked rapidly to implement "AI at Scale" using the National Research Platform (NRP), focusing on establishing new research capabilities and leveraging the latest developments in AI. Less than a year later, the team had developed new geospatial foundation models, along with a new compute orchestration framework that enables the creation of AI models from more than 25 years of satellite data.

Harnessing the power of the NRP

One of the team’s first tasks was to build upon USRA’s unique partnership with the NRP and its cluster of globally distributed computers to make a generalized geospatial foundation model pipeline that runs on Kubernetes clusters. The team used commodity hardware distributed across the nation in the NRP’s datacenters, while ensuring the solution would be portable to services such as AWS or Azure. The result has been so successful that the team looks forward to open-sourcing the solution, showcasing the technology it used to orchestrate the compute cluster, and making its high-performance computing solution available and accessible to more BCG and USRA clients. As a next step, the team is working to build upon this success to perform similar AI at Scale geospatial foundation model training on the NASA Advanced Supercomputing Division’s GH200 superchip cluster.

Cloud-temperature project

With the NRP cluster in place, USRA data scientists then developed the geospatial foundation model algorithms for use with data from GOES, one of the largest, longest-running, and highest-quality geostationary satellite clusters, which provides data on atmospheric conditions. The large dataset encompasses atmospheric data, including top-of-the-cloud data. Top-of-the-cloud data comprises the highest temperature in the atmosphere, which is usually at the top of the clouds or, if there are no clouds, at the earth’s surface. The dataset is highly correlated with a range of specific weather phenomena.

The immediate goal of the cloud-temperature project was to make a flexible model-training system that could support the experiments necessary to prove out a foundation model. Once trained, that foundation model could then be applied to understand weather systems. The next step was to build the foundation model itself.

Building a generalized accessible solution

Typically, when foundation models are built, teams focus on the application of one specific model architecture. In this case, the team surveyed a wide array of imagery models (AFNO, SFNO, MAE, DINO, DiT, U-Net, ViT) and tested them for speed, memory consumption, and hyperparameter flexibility. The orchestration framework is designed to readily support any of these models for a wide range of data sets. To train an initial set of geospatial foundation models, the team used a novel approach developed by USRA that balances attention to local features in satellite data, using MAE, with attention to global features, using DINO.

Graphics Processing Units (GPUs) used over time, showing dynamic allocation

The idea of cluster management (coordinating multiple computer nodes together) encompasses multiple engineering challenges. Many cluster management systems require reserving or provisioning resources beyond the start and stop times of the training jobs. This results in a costly “cold GPU” stage where GPUs are allocated but not utilized. To achieve the goals of the cloud-temperature project, the team designed and built a custom node-dispatching system that could dynamically allocate any number of nodes directly at training start time.

On startup, nodes dynamically determine node rank and configure network access to each other, enabling multi-node parallel processing without pre-reserving GPU resources. Using the NRP resources available, a typical training run might include 2 to 6 nodes with 8 GPUs each. A milestone run used 11 nodes with a total of 88 GPUs. The dispatching system can scale effectively to as many GPUs as a team is able to provision, and is based on Microsoft DeepSpeed, which can distribute training tasks to thousands of GPUs.
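The rank-assignment step described above can be sketched in a few lines. This is a minimal illustration, not the team's dispatcher: it assumes every node can already see the same list of peer addresses (how peers are discovered is not described in this article), and the names `NodeConfig`, `assign_node_config`, and `torch_env` are hypothetical. The environment variable names are the standard ones read by `torch.distributed` when initializing from the environment.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeConfig:
    node_rank: int
    num_nodes: int
    gpus_per_node: int
    master_addr: str
    master_port: int

    @property
    def world_size(self) -> int:
        return self.num_nodes * self.gpus_per_node


def assign_node_config(my_addr, peer_addrs, gpus_per_node=8, master_port=29500):
    """Derive a deterministic node rank from the addresses every node can see.

    Assumption: all nodes observe the same peer set, so sorting it gives
    every node the same ordering without a central coordinator.
    """
    ordered = sorted(set(peer_addrs) | {my_addr})
    return NodeConfig(
        node_rank=ordered.index(my_addr),
        num_nodes=len(ordered),
        gpus_per_node=gpus_per_node,
        master_addr=ordered[0],  # the first node in the ordering hosts the rendezvous
        master_port=master_port,
    )


def torch_env(cfg, local_rank):
    """Environment variables for one GPU worker process on this node."""
    return {
        "MASTER_ADDR": cfg.master_addr,
        "MASTER_PORT": str(cfg.master_port),
        "WORLD_SIZE": str(cfg.world_size),
        "RANK": str(cfg.node_rank * cfg.gpus_per_node + local_rank),
        "LOCAL_RANK": str(local_rank),
    }


# Example with made-up addresses: 11 nodes of 8 GPUs, as in the milestone run.
peers = [f"10.0.0.{i}" for i in range(1, 12)]
cfg = assign_node_config("10.0.0.3", peers)
print(cfg.num_nodes, cfg.world_size)
```

Because the ordering is computed locally from shared information, no node has to be reserved ahead of time to act as a scheduler, which is the property the dispatching system relies on.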

Also key to creating a generalized solution was delivering data to the National Research Platform’s distributed computers. Computers connected to the NRP cluster use a diverse range of CPUs, GPUs, and network cards. There are modern high-performance GPUs designed for AI and deep learning, such as NVIDIA A100s, A10s, and L40s, and older GPUs like the 1080. While all the machines are on a shared network, the bandwidth available to each machine varies. The attached storage on each node also varies, and none of the nodes can natively host 50 TB datasets at high speed. The challenge the team faced was managing the transfer of data from preprocessed storage, through the network, to the compute nodes and into the GPUs. This required data parallelism, custom caching, and careful tuning of network configurations.

A third key aspect of distributed model training is memory management in CPU and GPU space. The team used Microsoft DeepSpeed’s 3D parallelism to explore memory management, testing tensor slicing, pipeline parallelism, and data parallelism, as well as DeepSpeed’s ZeRO-Offload and ZeRO-Infinity for virtualized scaling of memory space. For most experiments, ZeRO Stage 2 (which partitions optimizer state and gradients across GPUs) provided sufficient Fully Sharded Data Parallelism (FSDP). An implementation of DeepSpeed-Ulysses, DeepSpeed’s sequence parallelism optimization, unlocked the ability to experiment with extremely long transformer sequences.
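To make the memory trade-off between ZeRO stages concrete, the following sketch estimates per-GPU model-state memory using the accounting from the ZeRO paper for mixed-precision Adam: 2 bytes per parameter for fp16 weights, 2 for fp16 gradients, and 12 for fp32 optimizer state. The function name and the one-billion-parameter model size are illustrative assumptions, not figures from this project, and activations and temporary buffers are ignored.

```python
def zero_model_state_gb(num_params, num_gpus, stage):
    """Approximate per-GPU model-state memory in GB under a given ZeRO stage.

    Stage 1 partitions optimizer state, stage 2 also partitions gradients,
    and stage 3 also partitions the weights themselves.
    """
    weights, grads, optim = 2.0, 2.0, 12.0  # bytes per parameter
    if stage >= 1:
        optim /= num_gpus
    if stage >= 2:
        grads /= num_gpus
    if stage >= 3:
        weights /= num_gpus
    return num_params * (weights + grads + optim) / 1e9


# Hypothetical 1B-parameter model on 88 GPUs.
for stage in range(4):
    print(stage, round(zero_model_state_gb(1e9, 88, stage), 2))
```

Under these assumptions, Stage 2 already cuts model-state memory from 16 GB to roughly 2.2 GB per GPU, which is consistent with Stage 2 being enough for most experiments while Stage 3 and offloading remain options for larger models.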

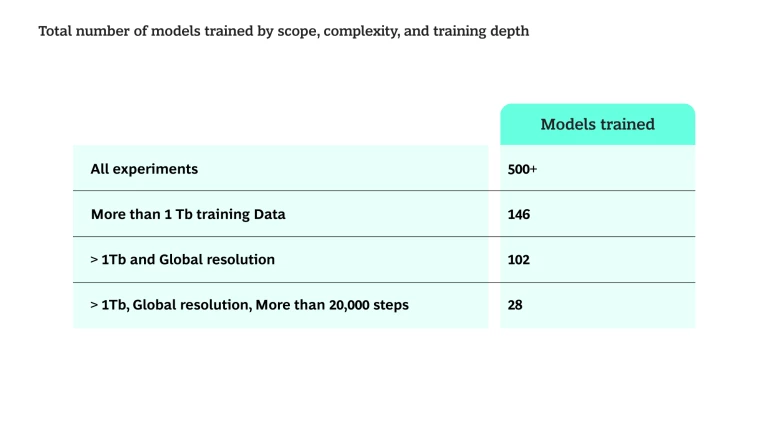

Scoping the dimensions of training

The team’s goal was to achieve horizontal scaling across several dimensions. As the engineering team built out capabilities, the data science team faced fewer constraints and could design more ambitious experiments. The team worked methodically: propose a solution, test the limits of what that solution could provide as a capability, and then build the specific solution out into a generalized one. Each experiment required the team to scope compute requirements, including how much data, GPU power, and memory space would be needed for training. The team also had to understand how it could inspect and move terabytes of data, and how it could run a job on slices of that data without copying and preprocessing the data every time.

Very large, full-resolution data access

The GOES satellite imagery is very large: 3298 by 9896 pixels, with 16 channels available for 7 years and most of the 16 channels available for 20+ years. A 15-year selection of a single channel is 17 TB, providing 263,000 images at 30-minute intervals. Most other foundation model teams curate large datasets down to 2 to 8 TB. They downsample the data significantly in order to create a preprocessed streaming file that can be copied locally to the training nodes.
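The figures above can be checked with a short back-of-the-envelope calculation. The sketch assumes 2 bytes per pixel (float16, the format used for the preprocessed archives), one image every 30 minutes with no gaps, and no compression; the function name is illustrative.

```python
HEIGHT, WIDTH = 3298, 9896   # pixels per full-resolution image
BYTES_PER_PIXEL = 2          # float16
IMAGES_PER_DAY = 48          # one image every 30 minutes


def single_channel_footprint(years):
    """Return (image count, bytes per image, total terabytes) for one channel."""
    images = round(years * 365.25 * IMAGES_PER_DAY)
    bytes_per_image = HEIGHT * WIDTH * BYTES_PER_PIXEL
    total_tb = images * bytes_per_image / 1e12
    return images, bytes_per_image, total_tb


images, bytes_per_image, total_tb = single_channel_footprint(15)
print(images, bytes_per_image, round(total_tb, 1))
```

Under these assumptions, 15 years yields about 263,000 images of roughly 65 MB each, or about 17.2 TB in total, in line with the 17 TB quoted above.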

The team wanted to prove that it could perform a full-resolution training run covering 25 years of data. The team’s vision was to enable weather scientists to configure training runs with any ad-hoc channel, latitude and longitude, and timespan. The result is a highly flexible dataset selector that provides just-in-time preprocessing, composable datasets, and the ability to test on small datasets and quickly move to larger configurations. Training nodes use local storage only for cache, which can be pre-filled from previous runs.

Data pipeline and lazy loading

- The raw satellite data (cd4 files) was preprocessed into float16 zarr archives, organized monthly, and stored on S3.

- Nodes read metadata to locate specific files, then lazy-load the data via an indexed map. Technologies including xarray, zarr, Dask, and PyTorch Distributed orchestrate the flow of data.

- Data is copied exactly once per training run to a custom data-parallel DistributedSampler. After an initial shuffle, the dataset is trimmed for uniform distribution across GPUs, ensuring each GPU sees the same dataset each epoch.

- Forward-pass results are locally cached after first compute to eliminate repeated costs.
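Two of the steps in this list, the indexed lookup into monthly archives and the shuffle-then-trim sharding, can be illustrated with the standard library alone. This is a simplified stand-in rather than the team's sampler: the function names are hypothetical, the per-archive sample counts are made up, and a real pipeline would hand the resulting indices to xarray/zarr readers and a PyTorch sampler.

```python
import bisect
import random
from itertools import accumulate


def build_index(samples_per_archive):
    """Cumulative end offsets, one per monthly archive, for global-index lookup."""
    return list(accumulate(samples_per_archive))


def locate(global_idx, ends):
    """Map a global sample index to (archive number, offset inside that archive)."""
    archive = bisect.bisect_right(ends, global_idx)
    start = ends[archive - 1] if archive else 0
    return archive, global_idx - start


def shard_for_rank(num_samples, world_size, rank, seed=0):
    """Shuffle once, drop the remainder, and return this rank's fixed shard.

    Every rank uses the same seed, so all ranks agree on the ordering and
    receive disjoint shards of identical length.
    """
    order = list(range(num_samples))
    random.Random(seed).shuffle(order)
    usable = num_samples - num_samples % world_size
    return order[rank:usable:world_size]


# Three made-up monthly archives at 48 images per day (31, 28, and 31 days).
ends = build_index([1488, 1344, 1488])
shard = shard_for_rank(ends[-1], world_size=88, rank=0)
print(len(shard), locate(shard[0], ends))
```

Because the shard for a given rank depends only on the seed, each GPU revisits the same samples every epoch, which is what makes a node-local cache effective after the first pass.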

Like clouds themselves, infinite possibilities

Building on these engineering achievements, the data science team has been exploring several key capabilities and use cases of foundation models. Notably, we are developing gap-filling and precipitation detection techniques. Our gap-filling capability can restore missing pixels in raw satellite data, enabling the reconstruction of complete images. In parallel, we're working on precipitation detection methods that blend top-of-the-cloud temperature data to refine rainfall measurements—helping us better identify and track rain and snowfall across the globe.

Beyond precipitation, the team is also investigating additional weather-related tasks such as tropical cyclone detection, as well as non-weather applications like super-resolution, where we generate higher-fidelity images by enhancing resolution beyond the original satellite capture.

Each of these initiatives builds upon the same generalized pipeline, reinforcing the framework’s adaptability and extensibility. With the data stored as zarr archives, new monthly datasets can be seamlessly integrated without major workflow modifications, ensuring continuous improvement and expansion of the system’s capabilities.

Current satellite systems worth trillions of dollars provide weather forecasting information but have gaps and coverage problems. The foundation models our team built can use AI to fill those gaps and provide more complete data sets. The general solution developed in this collaboration could use other data sets and compute environments, including private data or data from entirely different domains. For example, the data sets could relate to ocean temperatures and be used to investigate fish health or aquatic oxygen levels. Imagine the capability to apply this solution to air travel, training the model only on weather surrounding specific airplane flight paths. Capabilities like this are well within reach.

To reach the immediate goal of the cloud-temperature project, our team used only one of 16 GOES data channels. The possibilities are almost limitless for other organizations to take advantage of our open-source solution, node-dispatching system, and foundation model research. Weather scientists now have new tools to learn more about the underlying physical systems that govern life on planet Earth.

Conclusion

By blending satellite data, USRA RIACS’s data science and Earth science expertise, and the BCG X AI Science Institute’s engineering and data science expertise, this project establishes a foundation model that can accelerate extreme weather research and broader climate analytics. The custom node-dispatching system, dynamic preprocessing approach, and model collection have collectively delivered a scalable, powerful framework that can be replicated and adapted by teams within and beyond the three partner organizations. Our foundation models are openly available on Hugging Face.

The team developed an orchestration framework used for distributed training on the National Research Platform (NRP), a National Science Foundation (NSF) funded network of high-performance university resources across the United States. This orchestration framework opens the door to commercial applications, from aviation safety to fisheries management, and stands as a milestone in AI at Scale innovation.

Acknowledgements

This work used resources available through the USRA Research Institute for Advanced Computer Science (RIACS) and the National Research Platform (NRP) at the University of California, San Diego. NRP has been developed, and is supported in part, by funding from the National Science Foundation, from awards 1730158, 1540112, 1541349, 1826967, 2112167, 2100237, and 2120019, as well as additional funding from community partners.

Disclaimer: This document has been written with an engineering-focused audience in mind, describing in technical detail an overview of deploying AI at large scale using HPC and distributed computing frameworks.