Foreword

Harnessing ever-increasing amounts of data continues to be one of the most formidable challenges facing companies embarking on Integrated Business Planning (IBP) transformations. To do so effectively, companies must understand the major characteristics of large data sets and the complex process necessary to gain sufficient insight and tangible benefits from the data. We will delve into the factors that make mastering data so difficult and provide examples and guidance on how to overcome them.

Major characteristics of IBP data

In a perfect world, the data needed for integrated business planning would be perfectly accurate, real time, all-encompassing, and infinitely granular. It is more realistic to treat each characteristic in practical terms: make sure your data is good enough for the decisions it supports, put data governance in place, and then continually improve the overall characteristics of your data through incremental analytics and data scrubbing. Let’s look more closely at each of these attributes.

Accuracy: Of course, perfect data would be ideal. Unreliable or incorrect data can have strong compounding effects, such as degrading the signal for any type of process model. But when investing in data quality, the law of diminishing returns can quickly come into play. It is more important to reach a point at which your data is within an acceptable margin of error, then focus on knowing where the gaps and weaknesses are and designing for resilience.

Real-Time: Similarly, the cost and complexity of achieving a real-time data infrastructure must be weighed against the incremental gain in the accuracy and agility of your planning. In most cases, data exchange across systems is best when optimized for decision rhythms. For example, sending a real-time feed of demand-forecast data for trade budgeting may be of little value when trade budget decisions are reviewed only a few times a month.

All-Encompassing: IBP requires a great deal of both internal data and external data, such as consumption data, third-party data, or information about your competition. Having all this data at your fingertips would, again, be ideal. But data, especially external data, can be expensive, hard to clean, and even harder to maintain. A more practical approach is to start with the data you can control, your internal data, and then expand your data universe from there.

Granular: The key for this characteristic is to create planning systems that are flexible enough to aggregate or disaggregate the data to match the needs of those using it. Sales teams, for example, may need a great deal of granularity, perhaps down to the individual SKU or item level, while supply chain teams can use data with much less granularity. To achieve this level of flexibility between systems, you must clearly define both your data consistency and your data structures.
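As a rough illustration of moving between levels of granularity, the sketch below rolls item-level demand up to a product family and then splits a family-level plan back down in proportion to each item's share. The record layout, the names `aggregate_by_family` and `disaggregate`, and the proportional-split rule are all assumptions made for illustration; real planning systems may use other allocation rules.

```python
from collections import defaultdict

# Hypothetical item-level demand records: (item, product_family, units).
ITEM_DEMAND = [
    ("SKU-001", "Snacks", 120),
    ("SKU-002", "Snacks", 80),
    ("SKU-003", "Beverages", 200),
]


def aggregate_by_family(records):
    """Roll item-level units up to the product-family level."""
    totals = defaultdict(int)
    for _item, family, units in records:
        totals[family] += units
    return dict(totals)


def disaggregate(family_plan, records):
    """Split a family-level plan back to items in proportion to their demand share."""
    family_totals = aggregate_by_family(records)
    return {
        item: family_plan[family] * units / family_totals[family]
        for item, family, units in records
        if family in family_plan and family_totals[family] > 0
    }


# Coarse view for supply chain, fine view for sales.
family_view = aggregate_by_family(ITEM_DEMAND)
item_view = disaggregate({"Snacks": 300}, ITEM_DEMAND)
```

Both views are derived from the same records, which is one way to keep the coarse and fine numbers consistent with each other.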

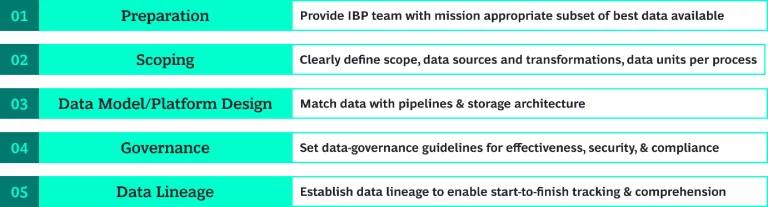

Overview: Five steps to data transformation

To truly gain insight from the information and provide tangible benefits in the decision-making process, the data transformation must holistically encompass the above-mentioned aspects. To accomplish the transformation, companies must take five necessary steps.

On closer examination

1. Preparation: Most legacy organizations will have multiple systems and data sources. They will also have processes built on those systems, along with dependencies for normalizing the data, which can lead to the same number being defined differently depending on who is looking at it. As such, sufficient effort must be focused on:

- Disentangling these processes

- Formalizing source contracts, collection, and data-refresh cadences

- Building out relevant infrastructure and data pipelines

- Building out people systems and processes to manage the pipelines

- Continuously monitoring and enhancing the pipelines so that the technology evolves as the data size increases

Preparation is perhaps the most time-intensive undertaking and is best done in a staggered manner that allows for quicker results while also maintaining cohesion between the different services.

For a large retail client, our BCG X team upgraded the existing ERP system, built out an enterprise data platform, and created downstream data layers that provided a more cohesive view of the organization’s data, increased visibility, and led to a sharp increase in use cases for the data.

2. Scoping: This is an often-neglected aspect of a build that quickly comes to the fore when problems in measurement materialize. It is important to formally acknowledge the data scopes through written records and advertise them broadly to keep everyone on the same page. Potential data hazards include:

- Shipment data that is tracked either as actualized (net of returns and unfulfilled orders) or as invoiced

- Multiple product identification standards, or shipments recorded in multiple units of measure

- Data residing in different sources, such as orders placed in SAP but actualized shipments tracked in Kinaxis

- Data categorization spread across multiple systems, such as customer orders recorded in SAP but product hierarchy created in Kinaxis

- Forecasting performed for a subset of customers, such as retail

- Data not refreshed at the cadence that forecasting requires

- SMEs that have incorrect definitions of data attributes and their usage
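Two of the hazards above, mixed units of measure and data that is refreshed too rarely, lend themselves to simple automated checks. The following is a minimal sketch under stated assumptions: the `ShipmentRow` layout, the conversion factors in `UNITS_PER_EACH`, and the seven-day threshold are hypothetical and would come from your own written scope records.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Hypothetical conversion factors to a single base unit ("each").
UNITS_PER_EACH = {"each": 1, "case": 12, "pallet": 480}


@dataclass(frozen=True)
class ShipmentRow:
    sku: str
    quantity: int
    unit: str             # unit of measure as recorded by the source system
    last_refreshed: date  # when the source last delivered this row


def to_each(row: ShipmentRow) -> int:
    """Convert a quantity to the base unit, failing loudly on unknown units."""
    if row.unit not in UNITS_PER_EACH:
        raise ValueError(f"Unknown unit of measure: {row.unit!r}")
    return row.quantity * UNITS_PER_EACH[row.unit]


def is_stale(row: ShipmentRow, today: date, max_age_days: int) -> bool:
    """Flag rows older than the refresh cadence that forecasting requires."""
    return today - row.last_refreshed > timedelta(days=max_age_days)


today = date(2024, 6, 10)
rows = [
    ShipmentRow("SKU-001", 3, "case", date(2024, 6, 9)),
    ShipmentRow("SKU-001", 10, "each", date(2024, 5, 1)),
]
total_each = sum(to_each(r) for r in rows)
stale_rows = [r for r in rows if is_stale(r, today, max_age_days=7)]
```

Raising an error on an unknown unit, rather than silently skipping the row, keeps scope gaps visible instead of letting them distort totals.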

3. Data Model & Platform design: The data platform is a layer of fabric connecting multiple sources rather than a single component. Since the underlying storage solutions and their number change as technology advances, an abstraction must be in place to protect the application code from the changes. This can be a custom interface or a utility that already exists for a given language. (For example, Python has fsspec, short for “Filesystem interfaces for Python,” an interface for interacting with different storage solutions.)
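To make the idea of a storage abstraction concrete, here is a minimal sketch of the custom-interface option using only the Python standard library. The `Storage` protocol, the two backends, and `save_forecast` are hypothetical names chosen for illustration; a production platform would more likely rely on an existing utility than on hand-written backends like these.

```python
import tempfile
from pathlib import Path
from typing import Protocol


class Storage(Protocol):
    """Minimal interface that application code depends on."""

    def read_text(self, key: str) -> str: ...
    def write_text(self, key: str, data: str) -> None: ...


class LocalStorage:
    """Stores each key as a file under a root directory."""

    def __init__(self, root: str) -> None:
        self._root = Path(root)

    def read_text(self, key: str) -> str:
        return (self._root / key).read_text(encoding="utf-8")

    def write_text(self, key: str, data: str) -> None:
        path = self._root / key
        path.parent.mkdir(parents=True, exist_ok=True)
        path.write_text(data, encoding="utf-8")


class InMemoryStorage:
    """Stand-in for a different backend, such as an object store."""

    def __init__(self) -> None:
        self._items: dict[str, str] = {}

    def read_text(self, key: str) -> str:
        return self._items[key]

    def write_text(self, key: str, data: str) -> None:
        self._items[key] = data


def save_forecast(storage: Storage, region: str, csv_body: str) -> str:
    """Application code: knows the interface, not the backend behind it."""
    key = f"forecasts/{region}.csv"
    storage.write_text(key, csv_body)
    return key


# The same application function runs unchanged against either backend.
with tempfile.TemporaryDirectory() as tmp:
    for backend in (LocalStorage(tmp), InMemoryStorage()):
        saved_key = save_forecast(backend, "emea", "sku,units\nSKU-001,120\n")
        round_trip = backend.read_text(saved_key)
```

Swapping a storage solution then means adding one backend class, while functions such as `save_forecast` stay untouched.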

“Data model” refers to the structure of, and relationships between, the various data attributes that drive IBP. As described above, this data comes from disparate operational and functional sources. The relationships between them, therefore, must be formed on the basis of how the data is used and where it is shown.

For a large B2B client, our team created an internal planning application that required separating data structures based on two distinct patterns of viewing the data: high-granularity charts and graphs that required a high level of detail in the data, and low-granularity ones that showed trends over a longer time horizon.

4. Governance: In the case of IBP applications, data governance typically encompasses the following:

Role-Based Access Control (RBAC): According to this model, all interactions on the applications are attributed to clearly defined user personas, and each persona can be assigned to one or more users. These personas govern the rights attached to a given UI component, such as “read,” “edit,” or “delete.” In this way, access controls are put in place using the persona or role. Usually, RBAC is integrated with an organization’s IT-maintained directory services, most commonly Microsoft Active Directory.
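The persona model can be sketched as two lookups: personas map to rights per UI component, and users map to personas. The persona names, users, and components below are hypothetical, and in practice the user-to-persona mapping would typically come from the directory service rather than a hard-coded dictionary.

```python
# Hypothetical personas: rights granted per UI component.
PERSONA_RIGHTS = {
    "demand_planner": {"forecast_grid": {"read", "edit"}},
    "executive": {"forecast_grid": {"read"}, "summary_dashboard": {"read"}},
}

# Hypothetical assignment of personas to users.
USER_PERSONAS = {"alice": {"demand_planner"}, "bob": {"executive"}}


def is_allowed(user: str, component: str, action: str) -> bool:
    """Return True if any persona assigned to the user grants the action."""
    return any(
        action in PERSONA_RIGHTS.get(persona, {}).get(component, set())
        for persona in USER_PERSONAS.get(user, set())
    )


can_alice_edit = is_allowed("alice", "forecast_grid", "edit")
can_bob_edit = is_allowed("bob", "forecast_grid", "edit")
```

Unknown users, personas, or components fall through to an empty set, so the default answer is to deny access.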

Attribute-Based Access Control (ABAC): In this governance model, data controls affect what information a user sees, while the personas govern how that user interacts with the application. A user is assigned a specific set of attributes that act as filters and are passed down from the UI. These attributes are then applied at the source level (database, cache, static files), and the filtered information is returned to the application layer. Applications may implement this differently, but in off-the-shelf solutions ABAC is usually implemented at the application level itself.
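A minimal sketch of attribute filters follows, assuming each user carries a set of allowed values per field. The users, fields, and rows are hypothetical, and the filtering here runs over an in-memory list for brevity; at the source level the same attributes would more likely be turned into query predicates.

```python
# Hypothetical user attributes: allowed values per data field.
USER_ATTRIBUTES = {
    "alice": {"region": {"EMEA"}, "channel": {"retail", "online"}},
    "bob": {"region": {"EMEA", "APAC"}},
}

# Hypothetical planning rows as they might come back from a source.
ROWS = [
    {"sku": "SKU-001", "region": "EMEA", "channel": "retail", "units": 120},
    {"sku": "SKU-002", "region": "APAC", "channel": "retail", "units": 80},
    {"sku": "SKU-003", "region": "EMEA", "channel": "wholesale", "units": 200},
]


def visible_rows(user, rows):
    """Keep rows whose values match every attribute filter assigned to the user."""
    filters = USER_ATTRIBUTES.get(user)
    if filters is None:
        return []  # unknown users see nothing
    return [
        row
        for row in rows
        if all(row.get(field) in allowed for field, allowed in filters.items())
    ]


alice_skus = [row["sku"] for row in visible_rows("alice", ROWS)]
bob_skus = [row["sku"] for row in visible_rows("bob", ROWS)]
```

Here the attributes decide which rows a user sees, independently of what the user's persona allows them to do with those rows.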

5. Data Lineage: Data lineage involves capturing and documenting where the data originated, how it was transformed, and the steps it went through as it moved across systems, applications, and processes. Data lineage provides organizations with an understanding of how data is created, manipulated, and utilized within their systems. It helps address questions such as:

- Where did the data originate?

- Who accessed or modified it?

- Where was it stored?

- How was it used or analyzed?

For a large client, the team built a custom automated tracer from the ground up because the data came from disparate sources, both on-premises and in the cloud. The tracer is designed to store upstream and downstream metadata according to the storage type (Parquet, relational, etc.). This information could then be used to reconstruct the entire flow of data in different formats and at different levels.
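The general idea of storing upstream and downstream metadata can be sketched as a small directed graph. This is not the client tracer described above; the `LineageGraph` class, its methods, and the dataset names are hypothetical and only illustrate how recorded edges let you reconstruct a flow of data.

```python
from collections import defaultdict, deque


class LineageGraph:
    """Records which datasets feed which, plus storage metadata per dataset."""

    def __init__(self):
        self._upstream = defaultdict(set)    # dataset -> direct inputs
        self._downstream = defaultdict(set)  # dataset -> direct outputs
        self.metadata = {}

    def register(self, name, storage_type, inputs=()):
        """Record a dataset, its storage type, and the datasets it is built from."""
        self.metadata[name] = {"storage_type": storage_type}
        for source in inputs:
            self._upstream[name].add(source)
            self._downstream[source].add(name)

    def _walk(self, start, edges):
        """Breadth-first traversal that collects every reachable dataset."""
        seen, queue = set(), deque([start])
        while queue:
            for neighbor in edges.get(queue.popleft(), ()):
                if neighbor not in seen:
                    seen.add(neighbor)
                    queue.append(neighbor)
        return seen

    def origins(self, name):
        """All datasets that directly or indirectly feed `name`."""
        return self._walk(name, self._upstream)

    def impacted(self, name):
        """All datasets that directly or indirectly depend on `name`."""
        return self._walk(name, self._downstream)


graph = LineageGraph()
graph.register("erp_orders", "relational")
graph.register("shipments_raw", "parquet")
graph.register("demand_history", "parquet", inputs=["erp_orders", "shipments_raw"])
graph.register("forecast", "relational", inputs=["demand_history"])

forecast_origins = graph.origins("forecast")
orders_impact = graph.impacted("erp_orders")
```

Walking upstream answers where a dataset originated, and walking downstream shows what would be affected if a source changed.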

The promise of IBP lies in its ability to bring together people, processes, and data in a seamless, responsive ecosystem. The road to realizing this promise is not just about technology—it’s about the discipline to manage complexity, the foresight to build scalable foundations, and the patience to navigate the messy realities of legacy systems and fragmented data landscapes. Organizations that succeed in IBP transformation do so by approaching data transformation deliberately: with clear scoping, robust design, thoughtful governance, and a deep respect for the lifecycle and lineage of information.

Transformation, by its nature, is a journey. And data, when treated as a strategic asset and not just a byproduct of operations, can become one of the most powerful levers for change.