When it comes to finding responsible ways to develop and deploy AI, the stakes couldn’t be higher—particularly in light of the generative AI revolution. This is true for individuals, for society, and for the organizations that drive the use of AI technologies in our world today.

Organizations in a multitude of industries are realizing huge benefits from AI—benefits that are amplified when AI systems are developed responsibly. Organizations that lead in responsible AI build better products and services, experience accelerated innovation, and decrease the frequency and severity of system failures.

Even so, it’s not unreasonable to have reservations about AI. Workers fear replacement; consumers, a loss of privacy. Even business leaders are wary of the speed at which tools such as generative AI are developing. They remain uncertain about how these technologies will affect their organizations, and they wonder:

How do we weigh the risks posed by AI against the risk of falling behind?

The risks of AI are indeed plentiful. Researchers have found racial, gender, and socioeconomic biases in multiple hiring and health care algorithms. AI system lapses have produced racial bias in image processing, faulty or inappropriate product recommendations, and gender bias in credit offers. And generative AI systems, however impressive their capabilities, have shown an unsettling tendency to output false or misleading information.

These are serious concerns. If AI is to fulfill its potential for bettering lives and improving equity and inclusion, we’ll need to harness the power of AI systems without causing harm or unintended consequences.

It’s important to remember: AI is as much a human challenge as it is a technological one.

Meeting those challenges requires a careful examination of the ethics, governance, and organizational conditions that surround and enable the use of AI. It also demands collaborative, interdisciplinary ecosystems—composed of AI developers, UX designers, ethicists, business leaders, users, and others—to ensure the responsible development and deployment of the technology.

The arrival of generative AI only raises the stakes. It’s now more critical than ever to instill responsible AI practices throughout every organization.

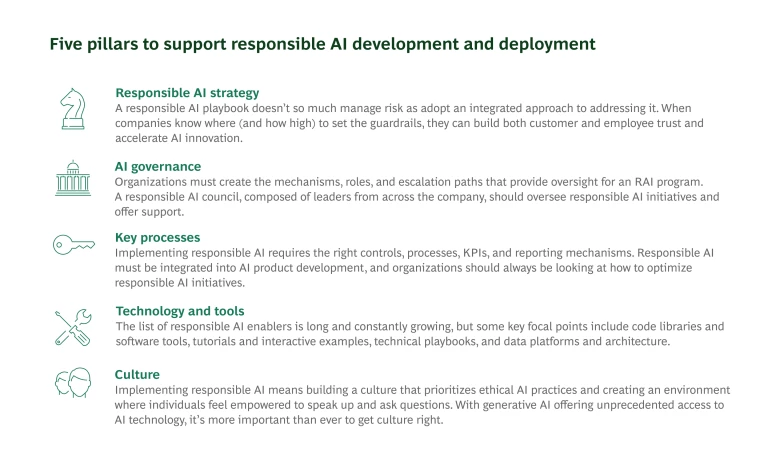

Building a responsible AI strategy

To operationalize the responsible use of AI technology across their organizations, public- and private-sector leaders must build a strong foundation of responsible AI principles, mechanisms, and tools.

We are entering a period of generational change in artificial intelligence, and responsible AI practices must be woven into the fabric of every organization. For our part, BCG has instituted an AI Code of Conduct to help guide our AI efforts.

When developed responsibly, AI systems can achieve transformative business impact even as they work for the good of society.