In the world of data and analytics , the ability to converse with data using natural language can seem almost like science fiction. But in this article, we highlight how we partnered with Scale AI on a real-life implementation of a generative AI (GenAI) solution to enable business users to access data using natural language, a technique known as text-to-SQL.

Context and Objective

Our journey began with a challenge: How could we empower business users, irrespective of their technical skills, to access and analyze data through simple natural language queries? The goal was to remove barriers to data and enable a culture in which data-driven insights are accessible to anyone.

Our client was a highly regulated entity that had strict regulatory compliance standards they had to abide by. While partnering with their data security teams during the design of our solution, we quickly realized that we could not use closed source models, which would require sending sensitive database information to external APIs. Instead, we decided to push the AI frontier by fine-tuning open-source Large Language Models (LLMs) to be adept SQL “data analysts”.

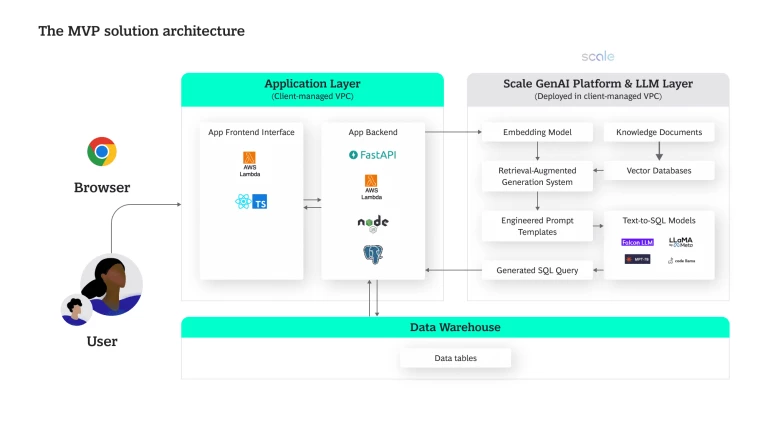

To help our client, we partnered with Scale AI , a leading company fueling the generative AI revolution. Scale provided unique capabilities through their proprietary Data Engine, their full-stack enterprise GenAI platform solution, as well as their expertise to fine-tune, evaluate, host, and deploy the Text-to-SQL models for our client. With Scale GenAI Platform (SGP), the models were hosted on the client’s Virtual Private Cloud (VPC) which ensures sensitive data is secured and empowers the client to manage model behaviors.

Through close collaboration, we turned cutting-edge GenAI technologies into a scalable enterprise Text-to-SQL solution.

High-Level Solution Design

The first step was to design the solution architecture, which included the frontend application, the LLM layer, and the target database. Leveraging the capabilities of Scale's GenAI platform and using public text-to-SQL datasets, we were able to rapidly test a variety of top-performing, open-source models including Llama2, Mixtral, and Code Llama.

In parallel, we defined a compact but representative real-world evaluation dataset that allowed us to rapidly experiment and improve our solution. Soon, however, we encountered several unforeseen challenges.

Tackling Complications Head-On

Benchmarks

During the preliminary phase of experimentation, we initially turned to the most widely recognized public benchmark for both training and evaluation—the Yale Spider Challenge . At first glance, the Spider leaderboard suggested that the text-to-SQL problem is well solved, with teams consistently achieving accuracies above 80%.

This perception proved to be wrong. The Spider dataset, while rigorous, is predominantly tailored towards academic research and fails to reflect the messiness and complexity of a real-world enterprise data model. A real-world enterprise data model might, for example, contain inconsistent data structures from legacy systems, unclear foreign key relationships, semantically ambiguous column names, inconsistent field names, or data quality issues. When we applied the models fine-tuned on Spider, to real-world enterprise databases, the performance dropped sharply, revealing a significant disparity between academic benchmarks and real-world complexity.

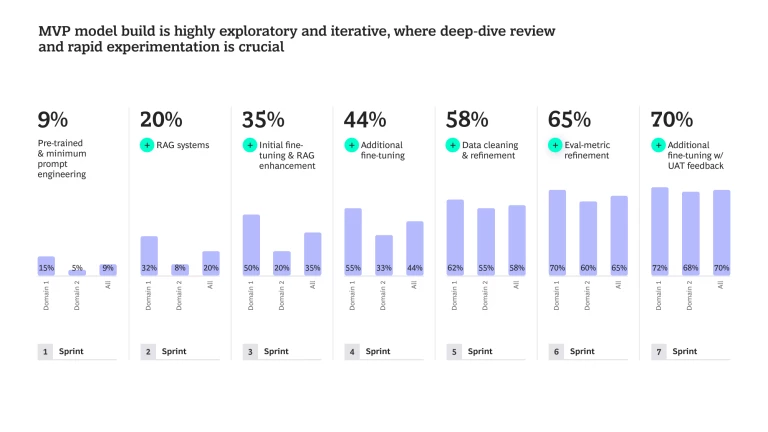

Addressing Accuracy

As shown in Scale’s prior research , simply prompt engineering both open source and closed source models does not provide acceptable accuracy. We bridged this gap by designing a state-of-the-art Retrieval-Augmented Generation (RAG) system. The RAG framework enhanced our text-to-SQL model by injecting pertinent domain knowledge including translations of business terminology, formulas, and database schema details directly into the prompt. We then added model fine-tuning and In-Context-Learning (ICL) examples to further boost accuracy in high complexity requests.

Data Quality

Furthermore, generative AI solutions, when compared to traditional machine learning systems, rely heavily on unstructured data sources often produced by humans. Inherent human subjectivity introduces a significant degree of ambiguity into data. Beyond ambiguity in training and evaluation data, the performance of human generated SQL queries can also cause downstream challenges. Inefficiently written queries, for instance, can lead to extended run times and degrade the overall user experience.

To overcome these challenges and the benchmark disparity, we leveraged Scale’s Data Engine to create customer specific fine-tuning data. We dedicated considerable time and effort to meticulously review and refine the data, and then re-trained the models on this improved data. We then established a robust data management system that has a continuous improvement process designed to incorporate human-in-the-loop data governance.

Model Evaluation

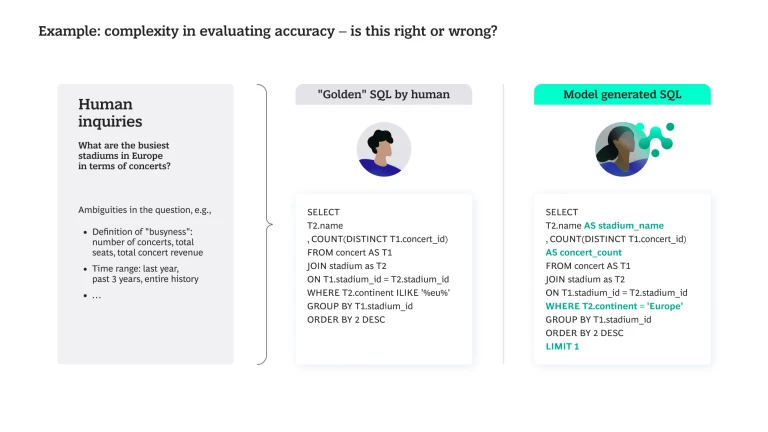

Finding the metric that could accurately measure the output quality proved to be as difficult as it was critical. Traditional accuracy metrics such as Execution Accuracy and Exact Matching could not fully capture the nuance and complexity of user interactions with the system. We needed a metric that could capture both the binary nature of correctness and the nuanced spectrum of human judgment. (See below for an example.)

To evaluate the solution appropriately, we deployed a metric system that covered diverse aspects of "accuracy." The more stringent metrics (e.g., Execution Accuracy) were used to assess baseline accuracy, while custom-built auto-eval metrics leveraging a separate LLM was used to approximate human interpretation. Other metrics such as Exact Matching and Answer Similarity offered additional ways to assess model quality.

Our Approach in Summary

A combination of data enhancement, RAG, and model fine-tuning enabled us to tackle all these issues head-on. By iteratively experimenting and refining, our team was able to improve text-to-SQL model accuracy, reaching acceptable performance levels in a relative short timeframe.

Beyond Technology: Change Management and Responsible AI

This journey wouldn't be complete without acknowledging the human element. Integrating this cutting-edge AI solution into the everyday business user workflows required careful change management and training. Seamlessly partnering with the client team each step of the way, we embarked on a collaborative journey: conducting user interviews, crafting solution features, developing solutions, selecting testers, orchestrating User Acceptance Testing (UAT), planning rollouts, and designing trainings.

Our commitment to Responsible AI was underpinned by a rigorous assessment of RAI risks and the implementation of risk mitigations. Our robust RAI framework and detailed action plans ensured that our solutions not only excelled in their technical prowess but were also ethical, unbiased, and transparent.

What We Learned

Reflecting on our journey, we would like to share a few key takeaways:

- Data is the cornerstone of LLM fine-tuning. It is crucial to establish a robust approach and process to collect/create, review, and enhance training and evaluation datasets.

- Robust and artful LLM model evaluation is critical for GenAI customization. It requires flexibility to encapsulate the binary nature of correctness and nuanced spectrum of human judgment. We deployed a metric system that covered diverse aspects of "accuracy.”

- Supervised Fine-Tuning (SFT) is just the beginning. Limited data can be gathered for initial fine-tuning. Hence, quickly setting up the process to enable continuous learning based on tester/user input is important.

- Actively experimenting and leveraging open-source communities can bring surprises. Newly released models and techniques can lead to solution breakthroughs with little effort.

- A strong RAI framework is crucial for GenAI solution roll-out. Hallucination and inaccuracy are inevitable: Clients must understand the associated risks and then, through change management, design guardrails and implement mitigants.

Conclusion

Text-to-SQL technology holds significant potential for enabling the entire organization to unlock valuable insights from data. But the path to effective implementation is intricate, requiring a tailored approach to design, evaluate, deploy, and scale these solutions. To unlock text-to-SQL’s full value potential, enterprises must also design appropriate change management processes, RAI guardrails, and human-in-the-loop flywheels.