Across industries, AI is the new epicenter of value creation, fueling opportunities and competitive advantage. But as its potential grows, so do concerns about it. Examples of AI behaving badly are common and have raised real fears about its effects on fairness and equity, privacy, accuracy, and security. The arrival of generative AI (GenAI), a transformative technology that amplifies both the power and the risks of AI, only increases the need for sound AI policies. So how can organizations deliver the benefits of AI while mitigating its risks?

The answer is robust AI governance. By establishing and deploying a responsible AI (RAI) program, and championing it from the C-suite down, organizations can realize the full potential of AI while building trust and complying with emerging regulations. The time to start is now: our research indicates that reaching high levels of RAI maturity takes two to three years. Waiting for AI regulations to mature is not an option.

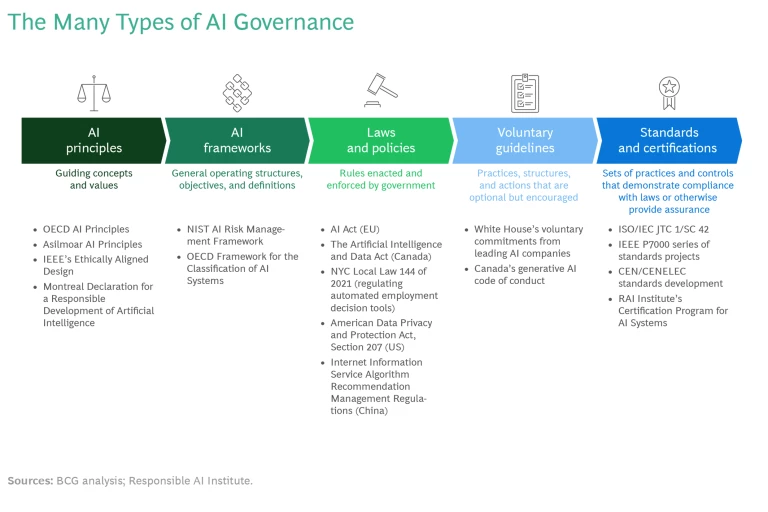

But as business leaders survey the AI governance ecosystem, they find a confusing hodgepodge of guidelines and frameworks: plenty of mechanisms, but little clarity on how they fit together or which are likely to be useful. A new report from the Responsible Artificial Intelligence Institute and Boston Consulting Group, Navigating Organizational AI Governance, provides much-needed insight on these points.

Rules and Recommendations

Each governance mechanism has a distinct scope and purpose. (See the exhibit.) AI principles, promulgated by bodies such as the Organization for Economic Co-operation and Development and the Institute of Electrical and Electronics Engineers, are high-level, aspirational statements that provide direction on themes like fairness and equity, privacy, transparency, and accountability. AI frameworks, such as the AI Risk Management Framework developed by the National Institute of Standards and Technology, turn the focus to implementation, providing operating structures, objectives, and definitions.

Laws and policies establish minimum requirements that businesses must meet. These rules vary greatly in jurisdiction and scope. Some, like New York City’s law governing the use of automated employment decision tools, center on a particular capability. Others have a broader impact. For example, the EU’s proposed AI Act covers all AI systems developed, deployed, or used within the EU, regardless of industry. Businesses must understand and track this emerging patchwork of regulations to stay compliant.

Three additional categories round out the list of governance mechanisms: voluntary guidelines, which governments and industry often develop in concert; standards, which can serve as benchmarks for verifying compliance with regulatory requirements; and certification programs, which can demonstrate to customers, industry partners, and regulators alike that a company’s AI processes conform to the underlying standards.

By understanding the impact and interaction of these mechanisms, organizations can shape their own AI governance more precisely and more effectively. To that end, we’ve found that some best practices can accelerate and smooth the journey.

Getting AI Governance Right

Good AI governance starts at the top, which makes RAI a CEO-level issue. It bears directly on customers’ trust in how a company uses technology, and it addresses organizational and regulatory risks. Setting the right tone from the C-suite is vital to fostering a culture that prioritizes RAI. A 2023 survey by MIT Sloan Management Review and BCG found that organizations whose CEOs participate in RAI initiatives realize 58% more business benefits than those whose CEOs are uninvolved.

We recommend that companies establish a committee of senior leaders to oversee the development and implementation of their RAI program. The committee’s first task should be to create the principles, policies, and guardrails that will govern the use of AI throughout the organization. These principles may be high-level, but they are essential, serving as a guiding light for developing and using AI appropriately. The most effective principles link to an organization’s mission and values, making it easier to zero in on which types of AI systems to pursue, and which ones to avoid.

The next step is to link the RAI program to existing corporate governance structures, such as the risk committee. These linkages help companies avoid a common pitfall: inadvertently creating a shadow risk function. They also ensure clear escalation paths and decision authority for addressing potential problems.

Other best practices include developing a framework that flags inherently high-risk AI applications for greater scrutiny, consulting voluntary guidelines for insight into industry best practices, and monitoring high-profile litigation to anticipate, and begin preparing for, where courts may land on legal issues that are still in flux, such as the impact of GenAI on intellectual property rights.

Building a comprehensive RAI program takes time, but savvy companies can speed the journey. It’s a route worth taking. With the right governance, AI can drive value and growth without driving risk.