Regulation of artificial intelligence is gathering momentum around the globe, with more than 800 measures under consideration in 60-plus countries and territories, as well as the EU. The push doesn’t come as a surprise. AI is a powerful tool that can provide substantial benefits to individuals and society, but it can also be a “black box”: its inner workings, and the results it produces, are often hard to fully comprehend. Governments have a clear interest in protecting against AI failures, such as biased algorithms or incorrect outcomes (a medical misdiagnosis, for example). Businesses also have an interest, given the growth that AI successes can spark and that AI failures can dampen.

The surprise, then, is that organizational readiness for AI regulation is limited. BCG’s sixth annual Digital Acceleration Index (DAI), a global survey of 2,700 executives, revealed that only 28% say their organization is fully prepared for new regulation.

But how do you prepare for rules that are just starting to take shape and will likely take different forms in different regions? We’ve found that the best way for companies to prepare for AI regulation is through a responsible AI (RAI) initiative. At the core of RAI is a set of principles that stress accountability, transparency, privacy and security, and fairness and inclusiveness in how you develop and deploy algorithms. It’s a framework for both the creators and users of AI—and will help both groups respond to any regulation that comes down the road.

Many companies view RAI as a compliance matter. But it’s really an enabler, an engine for innovation—one that improves the performance of AI and accelerates the feedback cycle. Businesses that understand this and treat the coming wave of regulation as an opportunity rather than a burden can satisfy lawmakers and shareholders alike.

Getting RAI right is especially important given the new layers of complexity that upcoming regulations are likely to add—such as requiring that businesses explain their AI’s decision-making. But there are actions that companies can take to get on a firm AI footing.

The Clock Has Already Started

In addition to the DAI survey, BCG annually asks executives about RAI through its partnership with MIT Sloan Management Review. One of the central findings of the most recent study is that it generally takes three years to make meaningful progress in developing RAI maturity. While major laws—like the EU Artificial Intelligence Act—are still in the proposal stage, organizations cannot wait for regulations to take effect before they start implementing their own RAI programs.

Competitive pressures and opportunities add to the urgency. RAI helps companies design and operate systems that align with organizational values and widely accepted standards of right and wrong. It also sparks transformative business impact: RAI helps companies scale their AI efforts, identify and fix problems early, and increase adoption of AI by customers, employees, and other stakeholders. It facilitates innovation that benefits society and the business alike while minimizing unintended consequences. Already, though, some businesses are ahead of others.

RAI maturity, we found, corresponds with digital maturity. The companies that ranked in the top quartile of the DAI were nearly six times more likely to be fully prepared for AI regulation than those in the bottom quartile.

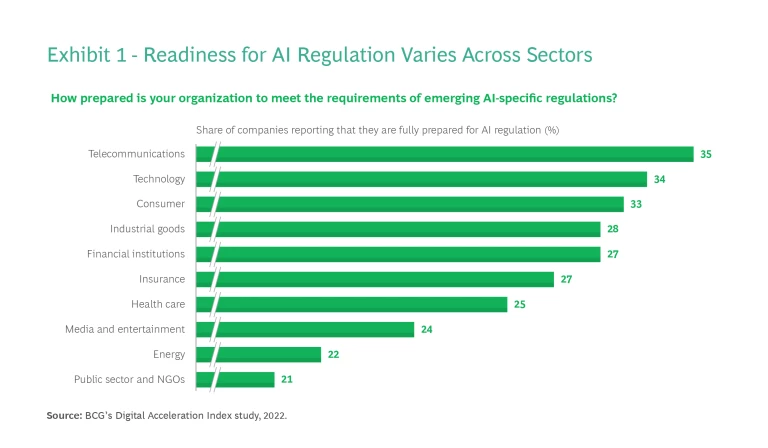

More generally, the telecommunications, technology, and consumer sectors reported the highest levels of preparedness. (See Exhibit 1.) Trailing the field were the energy industry and the public sector. The latter’s ranking should be sobering for lawmakers, as AI failures can erode trust—and government is focused on gaining trust, not losing it.

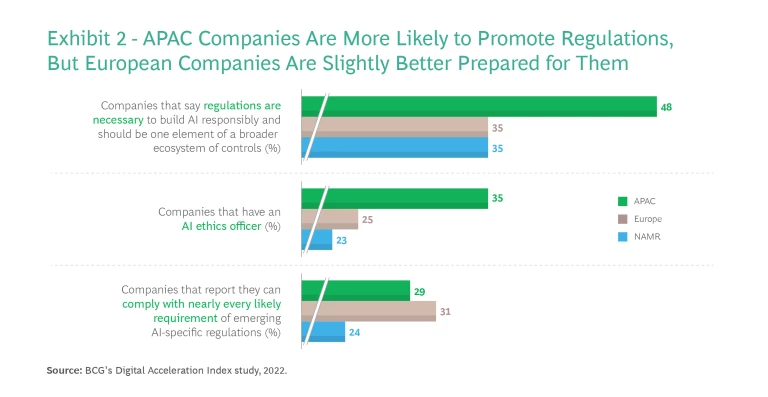

AI preparedness differs among regions as well. Asia-Pacific companies, for example, are more likely than their counterparts in Europe and North America to have an AI ethics officer. Yet a greater share of executives in Europe report that their organizations are prepared for emerging regulation. (See Exhibit 2.) This preparation may stem from the EU’s strong ambition to create a “Brussels effect” (a process of unilateral regulatory globalization in which the European Union de facto externalizes its laws beyond its borders through market mechanisms) and to set a global standard in AI regulation, much as it did with the General Data Protection Regulation (GDPR).

For many companies, these findings should be a call to action. There’s much uncertainty, however, on just what it means—and what it takes—to use AI responsibly: 82% of respondents said they lacked clarity about AI policy and tools. RAI clears the confusion. But given the stakes, business leaders need to be actively involved in an RAI effort. By prioritizing this work, they not only demonstrate to lawmakers, regulators, and the world that they are acting responsibly, but also foster and evangelize RAI strategy and implementation. When executives lead on RAI, they create companies that lead on RAI.

Five Keys to RAI Success

What should that strategy and implementation look like? Business goals and markets will vary—so, too, will AI regulations. In 2019, Singapore instituted an AI governance framework laying out principles, but not penalties, for the private sector. The EU, by contrast, is taking a more aggressive approach. Its proposed legislation would ban practices like biometric mass surveillance (such as using facial recognition technology in public spaces) and credit rating based on social scoring (for instance, looking at an individual’s social media activity when calculating their score). The EU proposal would also impose financial penalties on companies that carry out prohibited practices—up to 6% of a company’s global revenues, drawing from precedents set with the GDPR.

Rules, market dynamics, and company needs and visions related to AI vary widely, so there is no textbook approach to RAI. But we’ve found that organizations that embrace five key principles are better prepared for any AI regulation—and for seizing the full potential of this powerful technology.

Empower RAI leadership. A point person on RAI (typically a chief AI ethics officer, but the title can vary) plays a critical role in keeping initiatives on track. The best RAI leaders are multitalented: well-versed in policymaking, technical requirements, and business needs. They’re able to integrate these elements into a cohesive strategy that generates benefits from AI, adheres to the organization’s broader social values, and ensures compliance with emerging regulations. Successful RAI leaders are also collaborative. They draw upon a cross-functional team (representing business units as well as legal, HR, IT, and marketing departments) in designing and leading RAI programs. These relationships empower RAI leaders, giving them the mandate and support they need to implement RAI programs across the organization.

Build and instill an ethical AI framework. At the heart of RAI is a set of principles and policies—an ethical AI framework—that companies create and infuse into their culture. This lays a robust foundation for meeting new requirements—whatever form they take—without hampering goals and investments. Consider, for example, regulations governing the use of facial recognition technologies. Requirements such as those found in the Biometric Information Privacy Act in the US and the proposed EU Artificial Intelligence Act will vary. For a company operating across jurisdictions, an ethical AI framework—promoting investment in and use of bias mitigation solutions, strong privacy protection, clear documentation, and other measures—makes it easier to meet the obligations of different regulatory schemes. That’s because at their core, all these regulations share one overriding goal: ensuring a responsible approach to AI. And a strong ethical AI framework puts you on that path.

Two-thirds of companies in the DAI survey are already taking important steps toward creating such a framework. These steps include embracing a purpose-driven approach to building AI applications: ensuring that designers understand, right from the start, the goals of a system and the outcomes it generates. Another is transparency, for example, informing users how AI systems utilize their data and making the algorithms less of a black box. When companies can explain how their AI works, they build trust. They also boost adoption of AI systems by the customers and employees who use them. Effective training (promoting awareness of RAI practices and goals across the organization) and communications (getting out the main messages about RAI to internal and external audiences) further enhance transparency. The most digitally mature companies, which are also the most mature in RAI, are nearly three times more likely than their peers to consider such practices highly important and to apply them consistently across the company.


Bring humans into the AI loop. Most current and proposed AI regulations call for strong governance and human accountability. This aligns with a core tenet of RAI: human involvement in AI processes and platforms, a concept that has solid support. Nearly two-thirds of companies believe it’s important to design AI systems that augment, but do not replace, human capacity—and they are already applying that principle. But how they apply it makes all the difference. Feedback loops, review mechanisms, and escalation paths to raise concerns should be integral components of any RAI program. Here, too, the most digitally mature firms are already taking the lead: they’re more than twice as likely as their peers to place a high degree of importance on these practices and consistently apply them.
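As a purely illustrative sketch of the feedback loops, review mechanisms, and escalation paths described above, the Python snippet below routes low-confidence AI decisions to a human reviewer and records how often reviewers override the model. The names (`Decision`, `ReviewQueue`, `override_rate`) and the 0.85 confidence threshold are assumptions made for this example; they do not come from the survey or from any particular regulation.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class Decision:
    case_id: str
    prediction: str
    confidence: float  # model's self-reported score in [0, 1]


@dataclass
class ReviewQueue:
    # Hypothetical cutoff: anything below it is escalated to a person.
    threshold: float = 0.85
    pending: List[Decision] = field(default_factory=list)
    feedback: List[Tuple[str, str, str]] = field(default_factory=list)

    def route(self, decision: Decision) -> str:
        """Escalation path: send uncertain decisions to human review."""
        if decision.confidence >= self.threshold:
            return "auto"
        self.pending.append(decision)
        return "human_review"

    def record_review(self, decision: Decision, human_label: str) -> None:
        """Review mechanism: store the reviewer's verdict next to the model's."""
        self.pending.remove(decision)
        self.feedback.append((decision.case_id, decision.prediction, human_label))

    def override_rate(self) -> float:
        """Feedback loop: share of reviewed cases where the human disagreed."""
        if not self.feedback:
            return 0.0
        overrides = sum(1 for _, pred, label in self.feedback if pred != label)
        return overrides / len(self.feedback)


queue = ReviewQueue()
confident = Decision("case-1", "approve", 0.95)
uncertain = Decision("case-2", "deny", 0.60)
print(queue.route(confident))   # handled automatically
print(queue.route(uncertain))   # escalated to a reviewer
queue.record_review(uncertain, "approve")
print(queue.override_rate())
```

A rising override rate would be one signal that the model, or the threshold, needs another look. In practice the escalation criteria would depend on the use case and the applicable rules.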

Create RAI reviews and integrate tools and methods. RAI spans the lifecycle of an AI system. The goal is to catch and resolve problems as early in development as possible and be vigilant through, and after, launch. Organizations that can monitor AI effects across the lifecycle will win trust from governments and customers alike. This starts by ensuring that the core RAI principles, such as transparency and inclusiveness, are embedded in processes for building and working with algorithms. Next, companies should build or adopt a tool for conducting end-to-end reviews, not only of the algorithms but also of business processes and outcomes. These reviews should be continuous: performed through development, prototyping, and deployment at scale.
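One way to picture a continuous review is as the same check repeated at every lifecycle stage. The sketch below is a minimal, hypothetical example: it compares positive-outcome rates across groups (a simple demographic parity gap, chosen here only for illustration) and flags a stage for escalation when the gap exceeds a tolerance. The function names, the 0.2 tolerance, and the sample records are all assumptions; a real review tool would also cover business processes and outcomes, not just one metric.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Record = Tuple[str, int]  # (group label, outcome: 1 = favorable, 0 = not)


def selection_rates(records: List[Record]) -> Dict[str, float]:
    """Share of favorable outcomes per group."""
    totals: Dict[str, int] = defaultdict(int)
    positives: Dict[str, int] = defaultdict(int)
    for group, outcome in records:
        totals[group] += 1
        positives[group] += outcome
    return {g: positives[g] / totals[g] for g in totals}


def parity_gap(records: List[Record]) -> float:
    """Difference between the highest and lowest group selection rates."""
    rates = selection_rates(records)
    return max(rates.values()) - min(rates.values())


def review_gate(stage: str, records: List[Record], max_gap: float = 0.2) -> bool:
    """Return True if the stage passes; otherwise it should be escalated."""
    gap = parity_gap(records)
    passed = gap <= max_gap
    print(f"{stage}: parity gap {gap:.2f} -> {'pass' if passed else 'escalate'}")
    return passed


# Illustrative data only: group A is favored in 2 of 4 cases, group B in 1 of 4.
sample = [("A", 1), ("A", 1), ("A", 0), ("A", 0),
          ("B", 1), ("B", 0), ("B", 0), ("B", 0)]

for stage in ("development", "prototyping", "deployment"):
    review_gate(stage, sample)
```

Running the same gate at development, prototyping, and deployment is the point of the sketch: a problem caught at the first stage is cheaper to fix than one found after launch.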

Participate in the RAI ecosystem. Nearly 9 of 10 companies in the DAI survey actively contribute to an RAI consortium or working group. That’s a sound strategy, as it fosters collaboration and sharing that can spotlight—and spread—best practices on RAI. Think of it as crowdsourcing that helps you both prepare for AI regulation and improve the performance of your AI systems. Indeed, for savvy players, getting involved can mean getting ahead. The RAI ecosystem is vast and rapidly growing, a natural place to find partners, customers, organizations, and forums that can help you develop and scale AI use cases. Through shared experiences, the ecosystem can provide insights on mitigating the risks and maximizing the rewards of AI. And it’s a venue for an even greater collaboration: an entire ecosystem coming together to address societal concerns proactively.

Using AI responsibly and creating value with AI go hand in hand. Most companies understand that RAI practices are important and want to embed them in their business. But the gap between aspiration and implementation is often wide. Uncertainty over the scope of coming rules should not be an excuse to delay. RAI takes time to get right, but by starting now, companies can help shape the regulatory landscape instead of being overwhelmed by it. They can improve the performance of their AI and minimize system failures, fostering trust and, at the same time, growth.

The authors thank their BCG colleagues François Candelon, Sabrina Küspert, and Laura Schopp for their contributions to this article.