Generative AI is rapidly increasing efficiency, productivity, and quality across industries and, in the process, uncovering new and innovative revenue streams. From deploying GenAI in everyday tasks to inventing new business models for entire functions from customer service to engineering, companies that implement GenAI stand to gain clear market advantage. Achieving full value from GenAI applications, however, requires the careful implementation of a combination of human-based plus automated testing and evaluation (T&E). Only then can companies be sure that their GenAI applications maximize value and minimize risk.

Transitioning from POC to Market-Ready Enterprise Solution

As companies integrate GenAI into their tools and operations, they are discovering a critical gap between developing innovative proofs of concept (POC) and launching them into the market as reliable, enterprise-level solutions that drive tangible impact. A key demand of such scaling is that GenAI systems operate as intended, producing accurate and reliable outputs and demonstrating:

- Proficiency: Consistently generating intended value for users and avoiding incomplete or inaccurate responses that may lead to poor decision making, ultimately reducing both performance and end-user adoption

- Safety: Preventing trolling or malicious use, and ensuring that users don’t inadvertently trigger harmful outputs as a result of input errors or system inability to handle language idiosyncrasies

- Equality: Ensuring that historical social bias does not lead to unequal access to resources or promote harmful stereotypes; accommodating and fairly representing marginalized groups

- Security: Safeguarding against bad actors and protecting sensitive private data, proprietary data, and system details that could enable cyberattacks; producing secure code devoid of security vulnerabilities

- Compliance: Adhering to relevant legal, policy, regulatory and ethical standards per region, industry, and company

Human Testing Is No Longer Enough

To reap the full benefits of enterprise-level GenAI systems, companies must scale their current approaches to testing and evaluation. The challenge is that as GenAI systems evolve and are able to manage a broad range of use cases, the associated risks also increase and evolve. Human-based T&E alone is not powerful enough to map such a rapidly increasing risk landscape. At the same time, automated T&E can execute at a very high level, but it cannot fully capture the human nuances, insights, and expertise necessary for effective risk management. The solution lies in leveraging the unique strengths of both humans and machines.

Human testing, for example, may reveal a specific user input that yields a toxic output. Automated testing can then create hundreds or thousands of variations of that input to measure how often that failure is likely to occur. In this example, the human has played a vital role in discovering the fault but would need hours or days to compile a similar list of input variations. Automated testing can accomplish the task in a matter of minutes.

In the “Human + Machine” T&E scenario, humans contribute the ability to:

- Provide ground truth data to improve evaluation of GenAI systems

- Map the risk landscape through open-ended, exploratory red teaming

- Leverage contextual learning to develop strong, targeted tests and validate failure severity

- Set and shift priorities as new issues and opportunities arise

- Use creativity to identify novel threats outside the set scope of investigation

… while automated testing:

- Systematically and comprehensively tests and identifies known vulnerabilities

- Rapidly executes high-volume testing across a wide range of use cases or attack scenarios

- Continuously tests vulnerabilities across the development lifecycle, allowing for immediate detection and mitigation of such security concerns

GenAI Must be Challenged, Assailed

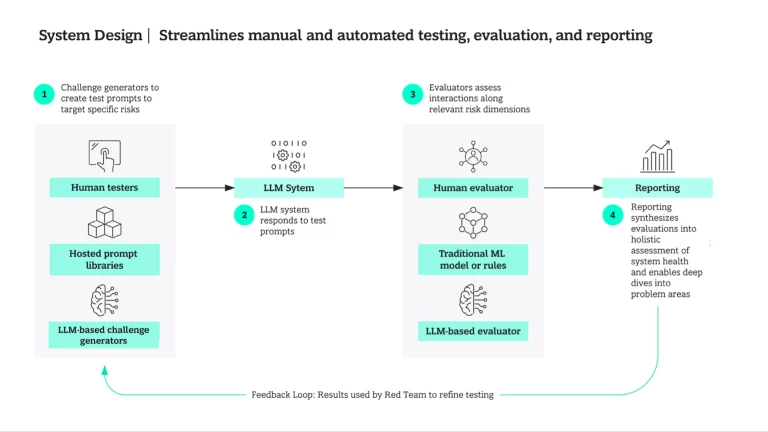

The T&E process is often accomplished using red teaming, defined as the organized process of probing, testing, and attacking GenAI systems from an adversarial stance. To make sure these systems both deliver maximum value and are safe, data scientists and engineers need tools that enable them to conduct red teaming using this combination of human-based and automated testing.

ARTKIT , an open-source T&E toolkit by BCG X , helps BCG clients conduct red teaming. A BCG client recently used ARTKIT to subject a chatbot that automatically processes specific HR requests from among its 26,000 employees to both human-based and automated testing. The toolkit’s proficiency tests identified nonsensical responses to specific, well-intentioned questions and ultimately led to meaningful improvements in response accuracy and completeness. It simultaneously observed that existing guardrails were far too stringent which sacrificed usability by refusing both malicious and well-intentioned prompts. Iterative evaluation eventually helped the client find and maintain the right balance between safety and function.

ARTKIT is also used to scale testing of extended, multi-turn interactions between GenAI systems and end users. GenAI systems can have difficulty maintaining context, coherence, and appropriate responses across multi-turn interactions. A user might introduce new information, changes of subject, contradictions, ambiguities, or combine text and images across multiple turns. Research has also shown that GenAI systems are more likely to fail as the length of a conversation increases due to inherent challenges in managing context with long interaction histories. Inconsistent responses or loss of context by the GenAI system can frustrate users, reduce the effectiveness of the extended interaction, and reduce confidence in system reliability.

Get GenAI Apps to Market Quickly and Proficiently

A hybrid solution like ARTKIT is a key enabler for successful test automation. Data scientists and engineers also need critical thinking, creativity, and an understanding of the full risk surface of their use case and domain to quickly identify issues and proactively derisk builds. This, in turn, can lead to accelerated user acceptance and greater confidence in the final product. The end goal is to help business decision makers and leaders harness the full power of GenAI and our BCG RAI framework, knowing that the results will be safe and equitable — and will deliver measurable, meaningful business impact.