A new BCG survey of large organizations found that almost half of those that believe they have a mature implementation of a responsible artificial intelligence (RAI) program are, in reality, lagging behind. Even organizations that reported rolling out AI at scale overestimated their RAI progress: fewer than half have a fully mature RAI program. This finding is particularly important because an organization cannot achieve true AI at scale without ensuring that it is developing AI systems responsibly.

The Four Stages of RAI Maturity

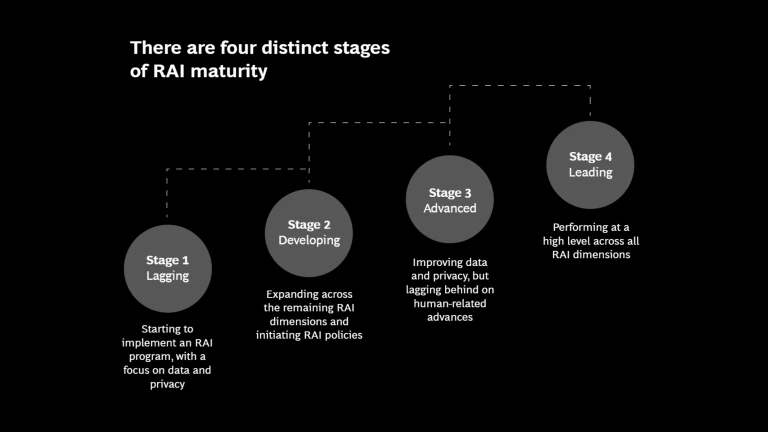

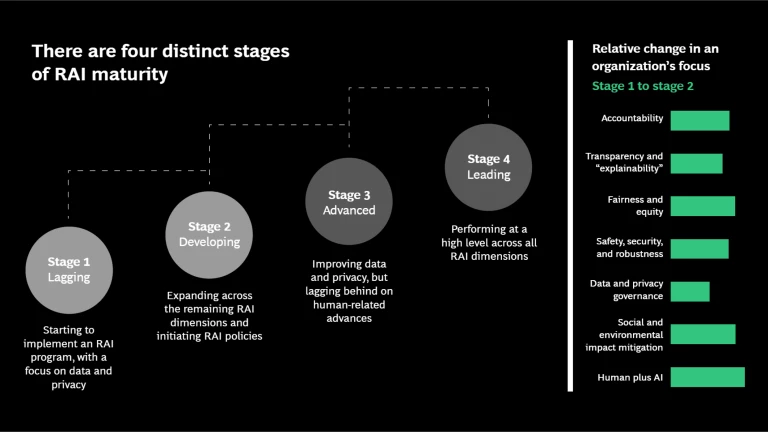

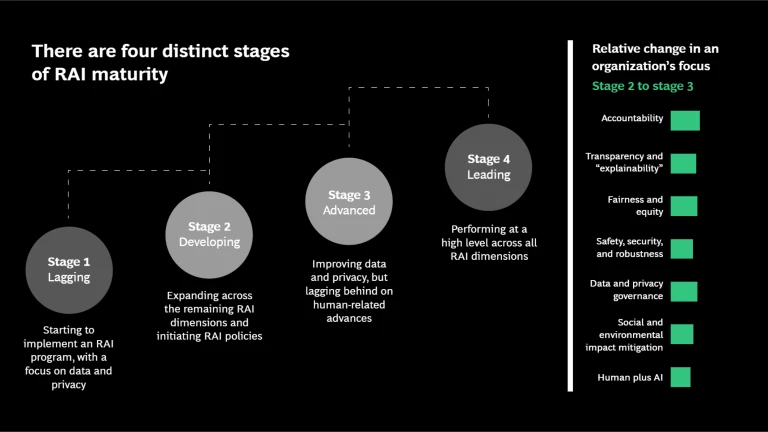

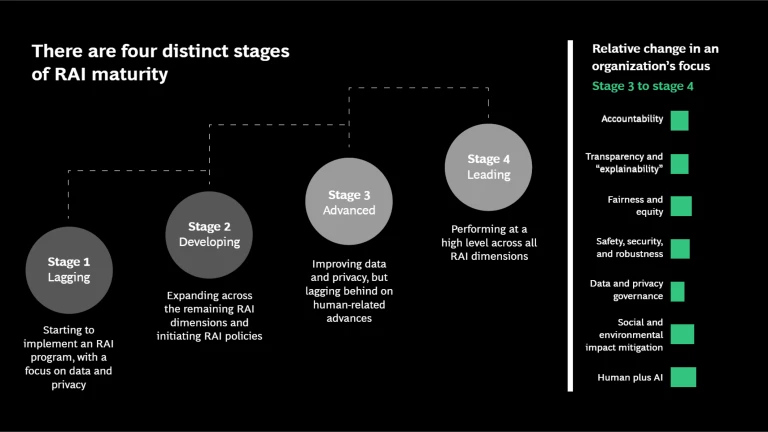

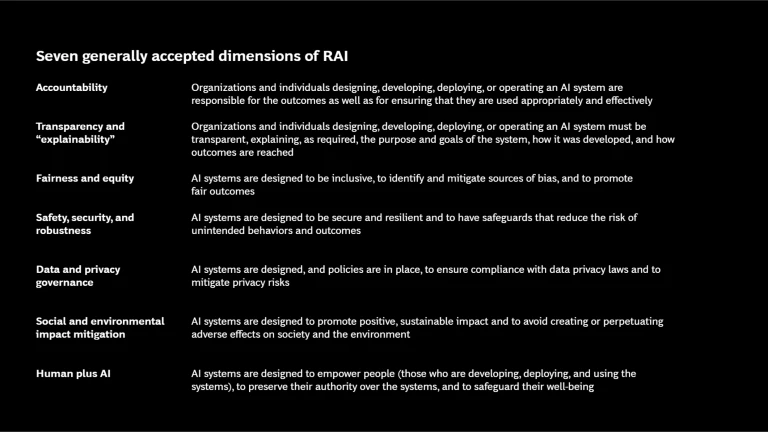

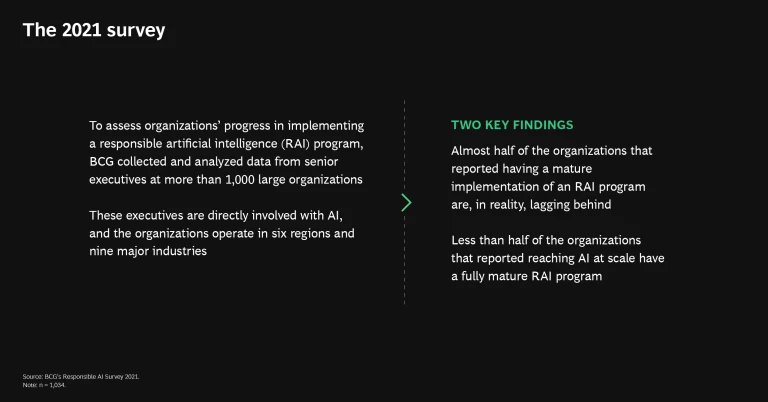

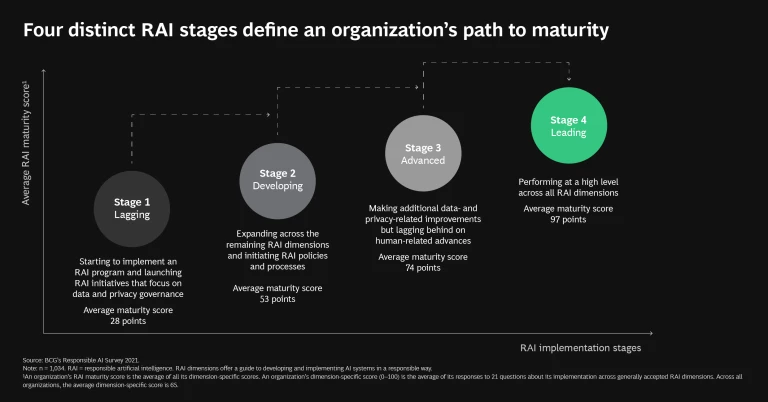

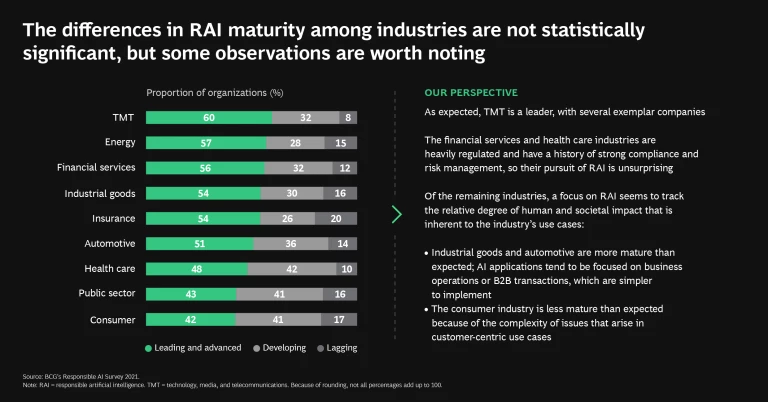

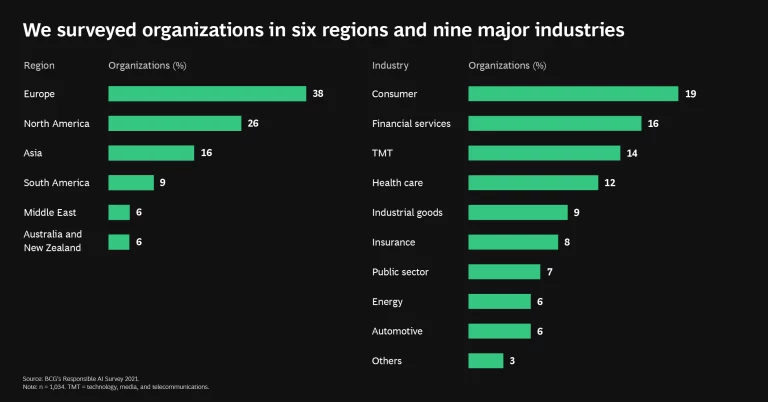

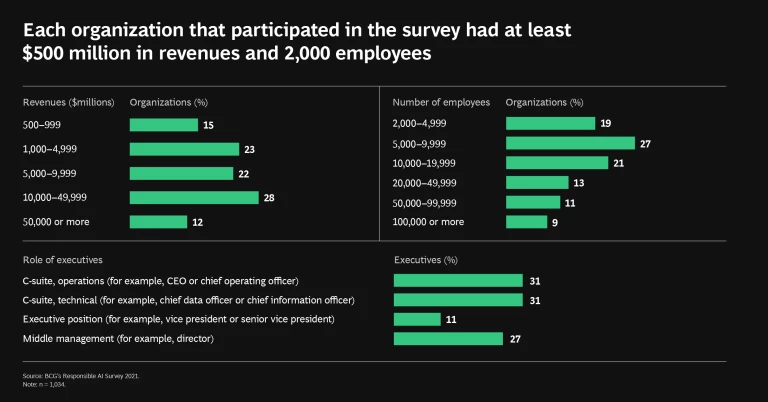

To assess organizations’ progress in implementing RAI programs (the structures, processes, and tools that help organizations ensure their AI systems work in the service of good while transforming their businesses), we collected and analyzed data from senior executives at more than 1,000 large organizations. (See the sidebar “Our Survey Methodology.”) We then categorized these organizations into four distinct stages of RAI maturity: lagging (14%), developing (34%), advanced (31%), and leading (21%). An organization’s stage reflects its progress in reaching maturity across seven generally accepted dimensions of RAI. These dimensions include fairness and equity, data and privacy governance, and human plus AI. The last of these aims to ensure that AI systems are designed to empower people, preserve their authority over AI systems, and safeguard their well-being.

Organizations in the leading stage have reached maturity across all seven dimensions. They have defined RAI principles and achieved enterprise-wide adoption of RAI policies and processes. These organizations are clearly making the most of their relationship with AI.

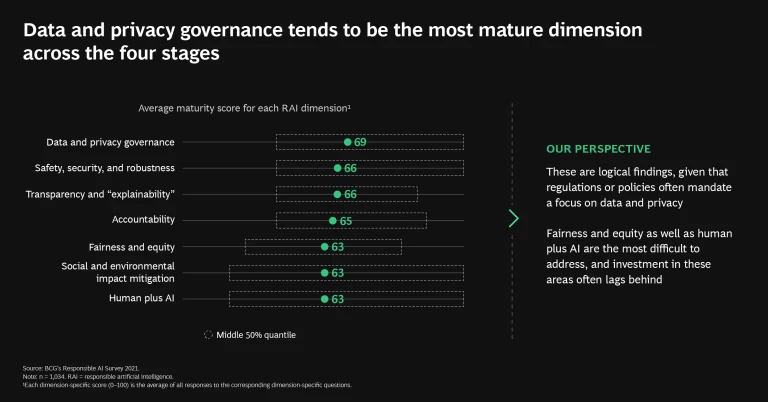

As organizations progress from lagging to leading, each stage is marked by substantial accomplishments, particularly in the areas of fairness and equity as well as human plus AI. This finding is important because organizations’ RAI programs don’t tend to initially focus on these dimensions, and they are the most difficult to address. Accomplishments in these areas are therefore highly indicative of broader maturation in RAI, and they signal that an organization is ready to transition to the next stage of maturity. Meanwhile, organizations consistently focus first on the area of data and privacy governance. This is a logical result, given that regulations and policies often mandate this focus.

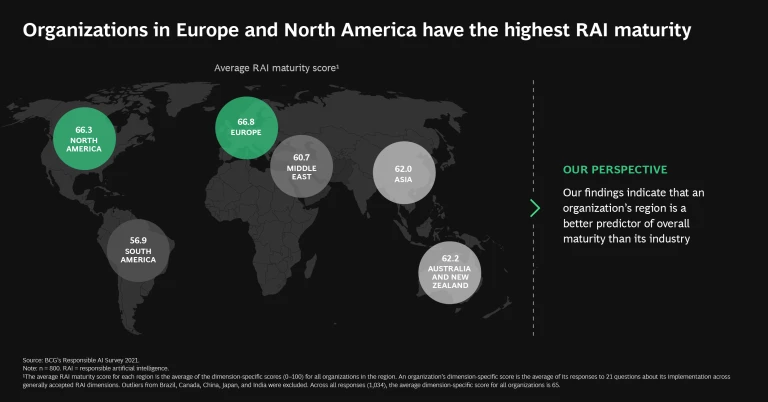

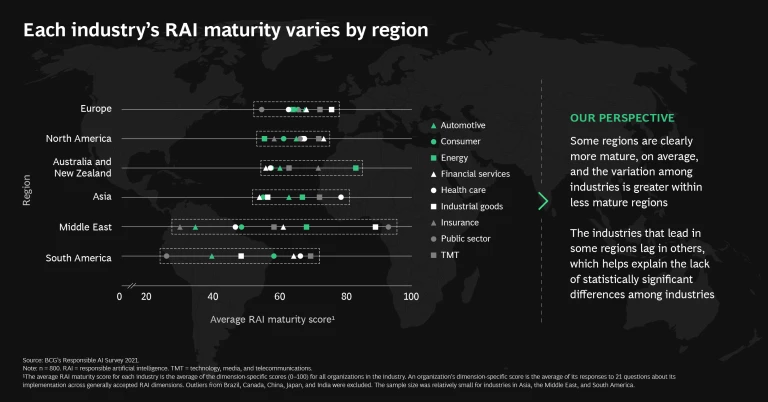

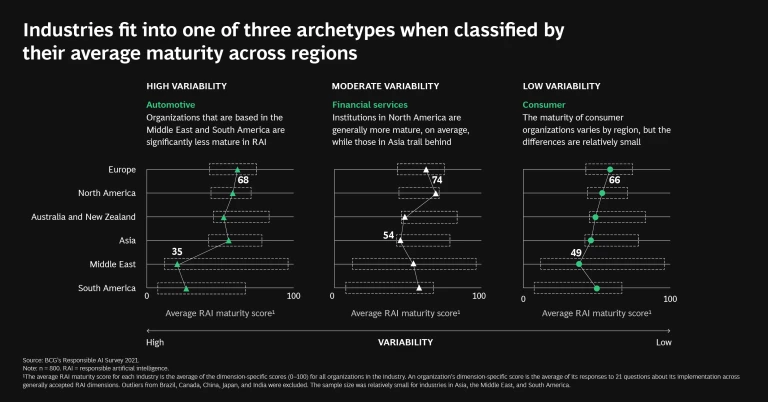

Looking across industries and regions, we found that an organization’s region is a better predictor of its maturity than its industry: Europe and North America have the highest average RAI maturity. In contrast, we found few significant differences in maturity across industries, although RAI leaders are more concentrated in the technology, media, and telecommunications industry and in industrial goods.

Organizations’ Perceptions Often Do Not Match Reality

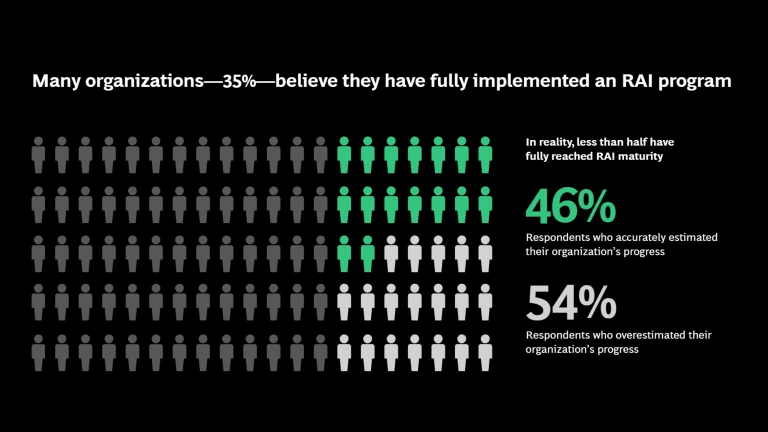

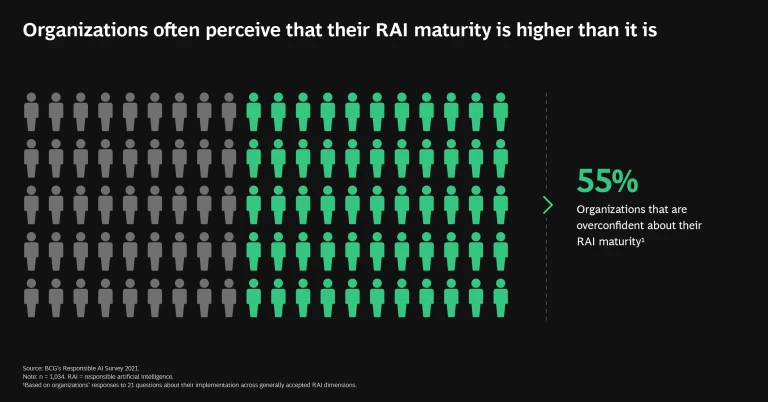

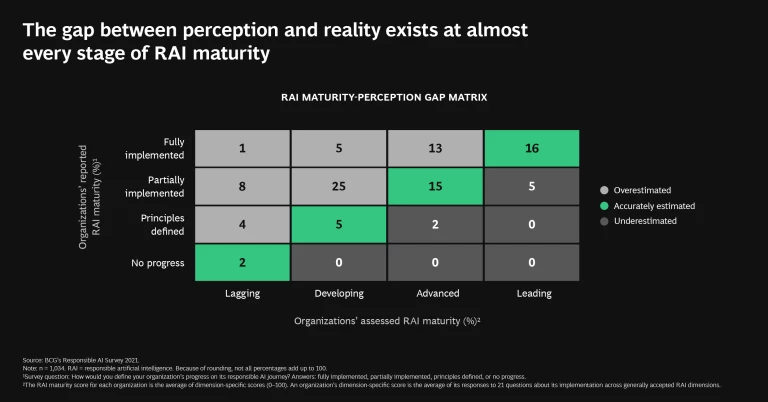

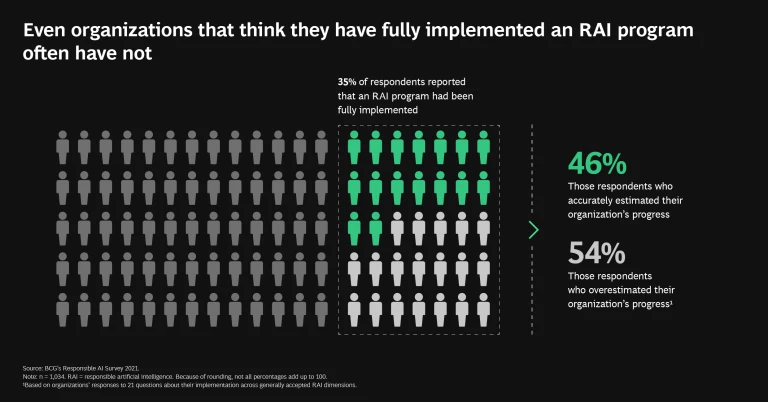

The survey reveals that many organizations overestimate their RAI progress. We asked the executives how they would define their organization’s progress on its RAI journey: no progress (2% of respondents), RAI principles defined (11%), RAI partially implemented (52%), or RAI fully implemented (35%). We then compared each executive’s response with our assessment of the organization’s maturity. Our evaluation was based on respondents’ answers to 21 questions about their implementation across the seven dimensions.

The results are surprising. We found that about 55% of all organizations—from laggers to leaders—are less advanced than they believe. Importantly, more than half (54%) of those that believe they have fully implemented RAI programs overestimated their progress. This group, in particular, is concerning. Because they believe they have fully implemented RAI programs, they are not likely to make further investments, although gaps clearly remain.

We also found that many organizations with advanced AI capabilities are behind in implementing RAI programs. Of the organizations that reported they have developed and implemented AI at scale, fewer than half have RAI capabilities on a par with that deployment. Achieving AI at scale requires not only building robust technical and human-enabling capabilities but also fully implementing an RAI program. For these organizations, falling short of full maturity across all RAI dimensions means that they have still not achieved their perceived level of at-scale AI deployment.

RAI Is Much More Than Risk Mitigation

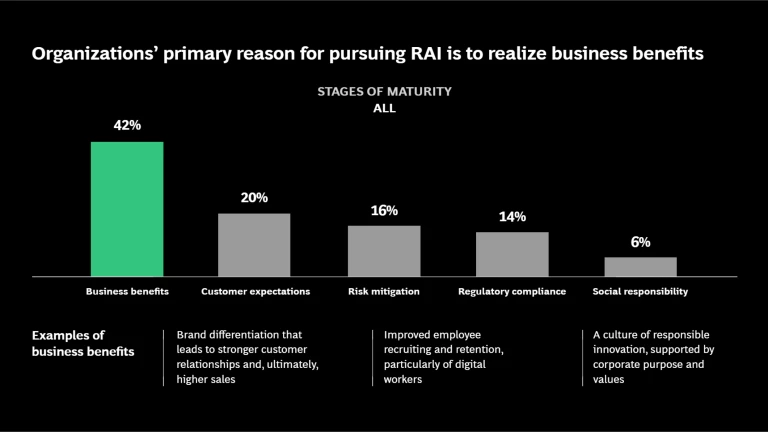

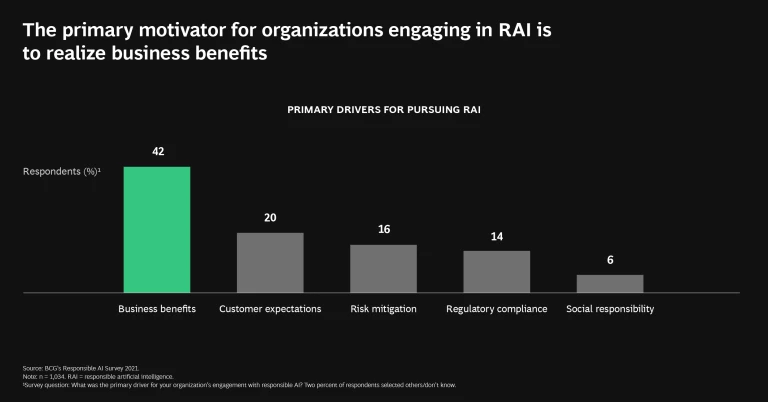

Although C-suite executives and boards of directors are concerned with the organizational risks posed by a lapse of an AI system, we have argued that businesses should not pursue RAI simply to mitigate risk. Instead, organizations should view RAI as an opportunity to strengthen relationships with stakeholders and realize significant business benefits.

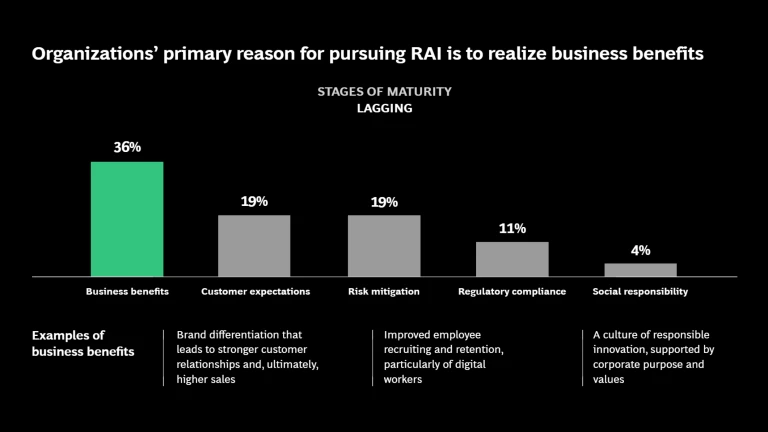

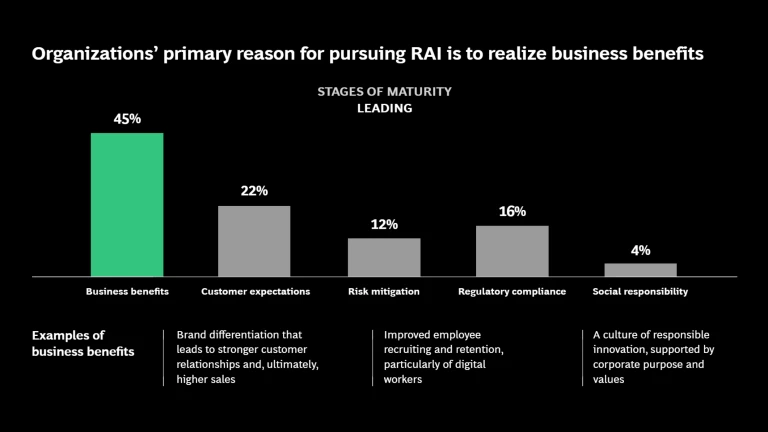

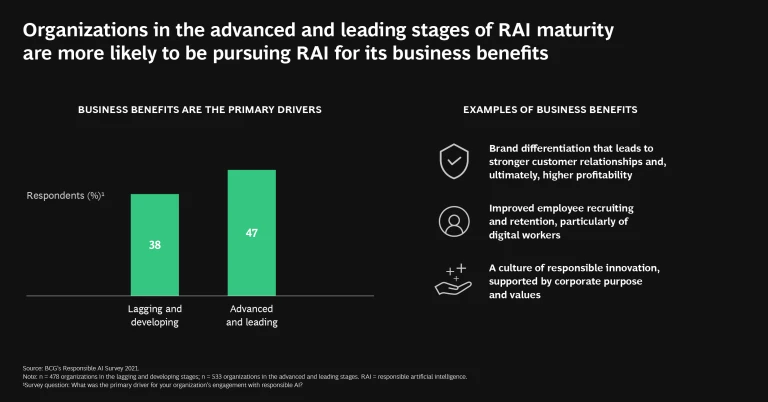

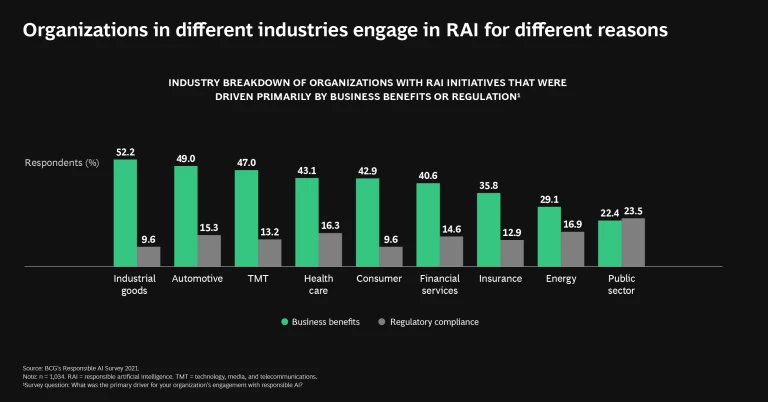

It seems that most organizations agree. When asked to select the primary reason for pursuing RAI, more than 40% chose its potential business benefits—more than twice the percentage that selected risk mitigation. Moreover, we found that as organizations’ RAI maturity grows, so does their motivation to capture business benefits through RAI. Simultaneously, the focus on risk mitigation decreases.

Best Practices for Reaching RAI Maturity

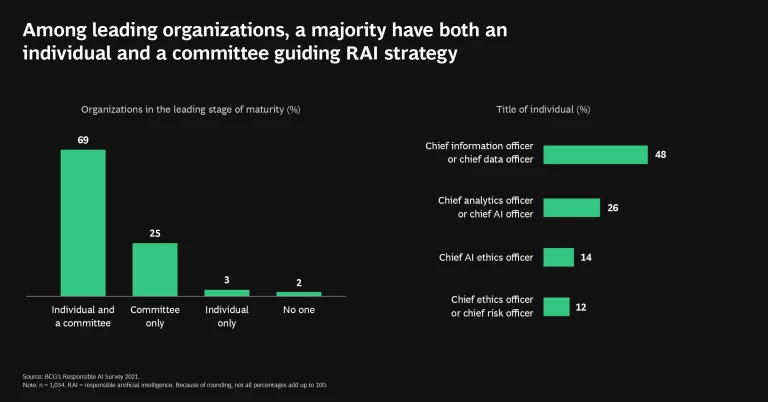

RAI leaders consistently have policies and processes that are fully deployed across their organizations covering all seven RAI dimensions. At these leading organizations, we found several key markers that are indicative of broader RAI maturity.

- Both the individuals responsible for AI systems and the business processes that use these systems adhere to their organization’s principles of RAI.

- The requirements and documentation of AI systems’ design and development are managed according to industry best practices.

- Biases in historical data are systematically tracked, and mitigating actions are proactively deployed in case issues are detected.

- Security vulnerabilities in AI systems are evaluated and monitored in a rigorous manner.

- The privacy of users and other people is systematically preserved in accordance with data use agreements.

- The environmental impact of AI systems is regularly assessed and minimized.

- All AI systems are designed to foster collaboration between humans and machines while minimizing the risk of adverse impact.

Organizations that do not follow these practices, or have not fully deployed them, are most likely not leading in RAI and should examine their RAI efforts more closely. Even organizations that do follow them should keep looking for further opportunities to improve.

For more detail on our survey results, see the following slideshow.

Our Survey Methodology

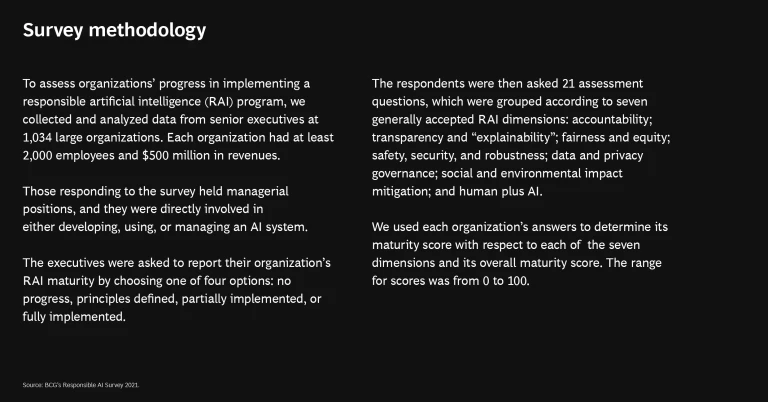

The participants were asked to define their organization’s overall progress in implementing an RAI program by choosing one of four options: no progress, principles defined, partially implemented, or fully implemented. In addition, each of the participants answered 21 assessment questions, grouped according to the seven RAI dimensions: accountability; transparency and “explainability”; fairness and equity; safety, security, and robustness; data and privacy governance; social and environmental impact mitigation; and human plus AI.

We also performed a cluster analysis of the responses to the 21 questions and grouped organizations by their maturity stage. We labeled these groups lagging, developing, advanced, and leading.

In addition, we computed an aggregated maturity score (ranging from 0 to 100) that summarizes each organization’s overall maturity. We also computed maturity scores for each of the seven RAI dimensions on the basis of the corresponding assessment questions. The aggregated and dimension-specific maturity scores allowed us to analyze results across organizations, explore differences across regions and industries, and understand the typical journey that an organization takes as it matures in its RAI implementation.
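
To make the scoring idea concrete, here is a minimal sketch of how dimension-specific and aggregated scores on a 0 to 100 scale could be derived from the 21 answers. It is not the survey's actual scoring method, which the methodology does not spell out. The sketch assumes three questions per dimension, answers coded from 0 to 4, and equal weighting of questions and dimensions; all function and variable names are hypothetical.

```python
from statistics import mean

# The seven RAI dimensions named in the survey methodology.
DIMENSIONS = [
    "accountability",
    "transparency_explainability",
    "fairness_equity",
    "safety_security_robustness",
    "data_privacy_governance",
    "social_environmental_impact",
    "human_plus_ai",
]

QUESTIONS_PER_DIMENSION = 3  # assumption: 21 questions split evenly
MAX_ANSWER = 4               # assumption: answers coded 0 (none) to 4 (full)


def dimension_scores(answers):
    """Turn {dimension: [answers]} into {dimension: score from 0 to 100}."""
    scores = {}
    for dim in DIMENSIONS:
        values = answers[dim]
        if len(values) != QUESTIONS_PER_DIMENSION:
            raise ValueError(f"{dim}: expected {QUESTIONS_PER_DIMENSION} answers")
        if any(not 0 <= v <= MAX_ANSWER for v in values):
            raise ValueError(f"{dim}: answers must be between 0 and {MAX_ANSWER}")
        # Rescale the mean answer to the 0..100 range.
        scores[dim] = 100 * mean(values) / MAX_ANSWER
    return scores


def aggregated_score(scores):
    """Average the seven dimension scores (equal weights are an assumption)."""
    return mean(scores[dim] for dim in DIMENSIONS)


# Hypothetical organization: same answer pattern in every dimension.
example = {dim: [4, 2, 0] for dim in DIMENSIONS}
per_dimension = dimension_scores(example)
overall = aggregated_score(per_dimension)
print(per_dimension["fairness_equity"], overall)
```

With scores like these, an organization's self-reported progress can be set against its assessed maturity, which is the comparison behind the perception gap described in the article.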