Get a jump on new requirements, including the upcoming EU AI Act, by adopting BCG’s Responsible AI Leader Blueprint.

New artificial intelligence regulations are imminent, but organizations can get a head-start by implementing responsible artificial intelligence (RAI) today. As companies deploy transformative AI tools, they must ensure that they introduce these solutions in a responsible way, to mitigate any potential risks to their business and protect consumers. Neglecting to address RAI concerns exposes businesses to reputational damage, legal action, and a loss of consumer trust that is hard to regain. But RAI should not be viewed purely as a defensive gambit—it is also a source of positive value for organizations. The business potential of RAI is substantial: it can lead to better products, increased profitability, improved recruitment and retention of staff, sharper decision making, and a more durable culture of innovation.

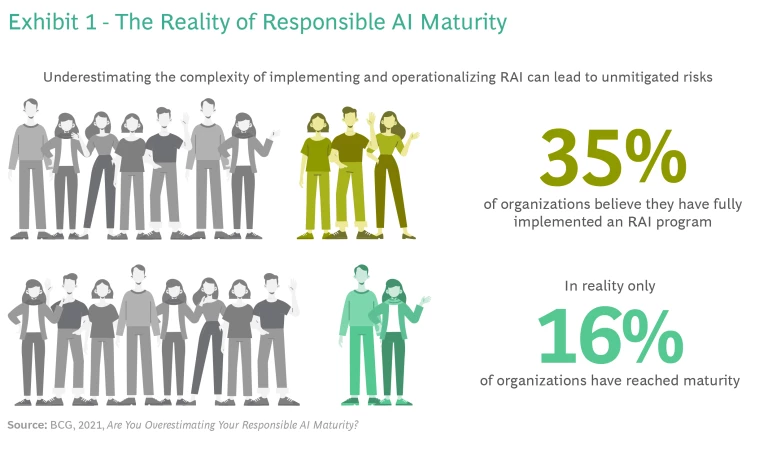

Despite the promise of RAI and the risks of inaction, many organizations have struggled to put RAI principles into practice in a coherent and comprehensive way. And there is a gap between where organizations think they are in their RAI journey and where they really are: 35% of organizations believe they have fully implemented an RAI program, but, in fact, only 16% have reached maturity. (See Exhibit 1.) With the imminent arrival of the European Union’s AI Act, one of the first broad-ranging regulatory frameworks on AI, the failure to successfully implement RAI will have more serious consequences.

It is not just organizations based in the EU that need to pay attention. The regulation will apply to any provider that implements or develops AI systems in the EU or whose AI systems produce outputs that are used in the EU’s jurisdiction, so it will affect many organizations based elsewhere. Moreover, the regulation, which is expected to come into force in 2023, is likely to bear similarities to rules currently being drawn up by other government authorities throughout the world.

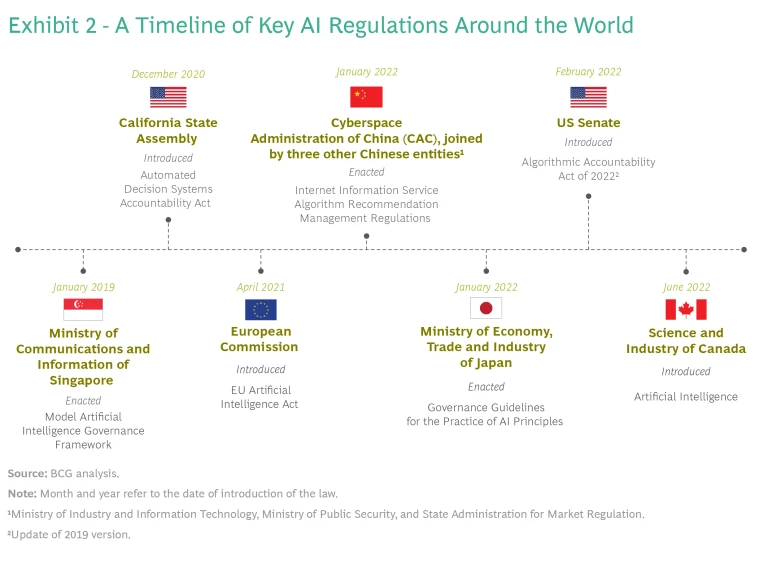

Given the impending heightened focus on new regulations, as well as the potential financial and reputational damage resulting from noncompliance, organizations urgently need to adopt measures that enable them to comply with the requirements of the emerging EU regulation. A comprehensive RAI program, based on BCG’s Responsible AI Leader Blueprint, will allow them to act in accordance with and adapt to the proposed EU AI Act and other regulations that will inevitably follow (such as the Algorithmic Accountability Act of 2022 in the US).

Putting in place a comprehensive program to implement and operationalize RAI throughout an organization takes time, but significant progress can be made with a few basic steps to secure early wins and build confidence in the organization. To position themselves to begin building this framework, organizations should take four key actions: (1) establish responsible AI as a strategic priority with senior-leadership support, (2) set up and empower RAI leadership, (3) foster RAI awareness and culture throughout the organization, and (4) conduct an AI risk assessment.

The Call for Responsible AI Intensifies

The development and adoption of AI have been expanding rapidly, enabling organizations in many industries to transform their capabilities. According to joint research by BCG and the Massachusetts Institute of Technology Sloan Management Review (MIT SMR), global investment in AI reached $58 billion in 2021.

The COVID-19 pandemic contributed greatly to the increased focus on AI, as organizations reacted to the digitization of working arrangements and consumer behavior engendered by the crisis. New applications and developments flourished, accelerating the adoption of AI in sectors such as health care.

While AI has great potential, it also raises concerns related to accountability, transparency, fairness, equity, safety, security, and privacy. An RAI program is one way for organizations to systematically address these challenges. BCG defines RAI as developing and operating artificial intelligence systems that align with organizational values and widely accepted standards of right and wrong, while achieving transformative business impact.

Organizations that implement RAI programs effectively can derive many benefits. RAI differentiates the brand, strengthens customer acquisition and retention, and improves competitive positioning, thereby leading to higher long-term profitability. It also assists with workforce recruitment and retention, as increasingly socially conscious employees want to work for organizations they can trust and believe in. Moreover, it gives rise to a culture of innovation that can be sustained over time. In general, investing in the development of a mature RAI program leads both to fewer AI failures and to more success in the scaling of AI, which delivers long-term sustainable business value for the whole organization.

Despite the clear potential and burgeoning investment in this field, however, many organizations have struggled to deploy or scale RAI in practice. According to a BCG survey, 85% of organizations with AI solutions have defined RAI principles to shape product development. With few exceptions, though, the good intentions have yet to be translated into rigorous practical outcomes. Only a small fraction (20%) of organizations have fully implemented these principles. Those that fall behind face significant long-term risks.

The widespread failure to implement RAI limits the overall impact of AI, preventing organizations from capturing its full business potential and thus limiting their capacity for growth. Moreover, major risks for organizations, customers, and society at large go unaddressed.

AI is likely to be one of the global developments with the greatest impact during the coming decades. Consequently, there is a widespread and growing expectation within society that AI products should be built in a responsible and ethical way. When the EU’s General Data Protection Regulation (GDPR) took effect in 2018, it raised awareness of privacy requirements throughout Europe and across the world. Soon, consumers began to demand an ecosystem-wide change with stronger privacy protections in consumer products and services. This development laid the foundations for RAI through requirements such as the right to explanation.

In the face of such expectations, several instances of troubling consequences of AI use have hit the headlines in recent years, posing reputational risk for the organizations involved. For example, certain algorithms have resulted in discriminatory practices owing to an underlying prejudice in the input data.

In one case, an algorithm used by Amazon as a recruitment tool was shown to be biased against women because the AI system had learned from data submitted by mostly male applicants over a ten-year period; as a result, the algorithm taught itself that male applicants were preferable. When these issues were discovered, Amazon stopped using the AI solution, according to a 2018 report by Reuters.

In a case described by Axios in 2020, students in the UK were unable to take the usual A-level examinations because of the pandemic, so an algorithm was used to award scores instead. The algorithm was found to be biased toward students from wealthier schools, and the results had to be scrapped.

The Advent of Comprehensive AI Regulation

To date, more than 60 countries as well as some international organizations have approved voluntary principles and standards to guide AI usage and development. Two prominent examples are the OECD Principles on Artificial Intelligence and the Draft Risk Management Framework by the National Institute of Standards and Technology in the US. (See Exhibit 2.) Formal laws have not yet been forthcoming, however. Governments are now moving to rectify this situation, driven at least in part by the highly publicized lapses of AI systems and the harms they can create for citizens and society.

The EU AI Act: The First Broad Regulatory Framework on AI

One consequential development is the proposed EU AI Act. The proposal, which was unveiled in April 2021, sets out different rules depending on the level of risk attributed to a particular use of AI and defines four such risk levels.

The proposed act imposes stiff fines that constitute a material risk to a business, reaching a maximum of 6% of global annual turnover or €30 million (whichever is higher) for those in breach of its provisions.
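
To make the scale of that exposure concrete, the ceiling described above can be sketched as a simple calculation. This is only an illustration of the "whichever is higher" rule as stated in this article, not legal guidance; the function name `max_fine_eur` and the sample turnover figures are hypothetical.

```python
# Illustrative sketch only: the cap on fines as described in the text above,
# i.e. the higher of 6% of global annual turnover or EUR 30 million.
# The function name and the sample figures are hypothetical.

FLAT_CAP_EUR = 30_000_000   # EUR 30 million
TURNOVER_CAP_PERCENT = 6    # 6% of global annual turnover


def max_fine_eur(global_annual_turnover_eur: float) -> float:
    """Return the maximum fine, in euros, for a given global annual turnover."""
    if global_annual_turnover_eur < 0:
        raise ValueError("turnover must be non-negative")
    turnover_based = global_annual_turnover_eur * TURNOVER_CAP_PERCENT / 100
    return max(turnover_based, FLAT_CAP_EUR)


if __name__ == "__main__":
    for turnover in (100_000_000, 500_000_000, 1_000_000_000):
        print(f"turnover EUR {turnover:,} -> max fine EUR {max_fine_eur(turnover):,.0f}")
```

Under this reading, the flat €30 million figure is the binding ceiling for any organization with global annual turnover below €500 million; above that level, the 6% figure takes over and the exposure grows with revenue.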

The regulation is expected to enter into force in 2023 with a transitional period extending to 2024, during which the standards would be developed and mandated, and governance structures would be established. By late 2024, the regulation could become applicable with requirements for conformity assessments to validate that AI products meet the requirements defined in the regulation. Academic researchers have already started proposing conformity assessment frameworks, such as capAI, to help organizations achieve compliance with these requirements.

Leaders need to use this transition period to prepare their organizations for the imminent regulation, working to understand the full range of its implications and taking the necessary steps in response. Given the breadth of the requirements, organizations cannot wait to act until the law goes into effect. It is not just European organizations that must meet this regulatory challenge. The regulation has a global scope because it will apply to any provider that puts an AI system into service in the EU or any system that produces outputs used in the EU. It is worth noting that GDPR also had a global impact even though it involved a narrower geographical scope, as it was easier for multinational companies to adopt a single standard rather than try to manage a global patchwork. Organizations might adopt the same strategy for the EU AI Act.

To satisfy regulatory requirements, organizations will need a comprehensive RAI program. Although the requirements are still evolving, several elements will be common across jurisdictions, including fairness and the reliability and conceptual soundness of models. (See Exhibit 3.) Implementation details, such as the specific sections to be included in documentation, may evolve, but the fundamental processes and governance that are needed to ensure compliance will not change and are the hardest and most time-consuming to build. Organizations need to start right away.

Introducing a Holistic Responsible AI Leader Blueprint

BCG’s approach to implementing RAI is based on a pragmatic yet holistic framework that supports companies’ efforts to comply with the proposed EU AI Act and comparable regulations. Once an RAI program is put in place, it also reduces nonregulatory risks, helps seize opportunities for growth, and ensures that AI embodies a company’s corporate values. Moreover, a well-defined AI compliance structure facilitates a nimbler response to change and uncertainty in the regulatory environment.

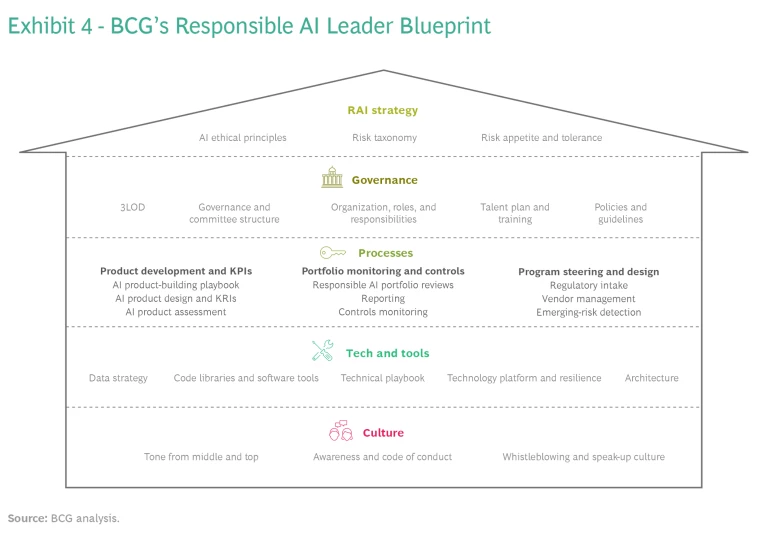

BCG’s holistic Responsible AI Leader Blueprint consists of five dimensions: RAI strategy, governance, processes, tech and tools, and culture. (See Exhibit 4.)

RAI Strategy. A strategic plan makes the goals of RAI transparent, helping leaders throughout the organization to engage with and support those objectives. It also establishes a values-based foundation upon which the other components of the AI Blueprint can be built.

Governance. A governance structure details clear roles, expectations, and accountability for the design, development, and use of AI. It also describes how AI governance will be integrated with the broader risk management framework of the organization.

Processes. Key procedures operationalize the strategy, ensuring that its goals and principles reach the working teams and are implemented on a practical level. AI product review and monitoring, portfolio monitoring and controls, and overall program steering ensure that RAI criteria are being met.

Tech and Tools. The technical environment also needs to support the RAI strategy. Data strategy, code libraries and software tools, and technical guidelines must be in line with an organization’s RAI ambitions.

Culture. The right culture needs to be instituted throughout the organization so that AI compliance risks can be identified as early as possible and handled effectively. The importance of RAI must be highlighted in a company’s AI code of conduct. (See, for example, BCG’s AI Code of Conduct.) Employees need to feel empowered to openly raise any concerns to their leaders, fostering a “speak-up” culture.

Four Immediate Steps to Implement and Operationalize RAI

To meet the requirements of the upcoming regulation, companies must quickly translate the Responsible AI Leader Blueprint into action. There are several important actions organizations can take now to protect their business and assume a position at the forefront of RAI, even before the EU AI Act is approved. These measures require limited financial and time investment but produce a substantial impact—they position organizations to begin implementing and operationalizing RAI effectively. Here are the four recommended next steps:

- Establish RAI as a strategic priority with senior-leadership support. Set the tone from the top by communicating RAI priorities across the company and fostering ethical leadership.

- Set up and empower RAI leadership. Identify accountable owners for RAI implementation and give them the power to develop a comprehensive program.

- Promote RAI awareness and culture. Create and integrate an AI Code of Conduct with a clear connection to the company’s overall purpose and values, and nurture a speak-up environment in which issues can be identified, raised, addressed, and monitored in a timely manner.

- Conduct an AI organizational-maturity assessment. Review the current starting point of the organization—its AI development and corresponding RAI standards, controls, and tools—to inform the future RAI strategy.

Seizing the First-Mover Advantage

The EU AI Act is likely to be followed by similar regulations throughout the world, all helping to shape the definition and practical implementation of RAI. The so-called Brussels effect—referring to the significant global impact of EU regulations—continues to cast its spell.

To get a jump on the expected raft of forthcoming regulations, organizations looking to be early leaders in this area should implement a holistic and pragmatic RAI governance and compliance framework. This will not only protect them but also enable them to take full advantage of the AI era.