Today’s AI agents have the potential to revolutionize business processes across the board. Our experience shows that these agents, enabled by recent advances in computing power and the rise of AI-optimized chips, can reduce human error and cut employees’ low-value work time by 25% to 40%, and even more in some cases. They work 24/7 and can handle data traffic spikes without extra headcount. And the AI-powered workflows they create can accelerate business processes by 30% to 50% in areas ranging from finance and procurement to customer operations.

AI agents are not just improving workflows; they’re redefining how businesses operate. Recent breakthroughs in deep learning, generative AI, and autonomous systems, augmented by a proliferation of enterprise data from digital devices and collaboration tools, have dramatically increased their ability to handle complex decision making in real time. As a result, these intelligent virtual assistants are transforming core technology platforms like CRM, ERP, and HR from relatively static systems to dynamic ecosystems that can analyze data and make decisions without human intervention, optimizing and adapting instantaneously.

But implementing these new agents is a complex process. It requires system interoperability, high-quality data, redesigned enterprise platforms, and agentic designs with the right level of autonomy, constraints, and goals. To meet these challenges, BCG has developed a step-by-step playbook for agent-based workflow transformation.

Driving Value with Agentic AI

While both traditional workflow automation and agentic AI focus strongly on efficiency, AI agents are also capable of intelligence, adaptability, and continuous learning. They can take autonomous, goal-directed actions and process and optimize workflows at an unprecedented rate, without latency issues.

Soon AI-powered, interconnected agents embedded into workflows will even be able to adapt dynamically to changes in the environment, detecting and fixing issues independently and then moving to prevent them from happening again. An agent working with SAP to manage the supply chain, for example, might notice that costs are increasing and trigger its finance platform to reassess forecasts.

This dynamic adaptability means companies that embrace agentic AI now will gain a competitive edge in productivity, responsiveness, and innovation.

How Organizations Are Using Agentic AI to Make an Impact

Businesses across industries and functions are integrating agentic AI into a variety of workflows, sometimes leveraging platforms such as Salesforce’s Einstein AI and AgentForce, which use predictive analytics and automation to enhance sales, marketing, and customer service workflows. In addition, ServiceNow’s AI agents and Now Assist capabilities are automating IT, HR, and operational processes, reducing manual workloads by up to 60%. Agentic AI is also being used successfully in areas such as finance and fraud detection. The results are impressive, including the following:

- Workflow Orchestration in ERP/CRM Platforms. AI agents are auto-resolving IT service tickets, rerouting supplies to cover inventory shortages, and triggering procurement flows, all without the need for human input. Early adopters are seeing 20% to 30% faster workflow cycles and significant reductions in back-office costs.

- Customer Service and Case Management. AI agents are handling insurance claims from end to end, including document validation, triage, and escalation or payout. As a result, claim handling time has been cut in some instances by 40%, and net promoter scores—a measure of how likely a customer is to refer an insurer to an acquaintance—have increased by 15 points (on a scale that ranges from –100 to 100).

- Sales and Marketing Automation. AI-driven campaign managers are testing, adapting, and optimizing consumer touchpoints in real time. One B2B SaaS firm experienced a 25% increase in lead conversion after implementing agentic campaign routing.

- Finance and Risk Monitoring. AI agents are autonomously detecting anomalies, forecasting cash needs, and recommending reallocation across accounts. As a result, risk events have been reduced by 60% in pilot environments.

Implementing Controls and Governance Around AI Agents

This complex new digital technology also creates hazards. It naturally increases the potential for cybersecurity threats, since it creates new or expanded attack surfaces that malicious actors can leverage; for instance, by hijacking or misrouting AI agents. Potential bias is also a well-known AI concern, along with governance challenges, as without oversight, even well-constructed agents can go off course.

Companies must therefore find the right balance between AI autonomy and human control. Too much leeway leads to risk, while too little renders the agent ineffective. This is especially true in the early days of implementation, which should include constant monitoring and clear guardrails, escalation paths, and feedback loops, all of which require thoughtful design and tooling.

In addition, businesses often fail to assign responsibility for autonomous AI agents, meaning that no one has clear accountability if something goes wrong. Explainability and auditability are therefore crucial, especially within regulated industries. Decisions made in a black box will pose both legal and reputational risk.

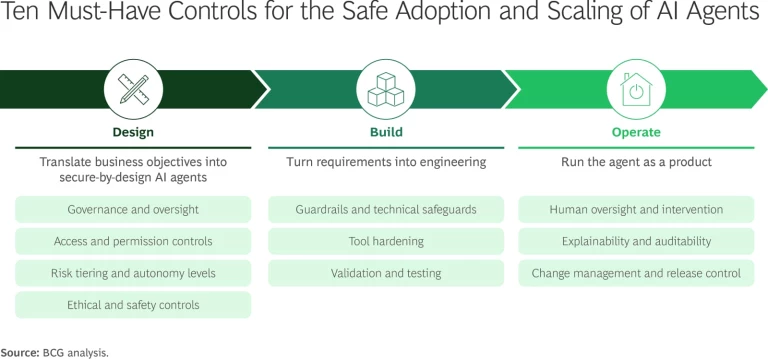

To scale AI agents safely and credibly, companies should adopt a coherent set of controls across the value chain, embedding them from day one rather than adding them after incidents occur. (See the exhibit.) These controls cannot be afterthoughts. They must inform scope, architecture, and operating habits to create clear accountability and prevent small errors from compounding. The following sections outline these controls by phase: design, build, and operate.

Design. Organizations should translate business objectives into a secure-by-design AI-agent concept with explicit ownership, least-privilege access, clear autonomy thresholds, and hard ethical boundaries.

- Governance and Oversight. Companies should create a virtual control tower that tracks every AI agent deployed and assigns each agent a clear owner. For example, a retail company could assign a manager for an AI that’s responsible for customer refunds, ensuring that the manager reviews any unusual refunds over $500.

- Access and Permission Controls. Businesses should treat agents as they would new employees, giving them access to only what they need. For instance, a fintech startup testing an AI chatbot on internal data might discover that the agent can query payroll. If the startup has correctly established role-based access, however, the agent will see only realistic test data in an isolated “sandbox” environment.

- Risk Tiering and Autonomy Levels. Companies should classify each agent and its actions, set monetary and operational thresholds, require approvals or dual control for high-impact moves, and cap agents’ daily spending. For example, an agent should be able to make automatic refunds only up to a defined limit, with manager approval above that amount and a daily budget ceiling.

- Ethical and Safety Controls. The company should bake in its values as hard rules. For instance, a media company could ensure that its content-generation AI has a permanent block on political endorsements so the agent never creates candidate profiles that could be perceived as biased.
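
The risk-tiering rule described above (automatic refunds up to a limit, manager approval beyond it, and a daily ceiling) can be sketched in a few lines of Python. This is a minimal illustration rather than a reference implementation: the class name `RefundPolicy`, the owner label, the $500 automatic limit (borrowed from the retail example above), and the $1,000 daily cap are all assumptions chosen for the example.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    AUTO_APPROVE = "auto_approve"
    NEEDS_MANAGER = "needs_manager"
    BLOCKED_DAILY_CAP = "blocked_daily_cap"


@dataclass
class RefundPolicy:
    """Hypothetical policy record for one agent; all values are illustrative."""
    owner: str                 # the accountable human owner of the agent
    auto_limit: float          # largest refund the agent may issue on its own
    daily_cap: float           # ceiling on total automatic refunds per day
    spent_today: float = 0.0   # running total of automatic refunds

    def evaluate(self, amount: float) -> Decision:
        if amount <= 0:
            raise ValueError("refund amount must be positive")
        # High-impact moves are escalated to the named owner.
        if amount > self.auto_limit:
            return Decision.NEEDS_MANAGER
        # Even small refunds stop once the daily budget would be exceeded.
        if self.spent_today + amount > self.daily_cap:
            return Decision.BLOCKED_DAILY_CAP
        self.spent_today += amount
        return Decision.AUTO_APPROVE


policy = RefundPolicy(owner="refunds-manager", auto_limit=500.0, daily_cap=1000.0)
results = [policy.evaluate(a) for a in (400.0, 750.0, 500.0, 200.0)]
for decision in results:
    print(decision.value)
```

With these assumed limits, the $400 and $500 refunds are approved automatically, the $750 refund is routed to the manager, and the final $200 refund is blocked because it would push the day's automatic total past the cap.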

Build. Businesses should turn their requirements into engineering through guardrails, sandboxing, logging, and rigorous validation so that each agent is safe, observable, and resilient before real users see it.

- Guardrails and Technical Safeguards. Companies should always include a kill switch, like a fire extinguisher one hopes will never be needed. A health care provider, for example, might hit the kill switch if an AI scheduling agent started double-booking critical MRI machines.

- Tool Hardening. Businesses should not just connect tools but wrap every action in strict schemas and safe defaults so that mistakes don’t cascade. This should include “allow” lists, input checks, timeouts, and spending caps. For example, when a procurement agent touches the ERP system, the schema should force the use of valid supplier IDs and currency, cap amounts, and block free-text writes—stopping risky transactions before they occur.

- Validation and Testing. Businesses should run “red team” drills, assigning a group to try to trick the AI into doing something harmful, just as banks hire “ethical hackers.” For instance, the group might try convincing the procurement AI to approve absurd purchases, such as a $10,000 espresso machine. In addition, businesses should use sandbox testing as one might train a new driver: letting them drive only in the parking lot until they’re ready to go onto the highway.
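
The tool-hardening idea above (valid supplier IDs and currencies, capped amounts, no free-text writes) could look something like the following sketch. The supplier IDs, currency list, $5,000 cap, and the names `PurchaseOrder` and `validate_order` are hypothetical; a real deployment would draw these values from the ERP system and its own schema tooling.

```python
from dataclasses import dataclass

# Hypothetical allow lists and limits; real values would come from the ERP.
ALLOWED_SUPPLIERS = {"SUP-001", "SUP-002"}
ALLOWED_CURRENCIES = {"USD", "EUR"}
MAX_ORDER_AMOUNT = 5_000.00
EXPECTED_FIELDS = {"supplier_id", "currency", "amount"}


@dataclass(frozen=True)
class PurchaseOrder:
    supplier_id: str
    currency: str
    amount: float


def validate_order(raw: dict) -> PurchaseOrder:
    """Reject anything outside the schema before it reaches the ERP."""
    extra = set(raw) - EXPECTED_FIELDS
    if extra:
        # Unknown or free-text fields are never passed through.
        raise ValueError(f"unexpected fields: {sorted(extra)}")
    missing = EXPECTED_FIELDS - set(raw)
    if missing:
        raise ValueError(f"missing fields: {sorted(missing)}")
    supplier, currency, amount = raw["supplier_id"], raw["currency"], raw["amount"]
    if not isinstance(supplier, str) or supplier not in ALLOWED_SUPPLIERS:
        raise ValueError("supplier is not on the allow list")
    if not isinstance(currency, str) or currency not in ALLOWED_CURRENCIES:
        raise ValueError("currency is not supported")
    if isinstance(amount, bool) or not isinstance(amount, (int, float)):
        raise ValueError("amount must be a number")
    if not 0 < amount <= MAX_ORDER_AMOUNT:
        raise ValueError("amount is outside the permitted range")
    return PurchaseOrder(supplier, currency, float(amount))


def is_rejected(raw: dict) -> bool:
    """Return True when the hardened wrapper refuses the request."""
    try:
        validate_order(raw)
    except ValueError:
        return True
    return False


# A red-team style probe: the $10,000 espresso machine from the example above.
espresso = {"supplier_id": "SUP-001", "currency": "USD", "amount": 10_000}
print(is_rejected(espresso))  # True
```

The same `is_rejected` helper can double as a simple red-team harness: each adversarial request the test group invents becomes one more input that must come back rejected.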

Operate. Companies should run the agents as products with empowered human oversight, monitoring and logging, explainability, and disciplined change management.

- Human Oversight and Intervention. Humans should not just be in the loop, aware of all the agents’ activities, but alert and empowered to intervene as well. For example, a price-adjusting AI might accidentally drop prices to $0.01 overnight, and the error could persist for hours if the night shift has no clear process to stop it. To close this gap, a company could have trained staff with override authority on duty 24/7.

- Explainability and Auditability. Businesses should log all decisions and rationales so that, should something go wrong, operators and auditors can reconstruct what happened and why. This is essential in regulated industries and reduces legal and reputational risk from opaque black-box behavior.

- Change Management and Release Control. Companies should adopt structured change-management practices for AI agents to ensure that every update to prompts, tools, data sets, or policies is version-controlled and traceable. And before wide release, companies should run shadow rollouts, deploying the new version in parallel to monitor its performance against the old system without affecting operations. In addition, a tested rollback plan must be in place so that if issues arise, the company can quickly revert its systems to a stable version.

For instance, an industrial goods manufacturer deploying an AI agent for predictive maintenance on factory equipment could first shadow-test a new model on a limited set of machines. If the updated agent began over-predicting failures, causing unnecessary downtime or maintenance costs, the company could immediately roll back to the previous version while investigating the root causes. It could thereby capture performance improvements without introducing hidden risks to production output.
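
The shadow-rollout check in the manufacturing example can be sketched as follows. It is a simplified illustration under stated assumptions: each model version is reduced to a function that flags a sensor reading as a predicted failure, the readings and thresholds are invented, and the decision rule (hold back the candidate if it raises the alert rate by more than a tolerance) is one possible criterion, not a prescribed one. A production comparison would typically also check predictions against actual failure outcomes.

```python
from typing import Callable, Sequence

# A model maps one sensor reading to True (failure predicted) or False.
Model = Callable[[float], bool]


def shadow_compare(current: Model, candidate: Model,
                   readings: Sequence[float], max_extra_rate: float) -> str:
    """Run both versions on the same inputs; only `current` drives real actions."""
    if not readings:
        raise ValueError("need at least one reading")
    current_alerts = sum(current(r) for r in readings)
    candidate_alerts = sum(candidate(r) for r in readings)
    # Share of readings on which the candidate raises additional alerts.
    extra_rate = (candidate_alerts - current_alerts) / len(readings)
    if extra_rate > max_extra_rate:
        return "keep_current"       # candidate over-predicts; do not release
    return "promote_candidate"


def current(reading: float) -> bool:
    return reading > 0.8            # hypothetical production threshold


def candidate(reading: float) -> bool:
    return reading > 0.5            # hypothetical, more sensitive update


readings = [0.2, 0.55, 0.6, 0.7, 0.9, 0.95, 0.3, 0.65, 0.4, 0.85]
print(shadow_compare(current, candidate, readings, max_extra_rate=0.1))
```

On this invented data the candidate flags seven of ten readings against three for the production version, so the sketch returns `keep_current`, mirroring the over-prediction scenario described above.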

Confronting Implementation Challenges

The three biggest challenges that companies encounter as they take on agentic AI transformations center on talent, quick wins, and the use of legacy technologies.

Finding and Developing the Right Talent. Agentic AI requires a mix of advanced technical talent, such as AI-prompt engineers, AI or machine learning specialists, and data engineers, as well as business translators who can map AI use cases to workflows. Most organizations underestimate this need. For example, an insurer that is setting up an AI innovation team may initially staff it with only the existing data-scientist team; however, it will quickly realize it needs domain experts who understand claims processing in depth and must embed them into the team to gain traction.

Delivering Early Value to Build Momentum. Large AI programs often stall because they aim too high at the start. Executives want to see tangible benefits quickly; without early proof points, investment and enthusiasm can fade. One company tried to launch an enterprise-wide “AI assistant for every employee” campaign, but the scope was too broad, and progress was slow. As a result, the company pivoted to a much narrower first win: an AI agent that automated vendor onboarding. Within three months, it had cut onboarding time by 40%, giving leadership the confidence to fund broader use cases.

Integrating with Legacy Technologies. Many companies run on complex, decades-old infrastructure that was not designed to support autonomous AI agents. Integration with such legacy technology can result in infrastructure that is brittle, expensive, and slow. Companies should therefore use AI as a smart middleware layer that translates between modern agent interfaces and legacy systems; for example, as large-language-model-powered connectors that auto-generate APIs from old codebases. In some cases, businesses might wrap legacy workflows with AI-driven automation so that agents can operate through existing user interfaces and processes, delivering quick wins without the need for deep re-platforming.

Real Transformation Means AI-First

AI doesn’t just automate workflows—it transforms them. As enterprises move beyond AI-augmented workflows and toward AI-orchestrated execution, they’ll set goals such as autonomously managed operations, real-time adaptation, and continuously optimized processes with minimal human oversight.

However, effective transformation requires businesses to integrate AI-first workflow execution into their business operations and fully embrace the transformation. This means treating AI as a product—assigning someone design authority over the agents’ processes, implementing control mechanisms, and creating human-in-the-loop fallbacks. Accomplishing this will require structural and cultural change, including:

- Platform Re-Architecture. Moving from static APIs to event-driven or agent-compatible infrastructure, such as adopting a software framework or open agent architectures to help integrate AI agents and legacy technologies.

- Operating Model Shift. Embedding agents into core value chain operations, not just the edge (such as the helpdesk).

- AI Talent Strategy. Hiring or training teams that can design agent ecosystems, not just models.

AI Orchestration Is the Future

AI-assisted processes are no longer enough. The future should be AI-orchestrated—with AI agents learning, adapting, and running enterprise processes in real time. Companies that embrace this change decisively will gain a competitive edge in productivity, responsiveness, and innovation, leading in a landscape where AI no longer just informs decisions, but makes them.