In recent years, artificial intelligence has left the machine room and entered the world of mainstream business. Within the next five years, AI will have a major impact on all industries, according to research conducted by BCG and MIT Sloan Management Review. The research found that more than 70% of executives expect AI to play a significant role at their companies.

Today’s AI algorithms already support remarkably accurate machine sight, hearing, and speech, and they can access global repositories of information. AI performance continues to improve, thanks to deep learning and other advanced AI techniques, a staggering level of growth in data, and continuing advances in raw processing power.

These developments have led to an explosion in AI-enabled business applications, analogous to the Cambrian era, when the development of eyesight contributed to a remarkable worldwide increase in species diversity.

As always, this new era will have winners and losers. But our research with MIT suggests that if current patterns continue, the separation between the two could be especially dramatic and unforgiving. The data revealed markedly different levels of AI understanding and adoption even within the same industry. In insurance, for instance, China’s Ping An Group started AI development five years ago and is now incorporating the technology in various services, while other insurers are just starting to experiment with the simplest applications. Overall, executives from many companies reported that their organizations lacked a basic

As a starting point, this report aims to provide an intuitive and practical understanding of AI. (See “Ten Things Every Manager Should Know About Artificial Intelligence.”) At a deeper level, it also discusses many current and potential use cases for AI and examines the impact of AI on industry value pools, the future of work, and the pursuit of competitive advantage. Finally, it offers some practical guidance on how to introduce and spread AI within large organizations.

AI Is Not an Off-the-Shelf Solution

AI is not plug and play. Companies cannot simply “buy intelligence” and apply it to their problems. Although elements of AI are available in the market, the hard work of managing the interplay of data, processes, and technologies happens in-house.

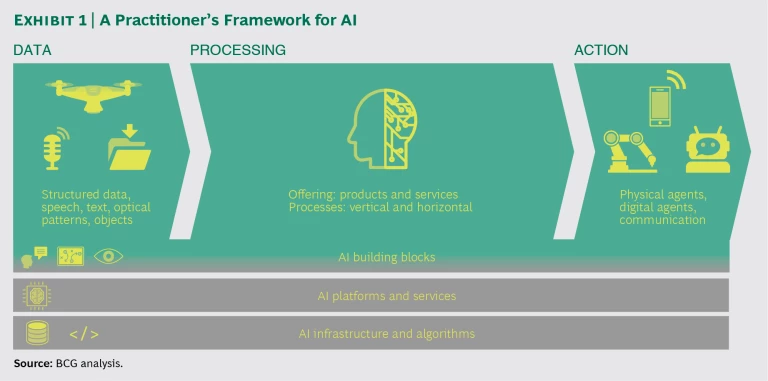

The conceptual framework for putting AI to work is fairly intuitive. (See Exhibit 1.) In a nutshell, AI algorithms absorb data, process it, and then generate actions. The process depends on proper integration of several layers of technology, however, and identifying a specific path from data to action often bedevils companies.

From Data to Action

The data processing needs of AI differ from those of big data or traditional data analytics in a couple of fundamental ways:

- Data, Training, and Processing. Naked AI algorithms are simple lines of computer code. They are not natively intelligent, and they require sensory input and feedback in order to develop intelligence. For the foreseeable future, AI training will require company-specific data and effort. Data scientists must feed machines lots of data to properly weight countless correlations and connections, ultimately creating an algorithm whose intelligence is limited to that specific realm of data. This classic inductive approach to learning explains why AI is often described as data-hungry.

- Action. A trained algorithm can accept live data and deliver actions—a credit score decision and its automated delivery to a customer, for example, or a cancer diagnosis based on a medical image, or a left-hand turn into oncoming traffic by a driverless car. Although this data-to-action process does not differ from the workings of standard computer programs, an AI system continues to learn and transform itself. The data is thus a source of both action and self-improvement—as it is for a business executive, who makes decisions based on facts and uses those facts to refine future decisions.
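The train-then-act loop described in these two points can be sketched in a few lines. The example below is a minimal illustration, not any vendor's or company's method: it assumes a hypothetical credit decision with two invented, normalized input features (`income_score` and `debt_ratio`) and uses plain logistic regression trained by stochastic gradient descent. The same `update` call serves both initial training on historical records and continued learning from live outcomes, which is what lets the data act as a source of both action and self-improvement.

```python
import math


class OnlineCreditModel:
    """Logistic regression updated one example at a time (stochastic gradient descent).

    Hypothetical illustration: features are [income_score, debt_ratio], both scaled to 0..1.
    """

    def __init__(self, n_features: int, learning_rate: float = 0.5) -> None:
        self.weights = [0.0] * n_features
        self.bias = 0.0
        self.learning_rate = learning_rate

    def predict_proba(self, x: list[float]) -> float:
        """Estimated probability that the applicant repays."""
        z = self.bias + sum(w * xi for w, xi in zip(self.weights, x))
        return 1.0 / (1.0 + math.exp(-z))

    def act(self, x: list[float], threshold: float = 0.5) -> str:
        """Turn live data into an action: approve or decline."""
        return "approve" if self.predict_proba(x) >= threshold else "decline"

    def update(self, x: list[float], repaid: int) -> None:
        """Learn from one observed outcome (1 = repaid, 0 = defaulted)."""
        error = self.predict_proba(x) - repaid  # gradient of log-loss with respect to z
        self.bias -= self.learning_rate * error
        self.weights = [w - self.learning_rate * error * xi for w, xi in zip(self.weights, x)]


# Training: feed historical, company-specific records (invented here) repeatedly.
history = [
    ([0.9, 0.1], 1), ([0.8, 0.2], 1), ([0.7, 0.1], 1),
    ([0.1, 0.9], 0), ([0.2, 0.8], 0), ([0.1, 0.7], 0),
]
model = OnlineCreditModel(n_features=2)
for _ in range(200):
    for features, outcome in history:
        model.update(features, outcome)

# Action: score a live applicant.
applicant = [0.85, 0.15]
decision = model.act(applicant)

# Self-improvement: once the real outcome is known, it becomes new training data.
model.update(applicant, 1)
```

Real systems would retrain on far larger data sets and guard against drift, but the structure (score, act, observe, update) is the same.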

Setting up the data-to-action process is hard work. Companies cannot effectively buy it in the marketplace, and those that try to avoid the work or take shortcuts will be disappointed. The MIT Sloan Management Review article, which BCG co-authored, cites one pharma executive who described the products and services that AI vendors provide as “very young children.” The vendors “require us to give them tons of information to allow them to learn,” he said, reflecting his frustration. “The amount of effort it takes to get the AI-based service to age 17 or 18 or 21 does not appear worth it yet. We believe the juice is not worth the squeeze.”

For the foreseeable future, most companies will need to rely on internal data scientists to find, collect, collate, and create data sources and to develop and train company-specific AI systems. Companies can, of course, outsource an entire process or activity—such as an HR task—together with all of the data, to a service provider. But by outsourcing such responsibilities to a vendor that serves multiple clients, companies forfeit any chance to gain a competitive advantage.

The Foundations of AI

Fortunately, companies do not need to develop all of their AI machinery internally. Supporting platforms and services are available in the market. Companies can rent raw computing power in the cloud or deploy it on the premises with specific hardware that can process many tasks in parallel—an essential capability for AI techniques such as deep neural networks. Companies can also access rapidly developing AI data architectures based on open-source code. Most cutting-edge AI algorithms are available in the public domain, and leading scientists have pledged to continue to publish and open-source their work on these algorithms. In addition, businesses have made AI platforms, such as Google’s TensorFlow, available as a service.

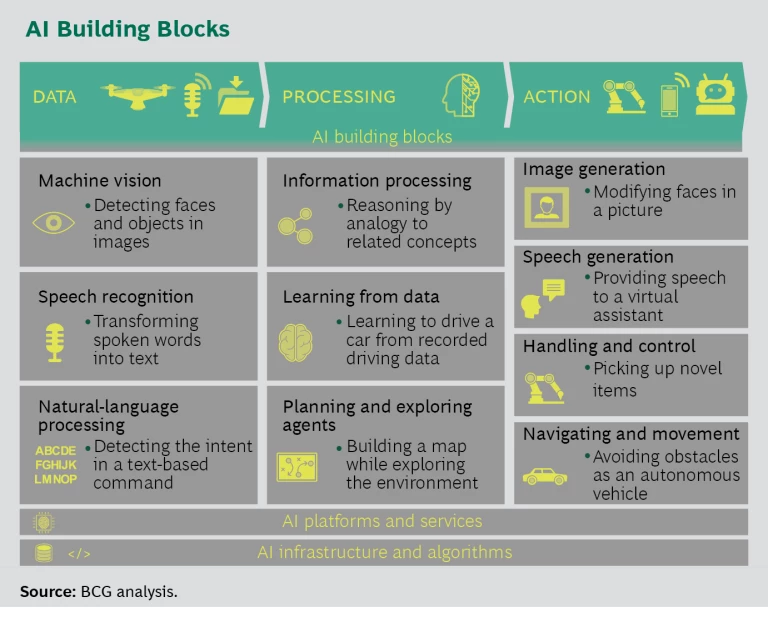

Companies can also access what we call AI building blocks. These building blocks, such as machine vision, are more functional than a naked algorithm but are not fully self-operational. Every use of AI depends on one or more of these building blocks, and each block relies on a collection of algorithms, APIs, and pretrained data. On the basis of our research and experience, we have identified ten building blocks, all of which are undergoing rapid development. (See “The Building Blocks of Artificial Intelligence.”) Executives need to understand the capabilities and potential value of these building blocks. What is hard but doable today will likely be easy in a few years, and the impossible today may be possible in three to five years.

For companies that want to be on the leading edge, however, the marketplace may not always offer the best options. When Ping An wanted to take advantage of facial recognition software, it was dissatisfied with the performance of the products available on the market—so it built its own. The resulting in-house system recognized the contours and characteristics of Chinese faces better than commercial alternatives did, and the tool has already verified more than 300 million faces in various uses. It complements Ping An’s other cognitive systems—such as voice and image recognition. The insurer’s experience suggests that all companies should assess their ability to use AI building blocks to gain competitive advantage.

Artificial Intelligence in Action

Significant adoption of AI in business remains low: only 1 in 20 companies has extensively incorporated AI, according to our survey with MIT. Nevertheless, every industry includes companies that are ahead of the pack. And even if no single company has achieved AI excellence across all functions, many are using AI to create substantial business value. The following uses, drawn from several industries and involving various organizational functions and processes, demonstrate how pervasive AI already is and how effective it can be in the right setting.

Marketing and Sales

AI gives companies the opportunity to offer customers personalized service, advertising, and interactions. The stakes are huge. Brands that integrate advanced digital technologies and proprietary data to create personalized experiences can increase revenue by 6% to 10%—two to three times the rate among brands that don’t. (See “Profiting from Personalization,” BCG article, May 2017.) In retail, health care, and financial services alone, BCG estimates, $800 billion in revenue will shift toward the top 15% of personalization companies during the next five years.

Many best practices of successful personalization have emerged in fast-moving retail environments. One global retailer, for example, used a loyalty app’s smartphone data—including location, time of day, and frequency of purchases—to gain a deep understanding of its customers’ weekly routines. By combining millions of individual data points with information on general consumer trends, the retailer built a real-time marketing system that now delivers 500,000 custom offers a week.

In some sales-and-marketing organizations, AI augments rather than automates processes. For example, a multiline insurer relied on machine learning to segment its customers in order to recommend “next best offers”—offers positioned at the intersection of a customer’s needs and the insurer’s objectives—to the company’s sales agents. To accomplish this, the insurer built a model of the insurance needs of customers as they pass through various life stages. The model relied on complex algorithms that crunched more than a thousand static and dynamic variables encompassing demographic, policy, agent tenure, and sales history data. As a result, the insurer could match particular policies with individual members of specific clusters. The system has the potential to increase cross-selling by 30%. The insurer can also use the machine learning system to improve sales optimization efforts by processing geographic, competitive, and agent performance data.
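The insurer's actual models are not described beyond segmentation and matching. As a minimal sketch of the segment-then-recommend idea, the code below swaps in plain k-means clustering (chosen here for illustration, not necessarily the insurer's technique) over two invented, normalized features, then recommends the policy most often held by a customer's segment peers that the customer does not yet own. The function names and the sample customers are hypothetical, and the sketch ignores the insurer's own objectives, which a real next-best-offer model would also weigh.

```python
import math
from collections import Counter


def nearest(point, centroids):
    """Index of the centroid closest to `point` (Euclidean distance)."""
    return min(range(len(centroids)), key=lambda i: math.dist(point, centroids[i]))


def kmeans(points, k, iterations=20):
    """Plain k-means with a deterministic initialization (evenly spaced input points)."""
    centroids = [points[i * len(points) // k] for i in range(k)]
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            clusters[nearest(p, centroids)].append(p)
        # Move each centroid to the mean of its members; keep it in place if the cluster is empty.
        centroids = [
            tuple(sum(axis) / len(members) for axis in zip(*members)) if members else centroids[i]
            for i, members in enumerate(clusters)
        ]
    return centroids


def next_best_offer(customer, customers, centroids):
    """Most common policy among the customer's segment peers that the customer does not hold."""
    features, held = customer
    segment = nearest(features, centroids)
    counts = Counter(
        policy
        for peer_features, policies in customers
        if nearest(peer_features, centroids) == segment
        for policy in policies
        if policy not in held
    )
    if not counts:
        return None
    # Highest count wins; ties break alphabetically so the result is deterministic.
    return min(counts, key=lambda policy: (-counts[policy], policy))


# Hypothetical customers: features are (age / 100, dependents / 5) plus the policies held.
customers = [
    ((0.25, 0.0), {"auto"}),
    ((0.28, 0.0), {"auto", "renters"}),
    ((0.30, 0.2), {"auto", "renters"}),
    ((0.40, 0.6), {"auto", "life", "home"}),
    ((0.42, 0.8), {"life"}),
    ((0.45, 0.6), {"life", "home"}),
]
centroids = kmeans([features for features, _ in customers], k=2)
young_offer = next_best_offer(((0.27, 0.0), {"auto"}), customers, centroids)
family_offer = next_best_offer(((0.43, 0.7), {"auto"}), customers, centroids)
```

With this toy data, a young single customer who holds only auto insurance is offered renters coverage, while a customer resembling the family segment is offered life insurance.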

Such examples demonstrate the effectiveness of AI in decentralized settings—such as retail or financial services sales—that benefit from a rich supply of contextual and specific customer data. Properly constructed pilot projects can generally validate a proof of concept within four to six weeks and help determine the data infrastructure and skill building necessary for a full rollout.

Research and Development

Compared with marketing and sales, R&D is a less mature field for AI. R&D has generated far less data than large retail chains have, and it has often failed to capture this data digitally. In addition, many R&D problems are complex, deeply technical, and bounded by hard scientific constraints. Even so, AI has high potential in this field. For instance, in the biopharmaceutical industry, where R&D is the primary profit driver, AI could reverse the trend toward higher costs and longer development times.

Citrine Informatics, an AI platform designed to accelerate product development, illustrates one way to meet the challenge of limited data. Most published studies have a bias toward successful experiments and, potentially, the interests of the funding organization. Citrine overcomes this limitation by collecting unpublished data through a large network of relationships with research institutions. “Negative data are almost never published. But the corpus of negative results is critical for building an unbiased database,” said Bryce Meredig, Citrine’s cofounder and chief science officer. This comprehensive approach has enabled the company to cut R&D time for its customers by one-half for specific applications.

Within the industrial goods sector, leading manufacturers combine AI, engineering software, and operating data—such as repair frequency—to optimize designs. AI is especially helpful in developing designs for additive manufacturing, also called 3D printing, because its algorithmically driven processes are unconstrained by engineering conventions.

Aggressive forms of data collection should be a key element in the design of AI pilots in R&D. It may be necessary to collaborate with universities, digitize old records, or even generate new data from scratch. Given the knowledge and expertise required to engage in R&D, useful turnkey AI solutions will rarely be available. Instead, scientists must rely on systematic trials for guidance in building the data inventory they need for future AI applications.

Operations

Operational practices and processes are naturally suited for AI. They often have similar routines and steps, generate a wealth of data, and produce measurable outputs. Many AI concepts that work in one industry will work in another. Popular current uses of AI include predictive maintenance and nonlinear production optimization, which analyzes a production environment’s elements collectively rather than sequentially or in isolation.

An oil refinery wanted to predict and avoid breakdowns of an important gasifier unit responsible for converting residual products of the refining process into valuable synthesis gas used in generating electricity. An unplanned outage of that unit forces a costly suspension of electricity generation for a month. Although the refinery had accumulated plenty of data about ongoing operations, it had no clear understanding of what specific factors drove the unit to break down. Conventional engineering models could not fully describe the complex interdependencies that existed among more than a thousand variables that might lead to failure.

Working closely with data scientists, the refinery’s engineers turned to artificial intelligence to determine the cause of the breakdowns, feeding six years’ worth of operational data and maintenance information through a machine learning algorithm. The AI model successfully quantified the impact of all key factors (including feedstock type, output quality, and temperature) on overall performance. Engineers were then able to gauge whether the unit would continue to run between episodes of planned maintenance.

Relying on insights that the machine learning algorithm generated, engineers designed a transparent, rules-based system for adjusting key operational settings for variables such as steam and oxygen to enable the unit to keep running between scheduled maintenance periods. This system minimizes the risk of unplanned shutdowns of the unit and reduces the number of short-term changes in the maintenance schedule, yielding significant economic benefits.

Predictive maintenance solutions also work for humans. A US insurer receiving fixed payments from Medicare wanted to use AI to reduce avoidable visits to the doctor or to hospitals by Medicare patients. The insurer fed data from medical histories, such as adverse reactions to drugs, and case managers’ notes into a machine learning system. The system devised an intelligent segmentation of customers and provided useful insights into preventive action. For instance, the recent loss of a patient’s spouse proved to be highly predictive of future medical intervention and the need for preventive care. These insights allowed the payer to redesign programs to achieve potential annual savings of $650 million.

Moving beyond maintenance, a smelter operator used AI and nonlinear optimization to improve the purity of its copper, which engineers had spent years trying to do. Working with a team of data scientists, the engineers fed five years of historical data into a neural network. The system suggested production changes that resulted in a 2% increase in purity, an improvement that doubled the smelter’s profit margins. The exercise took six weeks and did not require additional capital or operational spending.

Procurement and Supply Chain Management

In procurement, AI’s potential is substantial—because structured data and repeat transactions are common—but largely unrealized. Machines today can beat the top poker players in the world and can trade securities, but they have not yet shown the ability to outsmart vendors in corporate purchasing—at least publicly. (Companies may be using AI-enabled procurement systems but not telling their suppliers, or anyone else, in order to maintain a competitive edge.) The known examples of AI in procurement involve chatbots; semiautomated contract design and review; and sourcing recommendations based on analysis of news, weather, social media, and demand. Significant augmentation or even automation of sourcing is only now emerging.

Supply chain management and logistics are a different story. Historical data is readily available for these processes, making them a natural target for machine learning.

A global metals company recently built a collection of machine learning engines to help manage its entire supply chain, as well as to predict demand and set prices. The company integrated more than 40 data warehouses, ERP systems, and other reporting systems into one data lake. As a result of these changes, the systems can now identify and predict the way complex and opaque demand patterns ripple throughout the supply chain. For example, a shift in the US corn harvest by a single week has global repercussions along the supply chain for aluminum, a common packaging material for corn. The company’s initiative helped improve its customer service levels by 30% to 50%. The company is also set to achieve a 2% to 4% increase in profit margin within three years and a reduction in inventory of between four and ten days within two years.

This example highlights the importance of data, data preparation, and data integration in bringing AI to life. It takes far more time to collect data and build the data infrastructure than to build the machine learning model.

Support Functions

Companies often partially outsource support functions, which tend to be similar across organizations. But soon they may be able to buy AI-enabled solutions for these processes. Heavy AI development is underway at outsourcing giants such as IBM, Accenture, and India’s Big Four players (HCL, Infosys, Wipro, and Tata). These companies are shifting focus from emphasizing lower labor costs and scale to building intelligence and automation platforms in order to offer higher-value services.

Many service organizations are starting to recognize the benefits of combining AI with robotic process automation (RPA). They are using rules-based software bots to replace human desk activity and then adding flexibility, intelligence, and learning via AI. This approach combines the rapid payback of RPA and the more advanced potential of AI. (See “Powering the Service Economy with RPA and AI,” BCG article, June 2017.)

To replace human tasks, an Asian bank installed RPA and AI systems that learned on the fly. These systems routed cases to human workers only when they were uncertain about what to do, enabling the bank to reduce costs by 20% and decrease the time devoted to certain processes from days to minutes.
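Routing work to people only when the system is unsure is commonly implemented as a confidence threshold. The sketch below assumes the underlying model already returns a proposed action and a confidence score; the `Case` and `Router` names, the 0.9 threshold, and the action labels are hypothetical and not taken from the bank's system. Each case a human resolves is kept as a labelled example, which is one simple way a system can keep learning on the fly.

```python
from dataclasses import dataclass, field


@dataclass
class Case:
    case_id: str
    predicted_action: str  # what the model proposes, e.g. "approve_refund"
    confidence: float      # model's probability for that action, 0..1


@dataclass
class Router:
    """Automate confident cases; send uncertain ones to a human and keep the answer for retraining."""
    threshold: float = 0.9
    training_queue: list = field(default_factory=list)

    def route(self, case: Case) -> str:
        return "automate" if case.confidence >= self.threshold else "human"

    def record_human_decision(self, case: Case, human_action: str) -> None:
        # Each human resolution becomes a labelled example for the next model update.
        self.training_queue.append((case.case_id, human_action))


router = Router(threshold=0.9)
cases = [
    Case("A-101", "approve_refund", 0.97),
    Case("A-102", "flag_for_review", 0.55),
    Case("A-103", "approve_refund", 0.90),
]
routes = {c.case_id: router.route(c) for c in cases}
router.record_human_decision(cases[1], "approve_refund")
```

Raising the threshold sends more work to people and lowers the error rate of automated decisions; lowering it does the reverse, so the setting is a business choice as much as a technical one.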

AI in Product and Service Offerings

Unlike most of the prior examples, AI applications that involve advanced product and service offerings—digital personal assistants, self-driving cars, and robo-investment advisors, for example—tend to receive a lot of attention. Companies that offer AI-enabled services are eager to demonstrate to the public the competitive performance and features of these offerings.

Because their products and services and potentially their entire business models are at stake, companies must build strong internal AI teams. This helps explain the fierce competition for AI talent among technology vendors, car manufacturers, and suppliers. In the auto industry, for example, Bosch is investing €300 million over the next five years to establish AI facilities in Germany, India, and the US. “Ten years from now, scarcely any Bosch product will be conceivable without artificial intelligence. Either it will possess that intelligence itself, or AI will have played a key role in its development or manufacture,” said Volkmar Denner, the company’s CEO.

At the same time, automation creates new business models. Insurers and manufacturers, for example, will be able to use AI to predict risk with greater accuracy, allowing them to price on the basis of use, care, or wear. (For an examination of the use of AI across an entire industry value chain, see “Getting Real with Insurance.”)

GETTING REAL WITH INSURANCE

As the exhibit indicates, specific uses may include anything from insurance for automobiles directed by sensors and tracking devices to policies that protect against cybersecurity risks. In creating these new offerings, insurers should combine the modules outlined in the sidebar “The Building Blocks of Artificial Intelligence” with available data sources. For example, insurers might mash together machine vision and satellite data to automate quotes on property insurance.

In dealing with core processes, it is helpful to break down a discrete process, such as sales and pricing, to identify specific use cases. To select the best insurance for a customer, an insurer can combine machine learning and historical customer and sales-agent data to recommend the next best offer. Within fraud detection, some startups are already beginning to use photographs and machine vision to spot potential risks. Finally, insurers may be able to improve risk management by feeding customer data, such as sensor-monitored driving behavior, into a machine learning engine.

Many support (or back-office) processes are similar across industries, and vendors even offer some customer-facing solutions such as chatbots. In this field, vendors are likely to have more experience and may be able to take advantage of their scale by analyzing sanitized data from customers. In setting up these processes, companies should be aware of what other sectors are doing. For example, Goldman Sachs conducts its first round of employee interviews with AI-powered video technology from a startup, and Cylance’s cybersecurity solution for IT recognizes malware, such as the recent WannaCry ransomware, in milliseconds.

Insurance depends quite heavily on structured data, so it is not surprising that artificial intelligence developers can build many use cases with the building block we call “learning from data.” As time goes on, however, the machine vision, speech recognition, and natural-language processing building blocks will become increasingly helpful in making sense of unstructured data, and speech generation will assist in standard written communication.

Beyond the Company: How Industry Value Pools Could Shift

Collectively, use cases and potential scenarios can influence entire industry structures. Self-driving cars, for example, will affect not just car manufacturers but also drivers, fleet owners, and traffic patterns in cities. The city of Boston has determined that self-driving vehicles could reduce both the number of vehicles in transit and the average travel time by 30%. Parking needs would fall by half, and emissions would drop by two-thirds. (See Self-Driving Vehicles, Robo-Taxis, and the Urban Mobility Revolution, BCG report, July 2016.)

Health care offers another dramatic example. It consists of several sectors, including medical technology, biopharmaceuticals, payers, and providers, each with distinct and often competing interests. The industry is the scene of rampant AI experimentation across the value chain, particularly in the areas of R&D, diagnostics, care delivery, care management, patient behavior modification, and disease prevention.

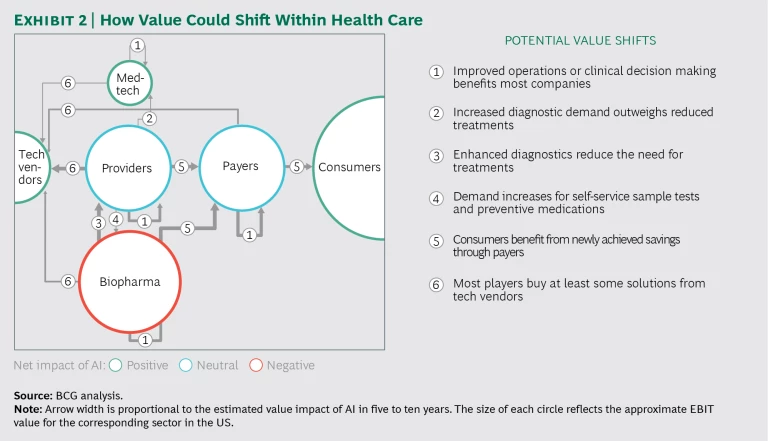

Exhibit 2 illustrates one potential scenario for how overall health care value pools may shift with the increased adoption of AI. Of course, value shifts for individual players within sectors will vary, and there will be winners and losers in each sector. Initially, most companies will benefit from the incorporation of AI into internal operational processes (the arrows labeled 1 in Exhibit 2). Biopharma companies and payers are likely to gain the most from these efforts because they can take advantage of R&D efficiencies, personalized marketing, and streamlined support functions.

Over the next five years, we expect AI to gain significant traction in diagnosing illnesses. Visual AI agents already outperform leading radiologists at diagnosing some specific forms of cancer, and many startups and tech giants are working on AI-enabled methods to detect cancer even earlier and to provide ever more accurate prognoses. In the primary-care setting, AI can improve or replace some physician interactions. Meanwhile, remote diagnostics can eliminate or drastically reduce the number of patient visits to the hospital for some conditions. These changes are likely to primarily benefit medtech companies (arrow 2), while possibly hurting biopharma companies (arrow 3) and to some extent providers (arrow 4), as better, earlier diagnoses and methods of prevention reduce demand for treatment.

With its intrinsic performance metrics, artificial intelligence will likely accelerate the trend toward value-based health care—the practice of paying for outcomes rather than volume. This trend should benefit consumers as payers pass along savings and set new rates for providers and biopharma companies (the arrows labeled 5). Finally, most companies are likely to buy at least some of their AI solutions from technology vendors (the arrows labeled 6), including traditional tech players that enter the health care space.

This possible scenario—which would occur against a backdrop of increasing demand for health care—could improve health outcomes, but biopharma companies could feel the heat. Alternatively, biopharma companies might make bolder moves in diagnostics, and personalized medicine might take off, opening up new profit pools. Furthermore, payers could themselves develop remote diagnostics, while providers start incorporating AI into their patient treatment protocols. In almost any scenario, medtech and technology vendors will profit.

Playing the AI Opportunity to Win

With so much uncertainty surrounding the development of AI, the smartest play for most companies is to develop a portfolio of short-term actions, based on current trends, and to prepare for future opportunities by building up capabilities and data infrastructure. The general approach is somewhat similar to what we advocate in digital strategy, but AI presents some important nuances. (See “The Double Game of Digital Strategy,” BCG article, October 2015.)

How to Get Started

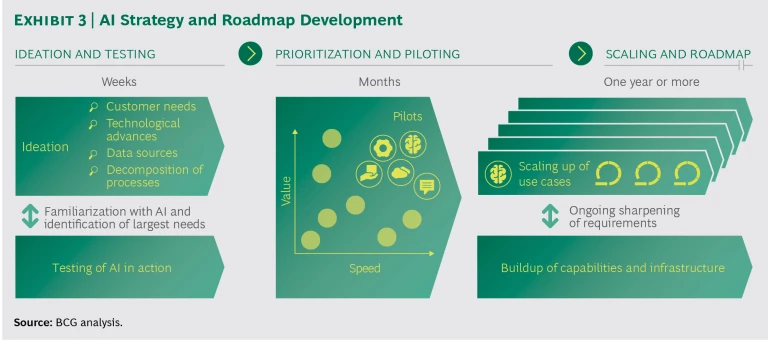

Executives should divide their AI journey into three steps: ideation and testing, prioritizing and launching pilots, and scaling up. (See Exhibit 3.)

- Ideation and Testing. At this stage, companies should rely on four lenses to identify the most promising use cases: customer needs, technological advances (especially those involving the AI building blocks), data sources, and decomposition (or systematic breakdown) of processes. (See “Competing in the Age of Artificial Intelligence,” BCG article, January 2017.) Customer needs offer crucial guidance in discovering valuable AI uses. The customers may be external or, in the case of support functions, internal. An in-depth understanding of developments in AI building blocks will be critical for systematically incorporating technology advances. Rich data pools, especially new ones, provide another important lens, given AI’s dependence on them. Finally, by breaking down processes into relatively routine and isolated elements, companies may uncover areas that AI can automate. Aside from customer needs, these lenses are quite distinct from those that companies must use to identify digital opportunities.

For companies with limited AI experience, we strongly recommend including a second, parallel testing stage based on a use case that is likely to deliver value, is reasonably well defined, and is only moderately complex. This test will help the organization gain familiarity with AI and will highlight data or data integration needs and organizational and capability hurdles—critical inputs for the next step.

- Prioritizing and Launching Pilots. Executives should prioritize pilots on the basis of each pilot’s potential value and speed of delivery. The testing done in the first step will provide insight into the time requirements and complexity of potential pilots.

Once the organization has selected a final set of pilots, it should run them as test-and-learn sprints, much as in agile software development. Since most pilots will still have to deal with kludgy data integration and processing, they will be imperfect. But they will help correctly prioritize and define the scope of data integration initiatives, and identify the capabilities and scale needed for a fully operational AI process. Each sprint should concurrently deliver concrete customer value and define the required infrastructure and integration architecture.

- Scaling Up. The last phase consists of scaling up the pilots into solid run-time processes and offerings, and building the capabilities, processes, organization, and IT and data infrastructure. Although this step may last 12 to 18 months, the ongoing rhythm of agile sprints should maximize value and limit major, unexpected course corrections.

While pursuing this operational program, executives should be implementing a set of activities to prepare themselves and their organization for the task of putting artificial intelligence to work.

- Understanding AI. Executives need to know the basics of AI and have an intuitive understanding of what is possible. Instead of simply reading media accounts of ever-new wonders, they could start to experiment with TensorFlow Playground or take instructive and widely available online courses. At their core, algorithms are simple, and beyond the mysterious jargon, the field is quite accessible. For these reasons, executives should be able to develop a functional understanding of the topic.

- Performing an AI Health Check. Executives should have a clear view of their starting position regarding technology infrastructure, organizational skills, setup, and flexibility. In addition, they should understand the level of access to internal and external data.

- Adding a Workforce Perspective. AI could become disruptive to workforces. Although employee concerns about imminent job loss often exaggerate the actual situation, introducing AI does create both emotional stress and the need for large-scale retraining. Think about a factory employee working alongside a robot, a procurement manager receiving input from an app, or a call center agent seamlessly taking over an interaction from a chatbot. Workplace communication, education, and training need to be part of the design from the initial pilots onward.

Thinking Beyond Tomorrow

The future of AI—including the extent of its potential to shift value creation in a radical way—remains highly uncertain. The best way to combat this uncertainty is to plot out and test several scenarios and to create a roadmap tying together the individual initiatives. These efforts will enable companies to sensibly modify their original plan and address its implications with regard to data, skills, organization, and the future of work:

- Data. Breakthroughs in AI largely depend on access to new, unique, or rich data assets. Fortunately, at least in some fields, machine learning models can start with an initial set of data and improve as more data becomes available. But since the amount of available data doubles every two years, the data that exists today will make up only about a quarter of what is available four years from now. Competitive advantage based on past data is therefore highly perishable; privileged access to future data is essential.

Our joint research project with MIT indicates that data ownership is a vexing problem for managers both across and within industries. For example, survey respondents are divided on whether proprietary data, a mix of public and company-owned data, or public-domain data is most prevalent in their industries. Critically, the degree of exclusivity often determines competitive advantage, which underscores the need for executives to understand in greater depth the value and availability of data sources within their industry and company.

- Skills. Our research with MIT shows that only a small fraction of companies understand the knowledge and skills that future AI will require. And the companies that do have advanced AI capabilities often struggle to hire and retain AI-savvy data scientists. This immediate shortage will ease over time as universities and providers of online education expand their AI offerings. Longer term, a more valuable skill may be the ability to manage teams of data scientists and business executives and to integrate AI insights and capabilities into existing processes, products, and services.

- Organization. Companies are divided about whether a centralized, decentralized, or hybrid organizational model offers the best approach to AI, according to our research with MIT. The more critical issue is the need for organizational flexibility and cross-functional teamwork among people with AI and business expertise as the organization’s people and machines work together more and more closely.

Apart from overall organizational design, it is becoming increasingly clear that AI, as a technology, works best in structures that emphasize decentralized actions but centralized learning. This is true whether the application in question is self-driving cars, real-time marketing, predictive maintenance, or support functions within global companies. A central entity collects and processes data from all decentralized agents to maximize the learning set, and then it deploys new models and tweaks to those agents on the basis of centralized learning from the aggregated data pool.

- Future of Work. AI will undoubtedly influence the structure of future work. But despite fears that AI will lead to imminent large-scale job loss, our research with MIT suggests that its effects will be limited in the foreseeable future. Most respondents do not expect AI to reduce jobs at their companies within the next five years. More than two-thirds of the survey participants are not worried that AI will automate their jobs; on the contrary, they hope that AI will take over unpleasant tasks they currently perform. At the same time, almost all the respondents recognize that AI will require employees to learn new skills, much as auto mechanics have had to expand their skill set. The difference is that employees will not have decades to adapt, so they may need to use new educational offerings, and AI itself, to speed up reskilling. Organizations need to be flexible, but so do employees and executives. The best preparation for long-term success is to build the capacity to change.
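The "decentralized actions, centralized learning" structure described under Organization can be illustrated with a minimal sketch. It assumes a toy setting in which every agent (a vehicle, a machine, a marketing channel) uses the same one-variable linear model. The names `Agent`, `CentralHub`, and `fit_line` are hypothetical illustrations, not part of any BCG framework or real product. Agents decide locally and log outcomes; a central hub pools all logs, retrains once on the aggregated data, and pushes the updated model back to every agent.

```python
from dataclasses import dataclass, field


def fit_line(points):
    """Ordinary least squares fit of y = slope * x + intercept (toy model)."""
    n = len(points)
    if n == 0:
        return 0.0, 0.0                      # nothing to learn from yet
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    slope = sxy / sxx if sxx else 0.0
    return slope, mean_y - slope * mean_x


@dataclass
class Agent:
    """Hypothetical decentralized agent that acts on its local copy of the model."""
    name: str
    model: tuple = (0.0, 0.0)                # (slope, intercept) deployed by the hub
    log: list = field(default_factory=list)  # local observations awaiting upload

    def act(self, x):
        slope, intercept = self.model
        return slope * x + intercept         # local decision, no round trip to the hub

    def observe(self, x, y):
        self.log.append((x, y))              # record the outcome for central learning


class CentralHub:
    """Hypothetical central entity: pools all agents' data, retrains, redeploys."""

    def __init__(self, agents):
        self.agents = agents
        self.pool = []                       # aggregated learning set across all agents

    def sync(self):
        for agent in self.agents:            # collect data from every agent
            self.pool.extend(agent.log)
            agent.log.clear()
        model = fit_line(self.pool)          # learn once from the combined pool
        for agent in self.agents:            # push the same updated model to all agents
            agent.model = model
        return model


if __name__ == "__main__":
    agents = [Agent("plant_a"), Agent("plant_b")]
    agents[0].observe(1.0, 3.0)
    agents[0].observe(2.0, 5.0)
    agents[1].observe(3.0, 7.0)
    agents[1].observe(4.0, 9.0)
    hub = CentralHub(agents)
    print(hub.sync())            # (2.0, 1.0)
    print(agents[1].act(5.0))    # 11.0
```

Neither plant alone sees the full range of inputs, but after one sync both act on a model learned from the pooled data. A real deployment would replace the toy regression with production models and add data pipelines, versioning, and privacy controls.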

AI will fundamentally transform business. Your best chance to succeed is to tune out the hype and do the necessary work. There is no substitute for action.

The BCG Henderson Institute is Boston Consulting Group's strategy think tank, dedicated to exploring and developing valuable new insights from business, technology, and science by embracing the powerful technology of ideas. The Institute engages leaders in provocative discussion and experimentation to expand the boundaries of business theory and practice and to translate innovative ideas from within and beyond business.