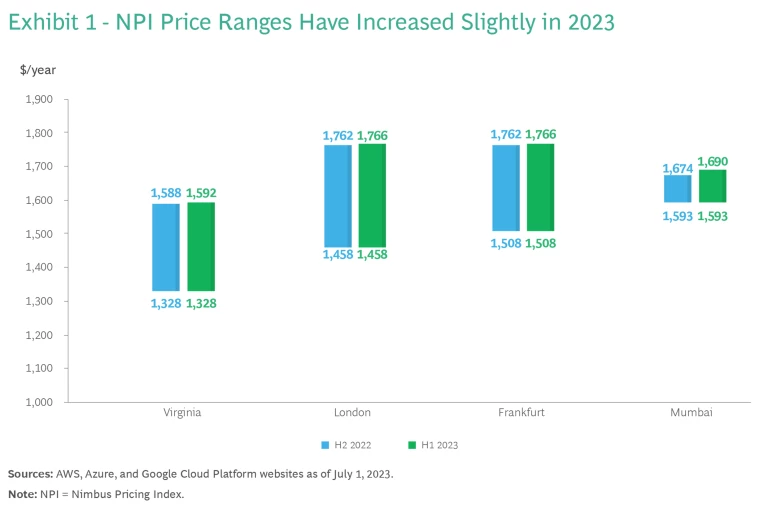

For the first time since the debut of cloud services, prices began to edge up in the first six months of 2023, according to the Nimbus Pricing Index (NPI). Many have wondered if inflationary pressures and weaker revenue growth for cloud service providers (CSPs) would eventually lead to price increases. That time seems to have arrived. Although the impact is still marginal in most geographies, the price increases are significant in some European countries.

About the Series

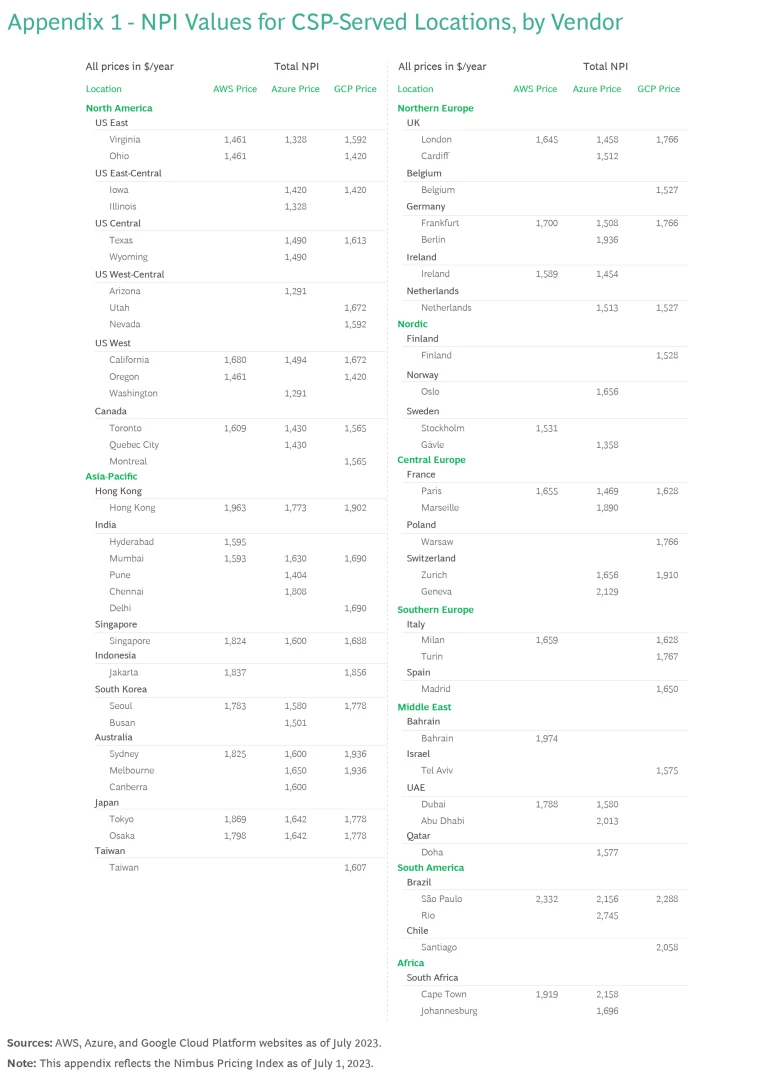

The NPI, which reflects average pricing in US dollars across the three main CSPs, recorded an average increase of 0.3% per price point (in other words, the price offered by one vendor in one location). The largest single increase was about 2% in South America. (See Appendix 1 for the full NPI table.) We also note that price ranges in some locales nudged upward in the first half of 2023 compared to the second half of 2022.
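One plausible reading of "average increase per price point" is an unweighted mean of the percentage changes across every vendor-location pair. The NPI's actual weighting is not described here, so treat this as a minimal sketch under that assumption. The vendor names, locations, and prices are hypothetical placeholders, not NPI data.

```python
from statistics import mean

# Hypothetical placeholder prices in USD per hour for one instance spec.
# These are NOT NPI data; they only illustrate the arithmetic.
# Key: (vendor, location) -> (H2 2022 price, H1 2023 price)
price_points = {
    ("vendor_a", "us_east"): (0.100, 0.100),
    ("vendor_b", "us_east"): (0.096, 0.097),
    ("vendor_c", "south_america"): (0.150, 0.153),
    ("vendor_a", "europe_west"): (0.110, 0.110),
}


def pct_change(old: float, new: float) -> float:
    """Percentage change from the old price to the new price."""
    return (new - old) / old * 100


def summarize(points: dict) -> tuple:
    """Return (unweighted mean change, price point with the largest change, that change)."""
    changes = {key: pct_change(old, new) for key, (old, new) in points.items()}
    largest = max(changes, key=changes.get)
    return mean(changes.values()), largest, changes[largest]


avg, where, top = summarize(price_points)
print(f"average change {avg:.2f}%, largest {top:.1f}% at {where}")
```

Because each vendor-location pair counts once, a single large move in one location (here the hypothetical South American price point) lifts the average only modestly.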

The four locations in Exhibit 1 are hubs where all three major CSPs have an offering that meets the specifications of the NPI. These modest increases suggest that CSPs are still looking for ways to boost revenue without raising list prices directly, especially for computing power, which makes up the largest share of most clients' cloud bills.

But the story is a bit different when you consider pricing in other currencies. Because Azure changed the foreign exchange rates it applies in some European locations, bills denominated in euros, pounds, Danish kroner, Norwegian kroner, or Swedish kronor jumped by 9% to 15%. Elsewhere, there have been hikes in Google Cloud Platform's network egress pricing, as well as in pricing for some types of storage in specific locations such as Indonesia and South America.
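Why can a local-currency bill jump by double digits while USD list prices barely move? A minimal sketch, assuming the local bill is simply the USD list price multiplied by the provider's billing exchange rate; the rates below are hypothetical and are not Azure's published rates.

```python
def local_bill(usd_price: float, local_per_usd: float) -> float:
    """Local-currency amount billed for a USD list price at the provider's billing rate."""
    return usd_price * local_per_usd


def local_change_pct(usd_old: float, usd_new: float,
                     rate_old: float, rate_new: float) -> float:
    """Percentage change in the local-currency bill between two periods."""
    return (local_bill(usd_new, rate_new) / local_bill(usd_old, rate_old) - 1) * 100


# Hypothetical example: the USD list price is unchanged, but the provider's
# billing rate moves from 0.80 to 0.92 local units per USD.
print(round(local_change_pct(1000.0, 1000.0, 0.80, 0.92), 1))
```

Under these assumptions the entire 15% increase comes from the rate change, since the USD price is held constant.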

Generative AI Models: Build or Buy?

GenAI is a white-hot topic across industries, and cloud services are no exception. Cloud workloads that train AI models require more demanding specifications than workloads built for standard operations. These specifications can include large memory requirements and often call for specialized hardware, such as graphics processing units (GPUs), optimized for the computations involved.

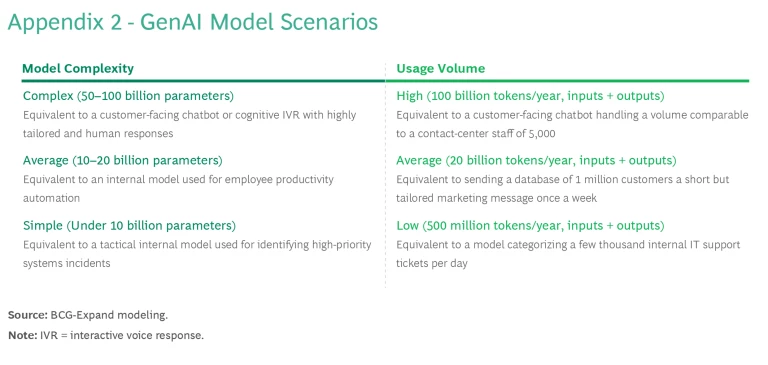

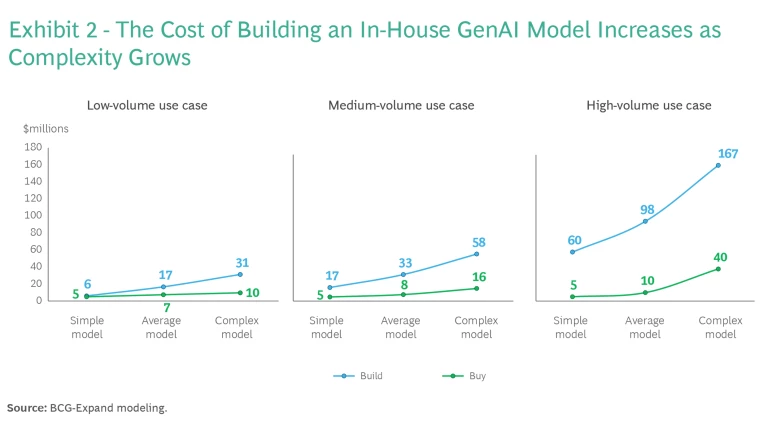

GenAI raises the age-old tech question of whether to build or buy. Many deployment options are available to companies that want to use GenAI, but in our analysis we chose to compare the two ends of the spectrum: a custom model built from scratch versus a vendor option with zero customization. (See Exhibit 2.)

The models studied ranged from a low-volume, low-complexity model (one that sorts internal IT tickets to flag high-priority items, for example) to a high-volume, high-complexity model, such as a customer-facing service chatbot for a large firm. This analysis gives us a range of possible spending that includes computing power, storage, training, API costs, and human resourcing. (See Appendix 2 for details on the complexity and volume parameters for each model type.) In future installments of Cloud Cover, we will analyze other options within this range, such as using open-source models.

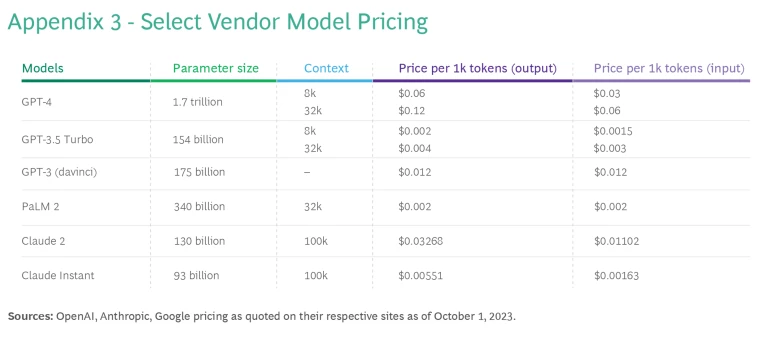

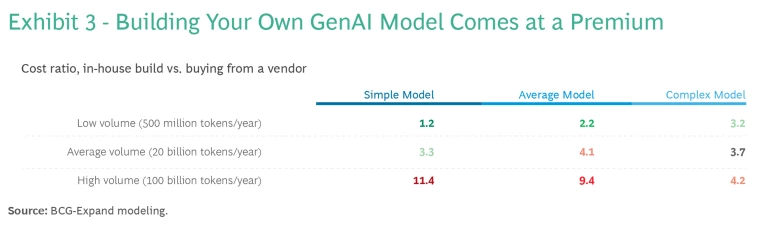

According to our analysis, the vendor solution is always more affordable, thanks to vendors' significant economies of scale and in-house GPU capacity. Still, the decision about whether to build or buy is not always clear-cut, especially for low-volume models. For example, building a low-volume, simple model in-house costs only 1.2 times as much as purchasing an off-the-shelf version. (See Exhibit 3.)

Because off-the-shelf solutions limit customization (and potentially performance), a company might justify building its model in-house. But as volume and complexity rise, the additional spending needed to build in-house becomes harder to justify. For example, building a high-volume, simple model costs 11.4 times as much as purchasing an off-the-shelf version.
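The build-to-buy multiples above are ratios of total cost, where each total sums the components the analysis covers: computing power, storage, training, API costs, and human resourcing. A minimal sketch of that arithmetic, assuming a simple additive cost model; the `CostBreakdown` class and every figure in it are hypothetical and are not the study's inputs.

```python
from dataclasses import dataclass, fields


@dataclass
class CostBreakdown:
    """Cost components for one deployment option (hypothetical units, e.g. USD thousands per year)."""
    compute: float = 0.0
    storage: float = 0.0
    training: float = 0.0
    api_calls: float = 0.0
    resourcing: float = 0.0

    def total(self) -> float:
        # Sum every component field so new components are picked up automatically.
        return sum(getattr(self, f.name) for f in fields(self))


def build_to_buy_ratio(build: CostBreakdown, buy: CostBreakdown) -> float:
    """How many times as much the in-house build costs as the vendor option."""
    return build.total() / buy.total()


# Hypothetical figures only; they are not the study's inputs.
build = CostBreakdown(compute=40, storage=5, training=25, resourcing=50)
buy = CostBreakdown(api_calls=20, resourcing=80)
print(f"build costs {build_to_buy_ratio(build, buy):.1f}x as much as buy")
```

Keeping the components separate makes it easy to see which line items drive the multiple as volume and complexity grow.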

Vendor pricing for out-of-the-box models of similar complexity varies. (See Appendix 3 for select vendor pricing.) In most cases, however, the choice of vendor does not heavily affect overall cost. The exception may be high-volume models, where API call costs begin to outweigh other costs such as resourcing.
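A simple linear cost model illustrates why vendor choice matters mainly at high volume. The sketch assumes that the vendor option's cost is a fixed resourcing amount plus a per-call API price times call volume; the two per-call prices and the resourcing figure are hypothetical, not quotes from any vendor.

```python
def total_cost(calls: int, price_per_call: float, resourcing: float) -> float:
    """Vendor-option cost: fixed resourcing plus per-call API spend (linear model)."""
    return resourcing + calls * price_per_call


def vendor_gap_pct(calls: int, price_a: float, price_b: float, resourcing: float) -> float:
    """How much more (in percent) vendor B costs than vendor A at a given call volume."""
    cost_a = total_cost(calls, price_a, resourcing)
    cost_b = total_cost(calls, price_b, resourcing)
    return (cost_b / cost_a - 1) * 100


def crossover_calls(price_per_call: float, resourcing: float) -> float:
    """Call volume above which API spend exceeds resourcing spend."""
    return resourcing / price_per_call


# Hypothetical prices: USD per API call and USD of resourcing per month.
PRICE_A, PRICE_B, RESOURCING = 0.002, 0.003, 10_000.0

for calls in (100_000, 50_000_000):
    gap = vendor_gap_pct(calls, PRICE_A, PRICE_B, RESOURCING)
    print(f"{calls:>11,} calls/month: vendor B costs {gap:.1f}% more")
print(f"API spend overtakes resourcing above {crossover_calls(PRICE_A, RESOURCING):,.0f} calls/month")
```

At low volume the fixed resourcing cost dominates, so a 50% difference in per-call price barely moves the total; once volume passes the crossover point, the per-call price drives most of the difference.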

Choosing the Right Architecture

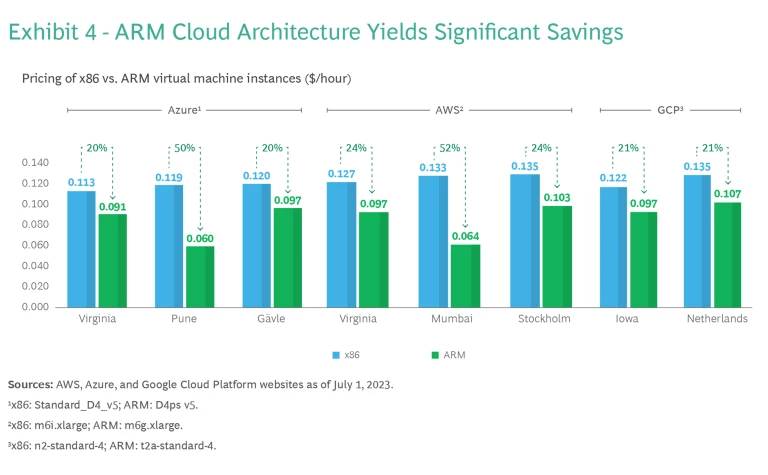

As companies look for ways to keep cloud architecture costs down, more are considering switching their computing architecture from x86 to Advanced RISC Machine (ARM). For many years, x86 has dominated virtual machine deployment because these processors deliver high throughput and are deeply embedded in existing hardware. But x86 processors also consume significant energy and generate considerable heat.

For these reasons, CSPs have begun rolling out ARM-based options. ARM processors are widely used in smartphones and other devices where weight and size are key factors. Amazon Web Services introduced its ARM-based Graviton processors in 2018; Azure followed suit in 2022 with virtual machines based on the Ampere Altra processor, as did Google with its Tau T2A virtual machine series.

Along with their power efficiency (which can support ESG commitments) and good performance at a lower cost than x86, ARM services are highly scalable and can readily absorb spikes in usage. Our analysis shows that ARM does indeed yield savings: in most locations, ARM prices are 20% lower than those of like-for-like x86 instances, though savings range from 19% to as much as 52%. (See Exhibit 4.)
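The savings figures compare each ARM price with its like-for-like x86 price in the same location. A minimal sketch of that comparison, using hypothetical hourly prices chosen so the results fall within the range reported above; the location names and prices are placeholders, not Exhibit 4 data.

```python
from statistics import median


def arm_savings_pct(x86_hourly: float, arm_hourly: float) -> float:
    """Percentage saved by choosing the ARM instance over its like-for-like x86 instance."""
    return (1 - arm_hourly / x86_hourly) * 100


# Hypothetical (x86, ARM) hourly prices in USD for like-for-like instances.
pairs = {
    "location_1": (0.100, 0.080),
    "location_2": (0.100, 0.080),
    "location_3": (0.100, 0.081),
    "location_4": (0.100, 0.048),
}

savings = {loc: arm_savings_pct(x86, arm) for loc, (x86, arm) in pairs.items()}
print(f"median {median(savings.values()):.0f}%, "
      f"range {min(savings.values()):.0f}% to {max(savings.values()):.0f}%")
```

Reporting the median alongside the range keeps a single outlier location from distorting the "typical" saving.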

There are, however, downsides to ARM architectures. ARM processors use a simplified instruction set, so while they can calculate quickly, their ability to perform multiple actions simultaneously and run parallel tasks on a single CPU is more limited. Another significant drawback is that firms need to rearchitect existing x86 applications for ARM-based virtual machines to achieve optimal performance. Once this rearchitecting is done, however, companies can see better performance than with x86 equivalents, because ARM instances typically map each vCPU to a dedicated physical core rather than to one thread of a shared core.
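The vCPU-to-core mapping changes how much physical hardware each hourly price buys. A minimal sketch, assuming (as is common, though each provider's instance documentation should be checked) that an x86 vCPU is one of two hyperthreads on a physical core while each ARM vCPU is a full core; the `Instance` class, instance names, and prices are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Instance:
    """A VM offering; names and prices are hypothetical placeholders."""
    name: str
    vcpus: int
    threads_per_core: int  # assumed: 2 for x86 with SMT (hyper-threading), 1 for ARM
    hourly_usd: float

    @property
    def physical_cores(self) -> float:
        return self.vcpus / self.threads_per_core

    @property
    def price_per_core(self) -> float:
        return self.hourly_usd / self.physical_cores


x86 = Instance("x86-8vcpu", vcpus=8, threads_per_core=2, hourly_usd=0.40)
arm = Instance("arm-8vcpu", vcpus=8, threads_per_core=1, hourly_usd=0.32)

for vm in (x86, arm):
    print(f"{vm.name}: {vm.physical_cores:.0f} physical cores, "
          f"${vm.price_per_core:.3f} per core-hour")
```

Under these assumptions, the same vCPU count buys twice as many physical cores on ARM, so the per-core price gap is wider than the headline per-instance discount.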

Please keep an eye out for the next issue of Cloud Cover, where we’ll continue to develop the NPI and address key questions on cloud services and pricing—including insights on specialist offerings, other CSPs, and price evolution over time.