Companies generate and access very large amounts of data to make highly informed predictions, decisions, and analyses. The challenge for many of these companies, however, is to find the most efficient way to analyze and apply both internal and external data to accomplish specific business goals. The use of cloud services enables architects, developers, and solution owners to improve their ability to leverage data.

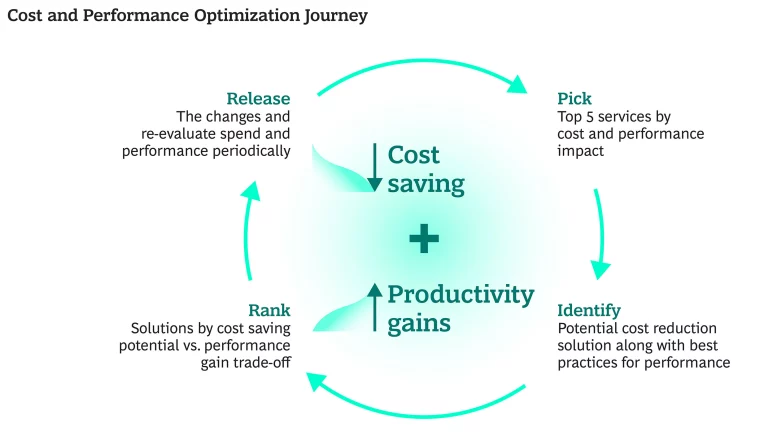

At a high level, using cloud services effectively begins with identifying the top sources of cloud spend and separating the processes that are still needed from those that are no longer needed or can be scaled down. Changes to these services are then implemented, and the results are monitored.

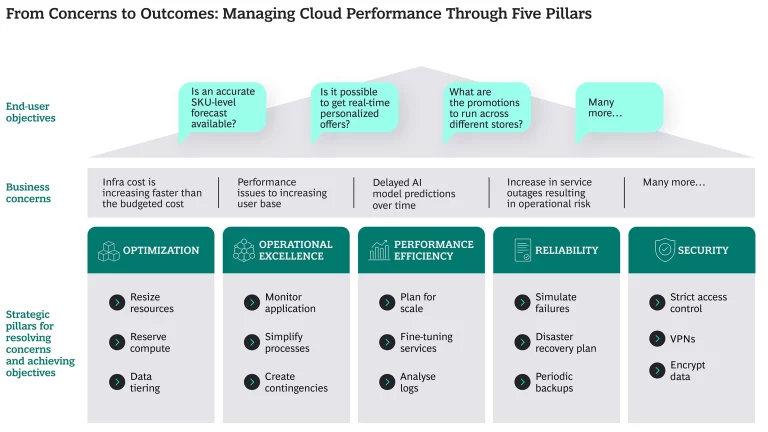

The strategic pillars of sustainable cloud optimization

Taking full advantage of cloud services involves using a suite of best practices that have been consolidated into five strategic pillars: optimizing increasing costs, gaining operational excellence, increasing performance efficiency, improving reliability, and enforcing security. The BCG X team has found that operationalizing these pillars is typically a non-linear process in which each pillar is revisited on numerous occasions as the larger project advances in time and complexity.

Pillar #1: Optimizing increasing costs

You might wish that the first of these pillars was titled “optimizing costs” instead of “optimizing increasing costs.” But the fact is that when dealing with large-scale data projects, costs will increase over time. The goal, then, is to reduce the rate of cost increases. This is a recursive process because cost increases can be limited as operational excellence improves, as performance efficiency increases, as the system becomes more reliable and, to some extent, as appropriate security measures are implemented and enforced.

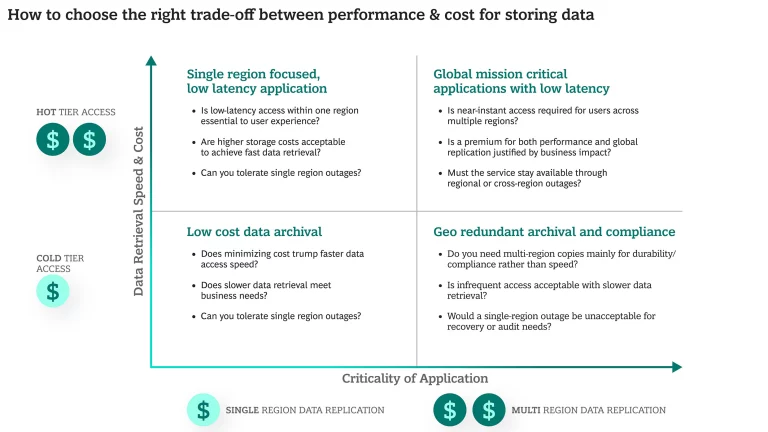

One of the earliest and most important decisions is how and where to store data. The initial decisions your team makes about data storage will change over time as both the amount of data and the way that data is used change. At the beginning of a project, an entire data set might consist of 100 gigabytes. Six months later, that might have expanded to 20-30 terabytes, and as the data grows, so do storage and compute costs. Bandwidth costs can also increase as more data is transferred from region to region.

There are, however, several ways to manage cost increases. Fundamentally, it is a matter of continually asking whether you need all the compute you’re paying for at any given moment. You should provide only those resources and services required by each participant at each point in time. If, for example, you determine that too many resources are being allocated to non-production processes, scale back those resources so you can lower costs while still upholding performance standards. As a rule, it’s advisable to increase or decrease resources as needed, rather than setting resource levels at project startup and then never revisiting those requirements.
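The scale-back check described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `Resource` record, the environment labels, and the 40 percent peak-utilization threshold are all hypothetical, and real utilization figures would come from your cloud provider's monitoring service.

```python
from dataclasses import dataclass


@dataclass
class Resource:
    name: str
    environment: str         # hypothetical labels such as "prod", "dev", "test"
    allocated_vcpus: int
    peak_cpu_percent: float  # highest utilization seen in the review window


def scale_back_candidates(resources, max_peak_percent=40.0):
    """Return names of non-production resources whose peak CPU stayed low.

    The threshold is an assumption for illustration, not a recommendation.
    """
    return [
        r.name
        for r in resources
        if r.environment != "prod" and r.peak_cpu_percent < max_peak_percent
    ]


# Made-up fleet used only to show the shape of the check.
fleet = [
    Resource("api-prod", "prod", 16, 35.0),
    Resource("etl-dev", "dev", 16, 12.5),
    Resource("etl-test", "test", 8, 71.0),
]
candidates = scale_back_candidates(fleet)
print(candidates)  # ['etl-dev']
```

Production resources are excluded on purpose, so the review only proposes changes where a temporary dip in performance is least likely to affect clients.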

You can also manage compute costs by buying capacity from the spot pool instead of reserving it. Enabling a cost-monitoring dashboard, if you don't already have one, is the first step because it makes such inquiries easier. The second step is to compare: find out which services are costing more than you’d expected, then find a better solution. By placing your compute in the same region as your storage, you may be able to realize significant savings on bandwidth costs while also improving operational efficiency. Because cold storage costs a third to a fifth as much as normal storage, consider offsetting cost increases by archiving as much data as possible. Pausing compute for non-essential processes during weekends can offset them further.
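To get a feel for the size of these two levers, here is a rough back-of-the-envelope sketch. The prices, data volume, and archived share are hypothetical placeholders; only the cold-to-normal price ratio (between one-fifth and one-third) comes from the text above.

```python
def monthly_storage_cost(total_tb, archived_fraction, hot_price_per_tb, cold_ratio):
    """Monthly storage cost when part of the data sits in cold storage.

    cold_ratio is the cold price as a fraction of the normal ("hot") price;
    the text above puts it at roughly 1/5 to 1/3.
    """
    hot_tb = total_tb * (1 - archived_fraction)
    cold_tb = total_tb * archived_fraction
    return hot_tb * hot_price_per_tb + cold_tb * hot_price_per_tb * cold_ratio


def weekend_pause_saving(hourly_rate, hours_per_day=24, paused_days_per_week=2):
    """Weekly compute spend avoided by pausing a non-essential process."""
    return hourly_rate * hours_per_day * paused_days_per_week


# Hypothetical inputs: 30 TB of data, 20 currency units per TB per month,
# half of the data archived, cold storage priced at one quarter of normal.
before = monthly_storage_cost(30, 0.0, 20.0, 0.25)
after = monthly_storage_cost(30, 0.5, 20.0, 0.25)
paused = weekend_pause_saving(hourly_rate=2.0)

print(before, after, paused)  # 600.0 375.0 96.0
```

Retrieval fees and minimum retention periods for cold tiers are deliberately left out of this sketch; they vary by provider and should be checked before archiving data that is still read regularly.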

Pillar #2: Gain operational excellence

Even though the cost of providing a high level of operational excellence may, to some extent, run counter to the first pillar, investing generously in this second pillar ensures that you can closely monitor processes and cadences, quickly identify and act on emerging issues, and automate deployment. Be ready to bring in additional resources as needed to maintain high levels of operations without breaching service level agreements, so that your service is neither unresponsive nor sluggish during business hours.

Operational excellence also includes making sure that your team understands all the relevant technical terms and what the tech stack comprises. At BCG X, we have a wide selection of runbooks that allow us to deal with disruptions. But this requires proper monitoring. To ensure visibility, we suggest keeping extensive tracking details for a minimum of one week. That way, your team can access these details and, with the click of a button, roll back the code to an earlier version. Operational excellence is often a matter of having contingencies and proper monitoring so that you know exactly what to do when problems arise.

Pillar #3: Increase performance efficiency

Performance efficiency is about designing solutions to scale. Because scale tends to grow with time, this can be a very subjective pillar. So, begin by setting a baseline: “This is our current performance level, this is how much time it takes for API calls to complete, or these are the pipelines we can run.” Then look for solutions that are easy to implement, which can be as simple as using the cloud provider portal to choose the SKU most appropriate to that moment in time, or changing a backup option by clicking on a radio button. Either of these relatively simple actions can improve efficiency and save thousands of dollars per month, while other improvements might require costly developer hours for code rewrites.
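One simple way to record such a baseline is to time a representative call several times and keep the median and the 95th percentile. The sketch below assumes the API call can be wrapped in a Python callable; the function names and the sample latencies are hypothetical.

```python
import statistics
import time


def measure_latencies_ms(call, runs=20):
    """Time `call()` `runs` times and return each duration in milliseconds."""
    durations = []
    for _ in range(runs):
        start = time.perf_counter()
        call()
        durations.append((time.perf_counter() - start) * 1000.0)
    return durations


def summarize(latencies_ms):
    """Return the median and the nearest-rank 95th percentile of the samples."""
    ordered = sorted(latencies_ms)
    rank = (95 * len(ordered) + 99) // 100  # ceil(0.95 * n) in integer math
    return {
        "median_ms": statistics.median(ordered),
        "p95_ms": ordered[rank - 1],
    }


# Hypothetical samples standing in for real API timings.
baseline = summarize([120, 130, 110, 500, 125, 115, 135, 140, 128, 122])
print(baseline)  # {'median_ms': 126.5, 'p95_ms': 500}
```

Keeping both numbers is a deliberate choice: the median shows the typical experience, while the 95th percentile exposes the slow outliers that a SKU change or code rewrite is usually meant to remove.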

Greater efficiency can also be achieved by digging deep into cloud logs. Jobs in lower environments (environments where the client does not interact directly with production) can often be paused. Instead of running the same job seven times a week, you can often achieve similar results by running it just twice a week. From a development perspective, periodicity is not that major an issue: Pausing or delaying jobs can save money without jeopardizing performance efficiency. As we noted with regard to cost optimization, non-production workloads don't need the same amount of resources, so it is possible to lower costs without harming operational excellence.

Pillar #4: Improve reliability

Many of the solutions that BCG X develops include mission-critical systems. These systems would impact the day-to-day work of our clients if they were to go offline, so they must be extremely reliable. To ensure reliability, run load tests to ascertain which machines are regularly hitting 100 percent of their quota. Even during non-business hours when some background processes are run, make sure failovers are in place, because things do fail in the cloud. You might not be able to predict that tomorrow at 4:00 PM a system will go offline. But you can predict which services have not been operating at maximum efficiency and make sure systems are in place to ensure that services are sufficient until you can restore full efficiency. In general, disaster recovery plans along with periodic snapshots/backups of services and data are essential to counter any service disruptions.
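The load-test review described above can be sketched as a small filter over utilization samples. Everything here is an assumption for illustration: the machine names, the readings (expressed as a fraction of quota), and the rule that "regularly" means at least half of the samples.

```python
def saturated_machines(samples, quota_fraction=1.0, min_share=0.5):
    """Return machines that hit their quota in at least `min_share` of samples.

    `samples` maps a machine name to utilization readings, each expressed
    as a fraction of that machine's quota (1.0 means 100 percent).
    """
    flagged = {}
    for machine, readings in samples.items():
        if not readings:
            continue  # no data collected, nothing to conclude
        hits = sum(1 for r in readings if r >= quota_fraction)
        share = hits / len(readings)
        if share >= min_share:
            flagged[machine] = share
    return flagged


# Hypothetical load-test readings.
load_test = {
    "worker-1": [0.6, 1.0, 1.0, 0.9, 1.0],
    "worker-2": [0.4, 0.5, 0.45, 0.5, 0.6],
    "worker-3": [1.0, 0.7, 0.6, 0.5, 0.4],
}
flagged = saturated_machines(load_test)
print(flagged)  # {'worker-1': 0.6}
```

A machine that touches its quota once, like `worker-3` here, is not flagged; the point is to find the ones that do so regularly and therefore most need failover capacity.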

Pillar #5: Enforce security

All data undergoes a life cycle, and information is rarely kept indefinitely. If it is retained, systems must be in place to track who has access to it. If there is a violation, the proper people must be made aware of it. And at the end of a project, a plan must be in place to securely shred data or move it to a new location. All networks should be locked behind VPNs, with vulnerabilities patched as soon as they are identified.

In accomplishing all these security tasks, there is no need to reinvent the wheel each time. Utilize existing cloud-based security services while following a few simple rules: Make sure that no passwords or PII are stored in unencrypted formats, encrypt information whenever possible, and do not retain sensitive information as plain text (even in audit logs). It is important that an additional burden not be placed on developers to follow these rules. Instead, create automated security processes such that when new code is submitted, a zero-trust system ensures that the code will not be deployed until it passes a series of rigorous tests and analyses not mediated by humans.
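As one small example of automating the "no sensitive plain text in logs" rule, the sketch below attaches a redaction filter to Python's standard `logging` module. The two regular expressions are deliberately simple and hypothetical; a real deployment would rely on a vetted PII and secret detection service rather than hand-written patterns.

```python
import logging
import re

# Illustrative patterns only; they will not catch every secret or PII format.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
SECRET = re.compile(r"\b(password|token|secret)\s*[=:]\s*\S+", re.IGNORECASE)


def redact(text):
    """Mask credential values and email addresses in a log message."""
    text = SECRET.sub(lambda m: f"{m.group(1)}=[REDACTED]", text)
    return EMAIL.sub("[REDACTED_EMAIL]", text)


class RedactingFilter(logging.Filter):
    """Rewrite each record so only the redacted message reaches handlers."""

    def filter(self, record):
        record.msg = redact(record.getMessage())
        record.args = None  # arguments are already merged into the message
        return True


handler = logging.StreamHandler()
handler.addFilter(RedactingFilter())
audit_log = logging.getLogger("audit")  # hypothetical logger name
audit_log.addHandler(handler)
audit_log.warning("login failed user=%s password=%s", "jane@example.com", "hunter2")
```

Because the filter sits on the handler, developers keep logging as usual and the redaction happens without any extra step on their part, which matches the goal of not placing an additional burden on them.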

You can also protect operations by making sure that no single person can harm the system. It does not matter whether such harm is intentional or unintentional, or whether it is caused by staff or by the client. In a recent BCG X engagement, more than 30 developers were working on the client’s project. Each developer had access to data lakes, and each could create or delete SKUs. The system was designed, however, so that only a select few could delete or start processes inside the production system.
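The access split in that engagement can be illustrated with a minimal role-to-permission mapping. The role names and action names below are hypothetical and are not taken from the actual system; in practice this policy would live in the cloud provider's identity and access management service rather than in application code.

```python
from enum import Enum, auto


class Action(Enum):
    READ_DATA_LAKE = auto()
    CREATE_SKU = auto()
    DELETE_SKU = auto()
    START_PROD_PROCESS = auto()
    DELETE_PROD_PROCESS = auto()


# Every developer gets data lake access and SKU management.
DEVELOPER = frozenset(
    {Action.READ_DATA_LAKE, Action.CREATE_SKU, Action.DELETE_SKU}
)
# Only a select few also get to start or delete production processes.
PROD_OPERATOR = DEVELOPER | {Action.START_PROD_PROCESS, Action.DELETE_PROD_PROCESS}

ROLE_PERMISSIONS = {
    "developer": DEVELOPER,
    "prod_operator": PROD_OPERATOR,
}


def is_allowed(role, action):
    """Deny by default: unknown roles receive no permissions at all."""
    return action in ROLE_PERMISSIONS.get(role, frozenset())


print(is_allowed("developer", Action.DELETE_SKU))           # True
print(is_allowed("developer", Action.DELETE_PROD_PROCESS))  # False
print(is_allowed("prod_operator", Action.START_PROD_PROCESS))  # True
```

The deny-by-default lookup is the important design choice: a missing or misspelled role results in no access rather than accidental access.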

Offset real-world costs with real-world savings

Implemented correctly, the five best-practice cloud-service pillars we have described can achieve remarkable results. In a recent engagement, for instance, the BCG X team moved beyond simply resolving client-raised tickets to proactively applying these pillars, reducing the daily batch job runtime by one-third and achieving an additional 33% cost savings (approximately $55,000 per month) through ongoing cloud optimization. Overall, the engagement resulted in annual savings of around $600,000 for the client.

Cloud optimization is a journey of continuous improvement, a non-linear process that must be agile and flexible to accommodate the realities of a client’s changing needs. Initial assumptions that inform the original application design will evolve over time. As such, guidelines must be in place to drive design changes. The five pillars provide this guidance to architects, developers, and solution owners. But they should not be operationalized in a rigid, linear fashion. Rather, their implementation is best prioritized in terms of ROI. What makes sense? What is quickest? What is easiest? What is safest? And because these services are interconnected and bring with them countless permutations and combinations, they require phased implementation. With each iteration, both spend and performance should be re-evaluated in light of current conditions to achieve optimum cloud service value.